---
license: gpl-3.0
datasets:
- nkp37/OpenVid-1M
- TempoFunk/webvid-10M
base_model:
- VideoCrafter/VideoCrafter2
pipeline_tag: text-to-video
---

# Advanced Text-to-Video Diffusion Models

⚡️ This repository provides training recipes for the AMD efficient text-to-video models, which are designed for high performance and efficiency. The training process includes two key steps:

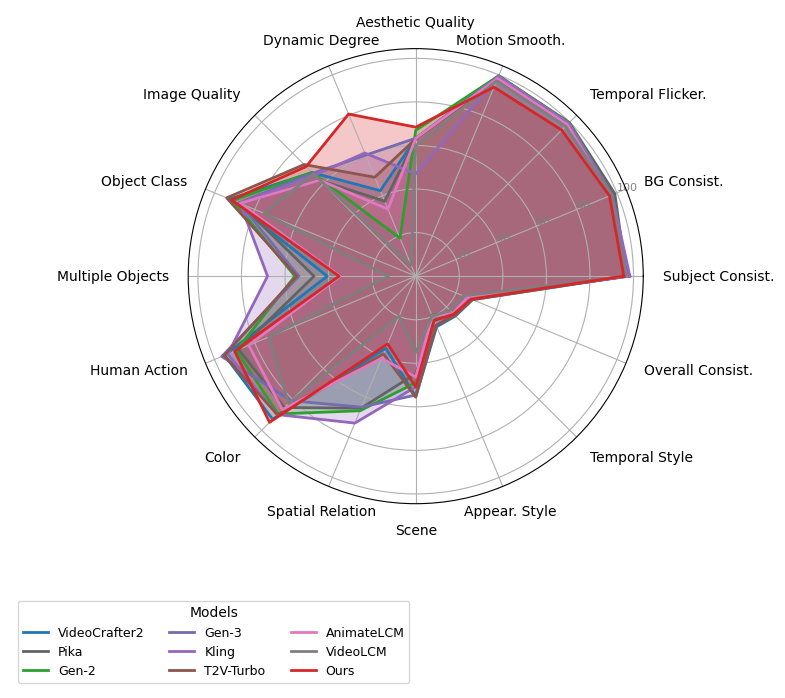

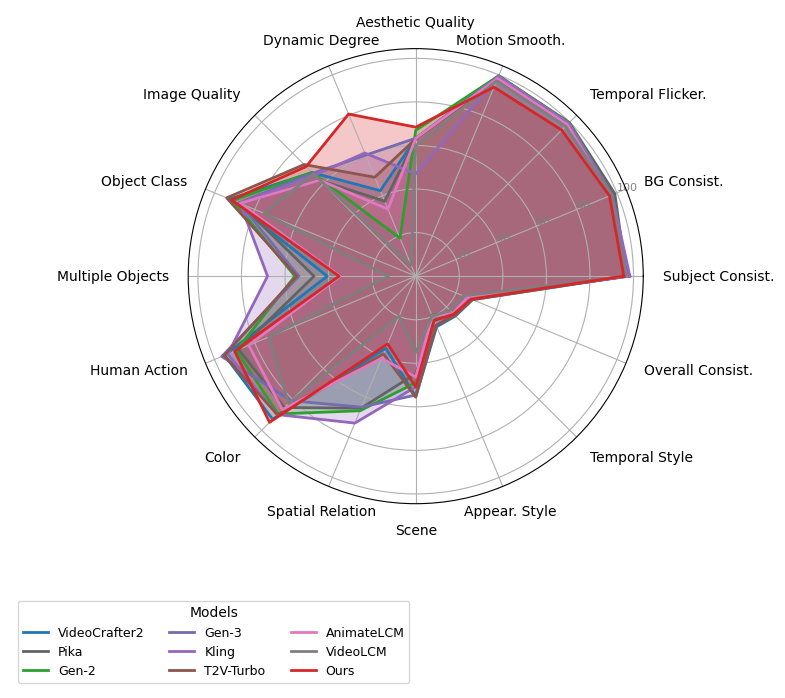

* Distillation and Pruning: We distill and prune the popular text-to-video model [VideoCrafter2](https://github.com/AILab-CVC/VideoCrafter), reducing the parameters to a compact 945M while maintaining competitive performance.

* Optimization with T2V-Turbo: We apply the [T2V-Turbo](https://github.com/Ji4chenLi/t2v-turbo) method on the distilled model to reduce inference steps and further enhance model quality.
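The card does not state which distillation objective is used. As a rough illustration only, the sketch below shows generic output-level knowledge distillation for a noise-prediction (epsilon) diffusion student: the student is pushed toward the teacher's predicted noise, optionally blended with the standard denoising target. The function names, the `alpha` weighting, and the use of plain Python lists instead of tensors are all assumptions made for this example, not the recipe's actual code; pruning is not covered.

```python
# Hypothetical sketch: output-level knowledge distillation loss for an
# epsilon-prediction diffusion student. Plain lists stand in for tensors;
# this is NOT the repository's actual training objective.


def mse(a: list[float], b: list[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(
    student_eps: list[float],
    teacher_eps: list[float],
    true_eps: list[float],
    alpha: float = 0.5,
) -> float:
    """Blend teacher matching with the usual denoising objective.

    alpha = 1.0 trains purely against the teacher's prediction;
    alpha = 0.0 reduces to standard epsilon-prediction training.
    """
    distill_term = mse(student_eps, teacher_eps)  # match the large teacher
    task_term = mse(student_eps, true_eps)        # match the sampled noise
    return alpha * distill_term + (1.0 - alpha) * task_term


# Toy predictions for a single noisy input (hypothetical values).
student = [0.1, -0.2, 0.3]
teacher = [0.0, -0.1, 0.4]
noise = [0.2, -0.3, 0.1]
print(round(distillation_loss(student, teacher, noise, alpha=0.7), 4))
```

In a real pipeline both predictions would come from the teacher (VideoCrafter2) and the smaller student evaluated on the same noisy latent and timestep, and the loss would be averaged over a batch.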

This implementation, optimized for AMD Instinct accelerators, is released to promote further research and innovation in efficient text-to-video generation.

**8-Step Results**

| A cute happy Corgi playing in park, sunset, pixel. | A cute happy Corgi playing in park, sunset, animated style. | A cute raccoon playing guitar in the beach. | A cute raccoon playing guitar in the forest. |
| --- | --- | --- | --- |
| A quiet beach at dawn and the waves gently lapping. | A cute teddy bear, dressed in a red silk outfit, stands in a vibrant street, Chinese New Year. | A sandcastle being eroded by the incoming tide. | An astronaut flying in space, in cyberpunk style. |
| A cat DJ at a party. | A 3D model of a 1800s victorian house. | A drone flying over a snowy forest. | A ghost ship navigating through a sea under a moon. |
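These results use 8 sampling steps. The card does not say which timesteps the sampler visits, so the sketch below is only an illustration of what a few-step schedule looks like. It assumes 1000 training timesteps and DDIM-style "leading" (evenly strided) spacing; both are assumptions, and the function name `leading_timesteps` is hypothetical. T2V-Turbo's actual scheduler may choose timesteps differently.

```python
# Hypothetical sketch: evenly strided ("leading") timesteps for few-step
# sampling. Assumes 1000 training timesteps; not the model's real scheduler.


def leading_timesteps(num_train_steps: int = 1000,
                      num_inference_steps: int = 8) -> list[int]:
    if not 0 < num_inference_steps <= num_train_steps:
        raise ValueError("num_inference_steps must be in [1, num_train_steps]")
    stride = num_train_steps // num_inference_steps
    # Visit timesteps from noisiest (largest t) to cleanest (t = 0).
    return [i * stride for i in reversed(range(num_inference_steps))]


print(leading_timesteps())  # [875, 750, 625, 500, 375, 250, 125, 0]
```

Each listed timestep costs one network evaluation, so an 8-step schedule needs 50 / 8 = 6.25 times fewer evaluations than, for instance, a 50-step schedule (`leading_timesteps(1000, 50)`, which starts at 980).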

# License

Copyright (c) 2024 Advanced Micro Devices, Inc. All Rights Reserved.
