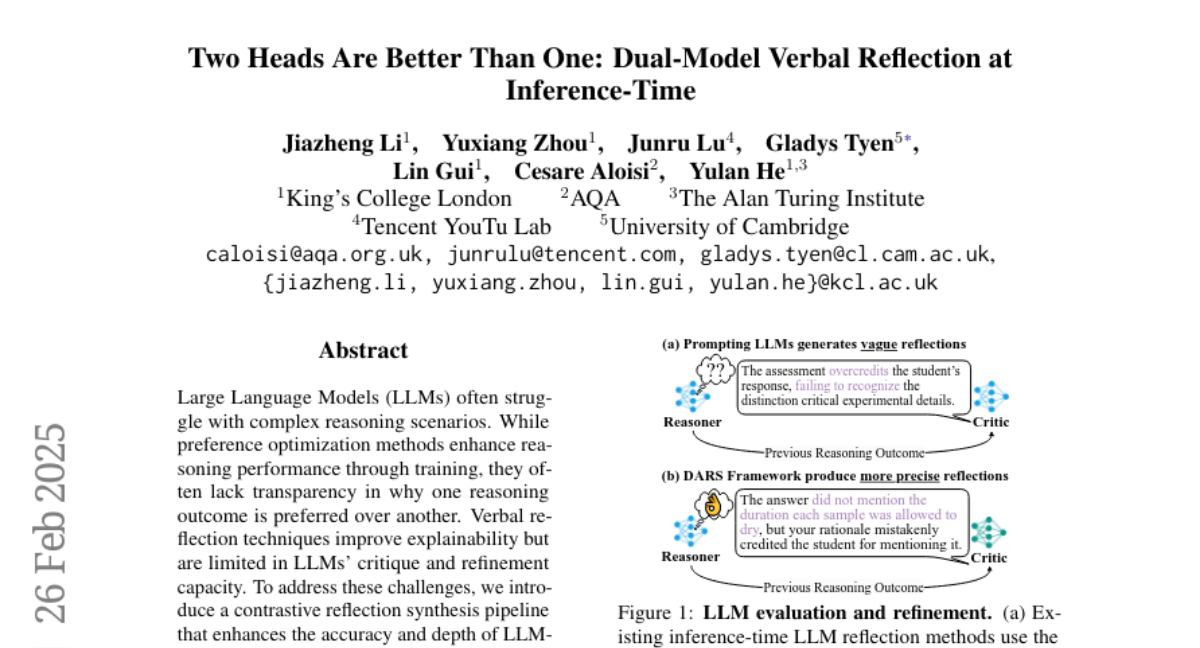

Two Heads Are Better Than One: Dual-Model Verbal Reflection at Inference-Time

J Li

jiazhengli

AI & ML interests

AI for Education

Recent Activity

commented on a paper about 1 month ago: SSA: Sparse Sparse Attention by Aligning Full and Sparse Attention Outputs in Feature Space

authored a paper about 1 month ago: SSA: Sparse Sparse Attention by Aligning Full and Sparse Attention Outputs in Feature Space

upvoted a paper about 1 month ago: SSA: Sparse Sparse Attention by Aligning Full and Sparse Attention Outputs in Feature Space