Swahili Gemma 1B

A fine-tuned Gemma 3 1B instruction model specialized for English-to-Swahili translation and Swahili conversational AI. The model accepts input in both English and Swahili but outputs responses exclusively in Swahili.

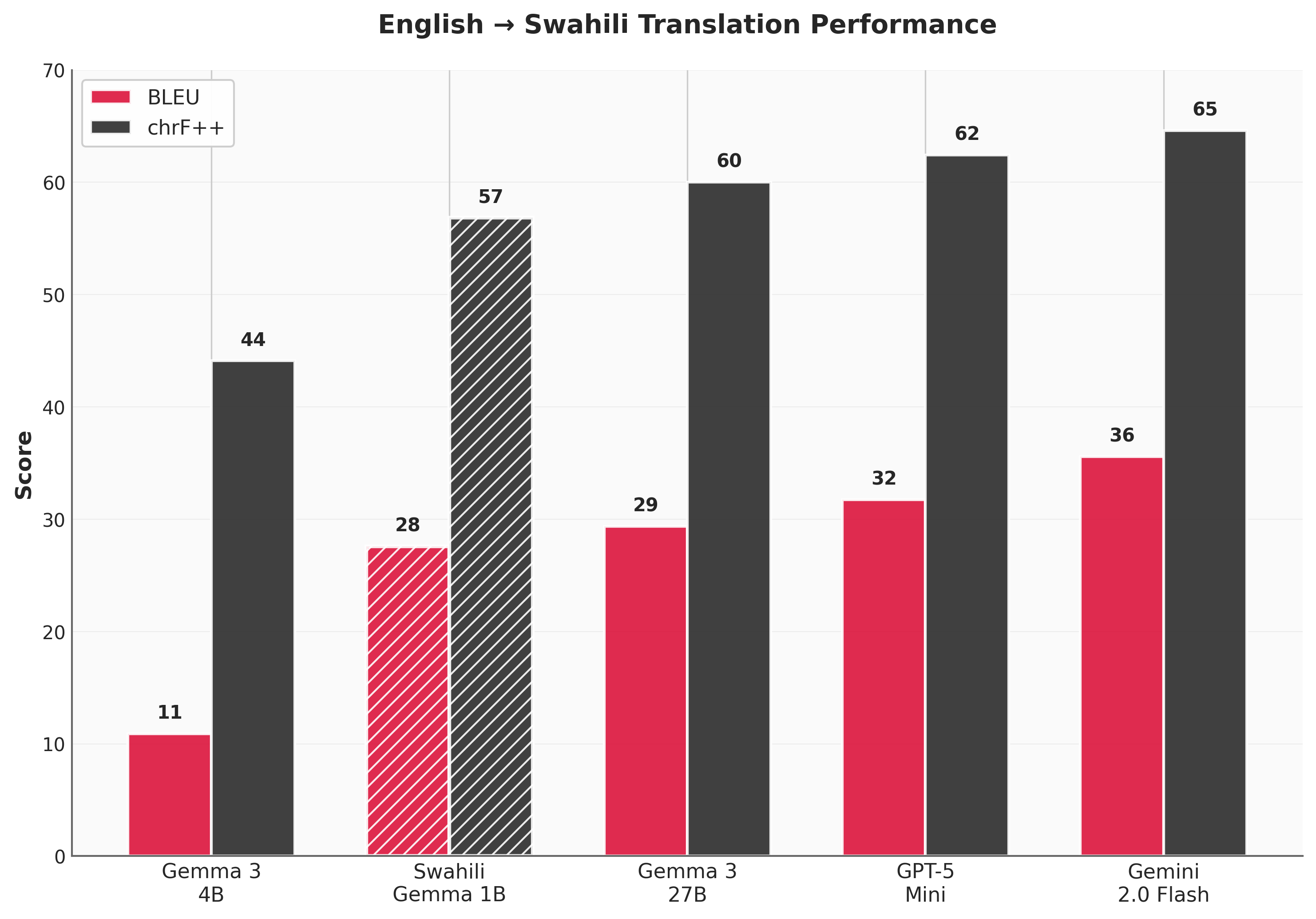

📊 Translation Performance

Model Comparison

| Model | Parameters | BLEU | chrF++ | Efficiency* |
|---|---|---|---|---|
| Gemma 3 4B | 4B | 10.9 | 44.1 | 2.7 |
| Swahili Gemma 1B | 1B | 27.6 | 56.8 | 27.6 |
| Gemma 3 27B | 27B | 29.4 | 60.0 | 1.1 |
| GPT-5 Mini | ~8B | 31.8 | 62.4 | 4.0 |
| Gemini 2.0 Flash | Large | 35.6 | 64.6 | N/A |

*Efficiency = BLEU Score / Parameters (in billions)

Key Performance Insights

🎯 Efficiency Leader: Achieves the highest BLEU-to-parameter ratio (27.6 BLEU per billion parameters)

🚀 Size Advantage: Scores 153% higher on BLEU than Gemma 3 4B (27.6 vs 10.9), a model 4x larger

💎 Competitive Quality: Reaches 94% of Gemma 3 27B's BLEU score (27.6 vs 29.4) with 27x fewer parameters

⚡ Practical Deployment: Small enough for consumer hardware; the recommended Q4_K_M GGUF build is about 769MB
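The efficiency column and the percentages above follow from simple arithmetic on the reported scores. The sketch below recomputes them from the table; the ~8B parameter count for GPT-5 Mini is the table's own approximation, and Gemini 2.0 Flash is left out because no parameter count is given. The helper names are illustrative.

```python
# (parameters in billions, BLEU, chrF++) as reported in the comparison table.
# GPT-5 Mini's parameter count is the table's ~8B approximation.
RESULTS = {
    "Gemma 3 4B": (4, 10.9, 44.1),
    "Swahili Gemma 1B": (1, 27.6, 56.8),
    "Gemma 3 27B": (27, 29.4, 60.0),
    "GPT-5 Mini": (8, 31.8, 62.4),
}


def efficiency(bleu: float, params_b: float) -> float:
    """BLEU points per billion parameters."""
    return bleu / params_b


def relative_gain(score: float, baseline: float) -> float:
    """Percentage by which score exceeds baseline."""
    return (score - baseline) / baseline * 100


def share_of(score: float, reference: float) -> float:
    """score expressed as a percentage of reference."""
    return score / reference * 100


for name, (params, bleu, _chrf) in RESULTS.items():
    print(f"{name}: {efficiency(bleu, params):.1f} BLEU per billion parameters")

_, ours_bleu, ours_chrf = RESULTS["Swahili Gemma 1B"]
_, g4_bleu, _ = RESULTS["Gemma 3 4B"]
_, g27_bleu, g27_chrf = RESULTS["Gemma 3 27B"]
print(f"BLEU gain over Gemma 3 4B: {relative_gain(ours_bleu, g4_bleu):.0f}%")
print(f"Share of Gemma 3 27B BLEU: {share_of(ours_bleu, g27_bleu):.0f}%")
print(f"Share of Gemma 3 27B chrF++: {share_of(ours_chrf, g27_chrf):.0f}%")
```

Measured by chrF++ instead of BLEU, the share of Gemma 3 27B's score comes out slightly higher (about 95%).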

Evaluation Details

- Dataset: FLORES-200 English→Swahili (1,012 translation pairs)

- Metrics: BLEU (bilingual evaluation understudy) and chrF++ (character n-gram F-score extended with word n-grams)

- Evaluation: Zero-shot translation performance
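To make the BLEU metric concrete, here is a minimal from-scratch sketch of corpus-level BLEU: clipped n-gram precision for n = 1..4, their geometric mean, and a brevity penalty. It tokenizes on whitespace and applies no smoothing, both assumptions for illustration; standard tools such as sacreBLEU use their own tokenization and smoothing, so this sketch will not reproduce the table's numbers exactly, and it does not implement chrF++.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU (0-100) with one reference per hypothesis.

    Simplified sketch: whitespace tokenization and no smoothing.
    """
    matches = [0] * max_n  # clipped n-gram matches per order
    totals = [0] * max_n   # hypothesis n-grams per order
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngram_counts(h, n), ngram_counts(r, n)
            # Clip each hypothesis n-gram count at its count in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # some order has no matches, so unsmoothed BLEU is zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter overall than the references.
    brevity = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity * math.exp(log_precision)
```

For example, `corpus_bleu(["the cat sat on the mat"], ["the cat sat on the mat today"])` has perfect n-gram precision but is one token short, so only the brevity penalty lowers the score below 100.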

🚀 Quick Start

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load model and tokenizer
model = AutoModelForCausalLM.from_pretrained("CraneAILabs/swahili-gemma-1b")
tokenizer = AutoTokenizer.from_pretrained("CraneAILabs/swahili-gemma-1b")

# Translate to Swahili
prompt = "Translate to Swahili: Hello, how are you today?"
inputs = tokenizer(prompt, return_tensors="pt")

# do_sample=True is required for temperature to take effect
outputs = model.generate(**inputs, max_length=100, temperature=0.3, do_sample=True)
response = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(response)
```
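Since the model is an instruction-tuned Gemma 3 variant, it may respond better when prompts are wrapped in the tokenizer's chat template instead of being passed as raw text. The sketch below assumes the repository ships a Gemma-style chat template (check `tokenizer.chat_template`); `build_chat_messages` and `chat_generate` are hypothetical helper names. It also decodes only the newly generated tokens, so the prompt is not echoed in the output.

```python
def build_chat_messages(user_text: str) -> list:
    """Wrap a single user turn in the role/content format used by chat templates."""
    return [{"role": "user", "content": user_text}]


def chat_generate(model, tokenizer, user_text: str, max_new_tokens: int = 128) -> str:
    """Generate a reply through the tokenizer's chat template (assumes one is defined)."""
    import torch  # imported here so the helpers can be defined without torch installed

    input_ids = tokenizer.apply_chat_template(
        build_chat_messages(user_text),
        add_generation_prompt=True,  # append the tokens that open the model's turn
        return_tensors="pt",
    ).to(model.device)
    with torch.no_grad():
        output_ids = model.generate(
            input_ids,
            attention_mask=torch.ones_like(input_ids),  # single unpadded sequence
            max_new_tokens=max_new_tokens,
            do_sample=True,
            temperature=0.3,
            top_p=0.95,
            top_k=64,
            repetition_penalty=1.1,
        )
    # Slice off the prompt so only the model's reply is decoded.
    new_tokens = output_ids[0, input_ids.shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

With `model` and `tokenizer` loaded as above: `print(chat_generate(model, tokenizer, "Translate to Swahili: Hello, how are you today?"))`.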

🌍 Language Capabilities

- Input Languages: English + Swahili

- Output Language: Swahili only

- Primary Focus: English-to-Swahili translation and Swahili conversation

🎯 Capabilities

- Translation: English-to-Swahili translation

- Conversational AI: Natural dialogue in Swahili

- Summarization: Text summarization in Swahili

- Writing: Creative and informational writing in Swahili

- Question Answering: General knowledge responses in Swahili

💻 Usage Examples

Basic Translation

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("CraneAILabs/swahili-gemma-1b")
tokenizer = AutoTokenizer.from_pretrained("CraneAILabs/swahili-gemma-1b")

# English to Swahili translation
prompt = "Translate to Swahili: Good morning, how did you sleep?"
inputs = tokenizer(prompt, return_tensors="pt")

with torch.no_grad():
    outputs = model.generate(
        **inputs,  # passes both input_ids and attention_mask
        max_length=128,
        temperature=0.3,
        top_p=0.95,
        top_k=64,
        repetition_penalty=1.1,
        do_sample=True,
        pad_token_id=tokenizer.eos_token_id,
    )

response = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(response)
```

Swahili Conversation

```python
# Direct Swahili conversation (reuses model and tokenizer from the example above)
prompt = "Hujambo! Je, unaweza kunisaidia leo?"  # "Hello! Can you help me today?"
inputs = tokenizer(prompt, return_tensors="pt")

outputs = model.generate(**inputs, max_length=100, temperature=0.3, do_sample=True)
response = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(response)
```

Using the Pipeline

```python
import torch
from transformers import pipeline

# Create a text generation pipeline (GPU 0 if available, otherwise CPU)
generator = pipeline(
    "text-generation",
    model="CraneAILabs/swahili-gemma-1b",
    tokenizer="CraneAILabs/swahili-gemma-1b",
    device=0 if torch.cuda.is_available() else -1,
)

# Generate Swahili text
result = generator(
    "Translate to Swahili: Welcome to our school",
    max_length=100,
    temperature=0.3,
    do_sample=True,
)
print(result[0]["generated_text"])
```

Ollama Usage

```bash
# Run the recommended Q4_K_M quantization
ollama run crane-ai-labs/swahili-gemma-1b:q4-k-m

# Try different quantizations based on your needs
ollama run crane-ai-labs/swahili-gemma-1b:q8-0    # Higher quality
ollama run crane-ai-labs/swahili-gemma-1b:q4-k-s  # Smaller size
ollama run crane-ai-labs/swahili-gemma-1b:f16     # Original quality
```
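Ollama also serves a local HTTP API (by default at `http://localhost:11434`), which is convenient for calling the model from Python. The sketch below posts to the `/api/generate` endpoint using only the standard library and maps the recommended sampling settings onto Ollama's option names. Note that `num_predict` caps only new tokens, so it approximates rather than matches `max_length`, which also counts the prompt. The helper names are hypothetical.

```python
import json
import urllib.request

# Ollama's default local endpoint; change it if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"
DEFAULT_MODEL = "crane-ai-labs/swahili-gemma-1b:q4-k-m"


def build_payload(prompt: str, model: str = DEFAULT_MODEL) -> dict:
    """Request body for /api/generate using the card's recommended sampling settings."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a token stream
        "options": {
            "temperature": 0.3,
            "top_p": 0.95,
            "top_k": 64,
            "repeat_penalty": 1.1,  # Ollama's name for repetition_penalty
            "num_predict": 128,     # caps new tokens only
        },
    }


def ollama_generate(prompt: str, model: str = DEFAULT_MODEL,
                    url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send prompt to a running Ollama server and return the generated text."""
    body = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the Ollama server running and the model pulled: `print(ollama_generate("Translate to Swahili: Welcome to our school"))`.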

Available Quantizations

| Quantization | Size | Quality | Use Case |
|---|---|---|---|
| f16 | ~1.9GB | Highest | Maximum quality inference |
| f32 | ~3.8GB | Highest | Research & benchmarking |
| q8-0 | ~1.0GB | Very High | Production with ample resources |
| q5-k-m | ~812MB | High | Balanced quality/size |
| q4-k-m | ~769MB | Good | Recommended for most users |
| q4-k-s | ~745MB | Good | Resource-constrained environments |
| q3-k-m | ~689MB | Fair | Mobile/edge deployment |
| q2-k | ~658MB | Lower | Minimal resource usage |
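Choosing a quantization largely comes down to fitting the model into available memory. The sketch below encodes the table's approximate sizes in quality order and returns the best tag that fits a memory budget. The 1.5x headroom factor for runtime overhead (KV cache, activations) is an assumption, not a measured figure, and f32 is omitted because the table positions it for research rather than deployment.

```python
# (tag, approximate file size in MB) from the table, best quality first.
# f32 is left out: the table lists it for research and benchmarking.
QUANTIZATIONS = [
    ("f16", 1900),
    ("q8-0", 1000),
    ("q5-k-m", 812),
    ("q4-k-m", 769),
    ("q4-k-s", 745),
    ("q3-k-m", 689),
    ("q2-k", 658),
]


def pick_quantization(budget_mb: float, headroom: float = 1.5):
    """Return the highest-quality tag whose size times headroom fits budget_mb, or None.

    headroom is an assumed multiplier for runtime overhead beyond the file size.
    """
    for tag, size_mb in QUANTIZATIONS:
        if size_mb * headroom <= budget_mb:
            return tag
    return None


tag = pick_quantization(1200)
if tag is not None:
    print(f"ollama run crane-ai-labs/swahili-gemma-1b:{tag}")
```

With a 1,200MB budget the sketch lands on q4-k-m, the table's recommended default; tune `headroom` to your own measurements.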

💡 Generation Parameters

Recommended settings for optimal results:

```python
# Assumes `tokenizer` has been loaded as in the examples above
generation_config = {
    "temperature": 0.3,         # Focused, coherent responses
    "top_p": 0.95,              # Nucleus sampling
    "top_k": 64,                # Top-k sampling
    "max_length": 128,          # Total length limit (prompt + response)
    "repetition_penalty": 1.1,  # Reduces repetition
    "do_sample": True,
    "pad_token_id": tokenizer.eos_token_id,
}
```
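The dictionary above can be unpacked directly into `model.generate(**inputs, **generation_config)`. Because `max_length` counts prompt tokens as well as the reply, a long prompt shrinks the space left for the answer. The sketch below (a hypothetical helper, not part of any library) builds the same settings and optionally swaps in `max_new_tokens`, which in `transformers` bounds only the generated continuation.

```python
def recommended_generation_kwargs(tokenizer, max_new_tokens=None) -> dict:
    """Return the card's recommended sampling settings as generate() keyword arguments.

    If max_new_tokens is given, it replaces max_length so the prompt's
    length no longer eats into the reply budget.
    """
    config = {
        "temperature": 0.3,
        "top_p": 0.95,
        "top_k": 64,
        "max_length": 128,
        "repetition_penalty": 1.1,
        "do_sample": True,
        "pad_token_id": tokenizer.eos_token_id,
    }
    if max_new_tokens is not None:
        del config["max_length"]
        config["max_new_tokens"] = max_new_tokens
    return config
```

Usage: `outputs = model.generate(**inputs, **recommended_generation_kwargs(tokenizer, max_new_tokens=128))`.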

🔗 Related Models

- GGUF Quantizations: CraneAILabs/swahili-gemma-1b-GGUF - Optimized for llama.cpp/Ollama

- LiteRT Mobile: CraneAILabs/swahili-gemma-1b-litert - Mobile deployment

- Ollama: crane-ai-labs/swahili-gemma-1b - Ready-to-run with Ollama

🎨 Use Cases

- Language Learning: Practice English-Swahili translation

- Cultural Preservation: Create and document Swahili content

- Educational Tools: Swahili learning assistants

- Content Localization: Translate materials to Swahili

- Conversational Practice: Improve Swahili dialogue skills

- Text Summarization: Summarize content in Swahili

⚠️ Limitations

- Language Output: Responds only in Swahili

- Factual Knowledge: General knowledge only, not trained on specific factual datasets

- No Coding/Math: Not designed for programming or mathematical tasks

- Context Length: Keep inputs within 4,096 tokens for optimal performance

- Specialized Domains: May require domain-specific fine-tuning
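For multi-turn conversations, the 4,096-token guidance means older turns eventually need to be dropped. The sketch below trims the oldest messages until the rest fit, reserving room for the reply; `trim_history`, the 128-token reserve, and the `count_tokens` callable are illustrative assumptions. It also drops leading assistant turns, because Gemma's standard chat templates expect the conversation to start with a user turn and alternate roles.

```python
def trim_history(messages, count_tokens, budget=4096, reserve=128):
    """Drop the oldest messages until the remainder fits in budget - reserve tokens.

    messages: list of {"role": ..., "content": ...} dicts, oldest first.
    count_tokens: callable returning the token count of a string. Only message
    contents are counted, so template control tokens add a little on top.
    The newest message is always kept, even if it alone exceeds the limit.
    """
    limit = budget - reserve
    kept = list(messages)  # copy so the caller's list is not modified

    def total(msgs):
        return sum(count_tokens(m["content"]) for m in msgs)

    while len(kept) > 1 and total(kept) > limit:
        kept.pop(0)
        # Keep the history starting on a user turn so roles still alternate.
        while len(kept) > 1 and kept[0]["role"] != "user":
            kept.pop(0)
    return kept
```

With a Hugging Face tokenizer, a token counter could be `lambda s: len(tokenizer(s).input_ids)`.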

📄 License

This model is released under the Gemma Terms of Use. Please review the terms before use.

🙏 Acknowledgments

- Google: For the Gemma 3 base model, support and guidance.

- Community: For Swahili language resources and datasets

- Gilbert Korir (Msingi AI, Nairobi, Kenya)

- Alfred Malengo Kondoro (Hanyang University, Seoul, South Korea)

Citation

If you use this model in your research or applications, please cite:

```bibtex
@misc{crane_ai_labs_2025,
  author = {Bakunga Bronson and Kato Steven Mubiru and Lwanga Caleb and Gimei Alex and Kavuma Lameck and Roland Ganafa and Sibomana Glorry and Atuhaire Collins and JohnRoy Nangeso and Tukamushaba Catherine},
  title = {Swahili Gemma: A Fine-tuned Gemma 3 1B Model for Swahili conversational AI},
  year = {2025},
  url = {https://huggingface.co/CraneAILabs/swahili-gemma-1b},
  organization = {Crane AI Labs}
}
```

Built with ❤️ by Crane AI Labs

Swahili Gemma - Your helpful Swahili AI companion


Base model: google/gemma-3-1b-pt