SaplingDream

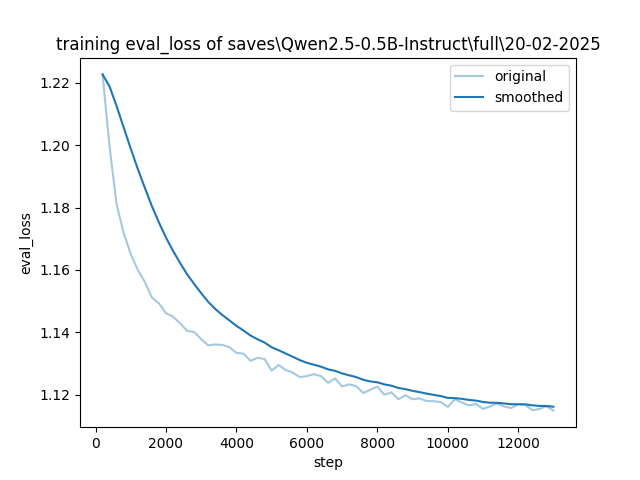

Introducing SaplingDream, a compact GPT model with 0.5 billion parameters, based on the Qwen/Qwen2.5-0.5B-Instruct architecture. The model was fine-tuned on a single RTX 4060 8GB for a bit over two days on ~0.3B tokens...
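To put those figures in perspective, here is a rough back-of-the-envelope sketch of the implied average training throughput and the tokens-per-parameter ratio. It uses only the approximate numbers quoted above; the constant and function names are illustrative, and because training took "a bit over" two days, the throughput is an upper estimate rather than a measured value.

```python
SECONDS_PER_DAY = 86_400


def tokens_per_second(total_tokens: float, days: float) -> float:
    """Average throughput in tokens per second over a training run."""
    return total_tokens / (days * SECONDS_PER_DAY)


# Approximate figures taken from the description above (assumed round values).
TOTAL_TOKENS = 0.3e9  # "~0.3B tokens"
TRAIN_DAYS = 2.0      # "a bit over two days", so the real duration is longer
PARAMETERS = 0.5e9    # "0.5 billion parameters"

throughput = tokens_per_second(TOTAL_TOKENS, TRAIN_DAYS)
tokens_per_param = TOTAL_TOKENS / PARAMETERS

print(f"average throughput: at most ~{throughput:,.0f} tokens/s")
print(f"fine-tuning tokens per parameter: ~{tokens_per_param:.1f}")
```

Under these assumptions the run averaged somewhat under 1,750 tokens per second and saw roughly 0.6 fine-tuning tokens per parameter.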

Evaluation Loss Chart

Base model

Qwen/Qwen2.5-0.5B