Llama-3.1-8B Sonnet fine-tuning

Fine-tuned with Unsloth. Startup banner from the training run:

    ==((====))==  Unsloth 2025.2.4: Fast Llama patching. Transformers: 4.48.2.
       \\   /|    GPU: NVIDIA A100-SXM4-40GB. Max memory: 39.557 GB. Platform: Linux.
    O^O/ \_/ \    Torch: 2.5.1+cu124. CUDA: 8.0. CUDA Toolkit: 12.4. Triton: 3.1.0
    \        /    Bfloat16 = TRUE. FA [Xformers = 0.0.29. FA2 = False]
     "-____-"     Free Apache license: http://github.com/unslothai/unsloth

Original model: https://huggingface.co/meta-llama/Llama-3.1-8B

Fine-tuned on open Sonnet datasets containing roughly 360k question/answer pairs.

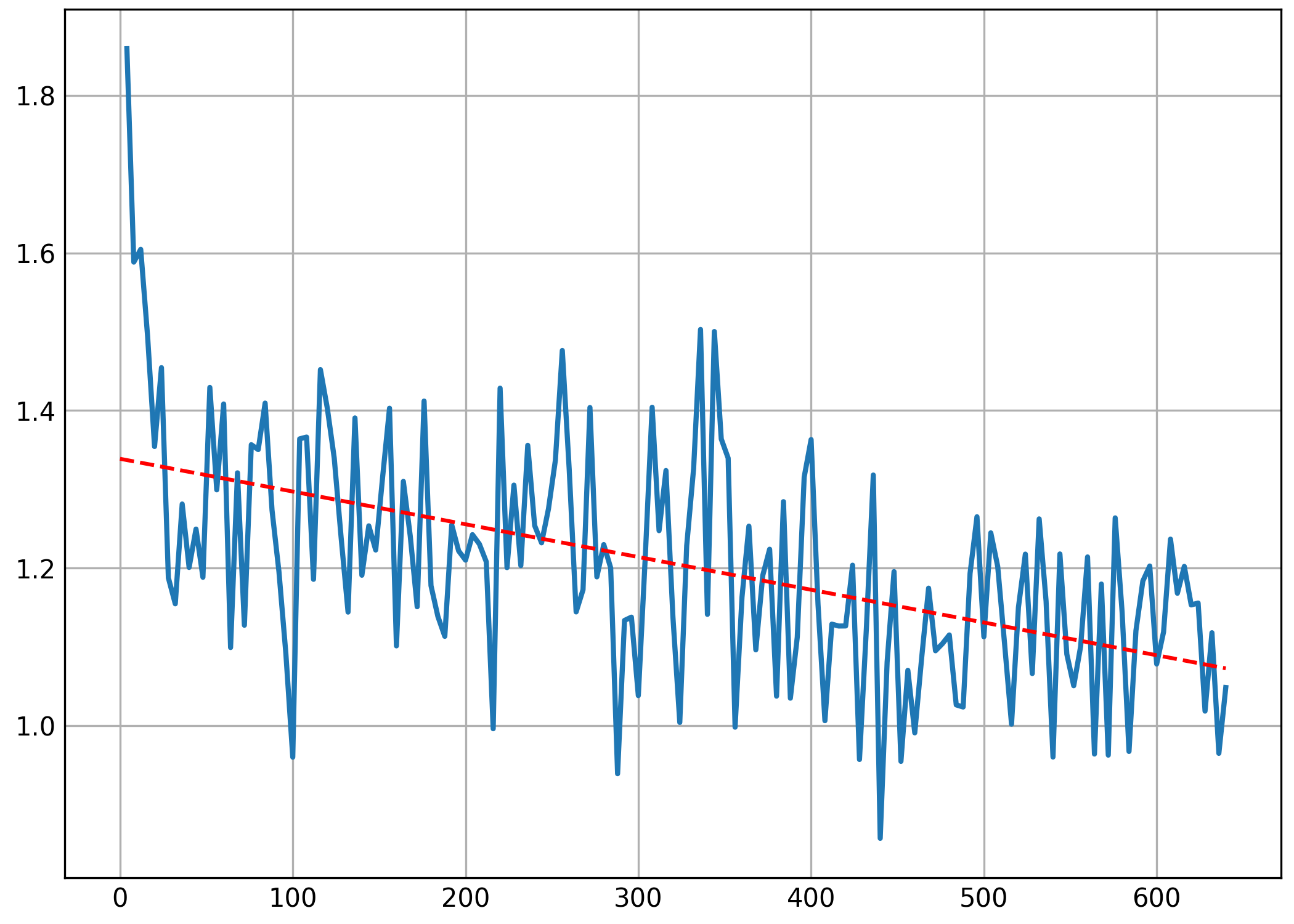

Training loss

Prompt format

<|begin_of_text|>{prompt}
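As a minimal sketch of the format above, the helper below prepends the `<|begin_of_text|>` token to the raw prompt text. The names `BOS_TOKEN` and `build_prompt` are hypothetical and chosen for illustration; they are not part of this model or of any library.

```python
# Hypothetical helper illustrating the prompt format on this card.
BOS_TOKEN = "<|begin_of_text|>"


def build_prompt(prompt: str) -> str:
    """Return the beginning-of-text token followed by the raw prompt text."""
    return f"{BOS_TOKEN}{prompt}"


if __name__ == "__main__":
    print(build_prompt("Why is the sky blue?"))
```

Many tokenizer configurations for Llama 3.1 already add `<|begin_of_text|>` when encoding. If yours does, either pass the raw prompt without the token or disable automatic special tokens at encoding time so the token is not duplicated. Check this against your own setup, since it is an assumption about typical configurations.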

Release History

- v0.1 - [2025-02-08] Initial release, trained for 512 steps

- v0.2 - [2025-02-10] Restarted training with a cleaner dataset, ran for 640 steps

Credits

Thanks to Meta, mlfoundations-dev, and Gryphe for providing the data used to create this fine-tune.
