gguf quantized version of wan2.2 models

- drag wan to > ./ComfyUI/models/diffusion_models
- drag umt5xxl to > ./ComfyUI/models/text_encoders
- drag pig to > ./ComfyUI/models/vae
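
The three drag steps above amount to sorting the downloaded files by name prefix. Below is a minimal Python sketch of that step. It assumes the files were downloaded into a single folder, that their names start with `wan`, `umt5xxl` or `pig` (check the real file names in the repo), and that only `.gguf` and `.safetensors` files should be moved. The names `DESTINATIONS`, `destination_for` and `place_files` are hypothetical helpers, not part of ComfyUI or gguf-node.

```python
import shutil
from pathlib import Path
from typing import List, Optional

# Hypothetical name prefixes -> ComfyUI subfolders, following the steps above.
# Verify the real file names in the repo before relying on these prefixes.
DESTINATIONS = {
    "wan": Path("models") / "diffusion_models",
    "umt5xxl": Path("models") / "text_encoders",
    "pig": Path("models") / "vae",
}

# Assumption: only model weight files are moved; anything else is left alone.
MODEL_SUFFIXES = {".gguf", ".safetensors"}


def destination_for(filename: str, comfy_root: Path) -> Optional[Path]:
    """Return the ComfyUI folder a file belongs in, or None if no prefix matches."""
    name = filename.lower()
    for prefix, subdir in DESTINATIONS.items():
        if name.startswith(prefix):
            return comfy_root / subdir
    return None


def place_files(download_dir: Path, comfy_root: Path) -> List[Path]:
    """Move recognised model files from download_dir into comfy_root's model folders."""
    moved = []
    for src in sorted(download_dir.iterdir()):
        if not src.is_file() or src.suffix.lower() not in MODEL_SUFFIXES:
            continue
        dest_dir = destination_for(src.name, comfy_root)
        if dest_dir is None:
            continue  # unknown file: leave it where it is
        dest_dir.mkdir(parents=True, exist_ok=True)
        target = dest_dir / src.name
        shutil.move(str(src), str(target))
        moved.append(target)
    return moved
```

For example, `place_files(Path("downloads"), Path("ComfyUI"))` moves recognised files out of a `downloads` folder and returns their new paths. Files that match no prefix stay in place, so a renamed or unexpected file is never dropped into the wrong folder.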

- Prompt

- a cute anime girl picking up a little pinky pig and moving quickly

- Negative Prompt

- blurry ugly bad

- Prompt

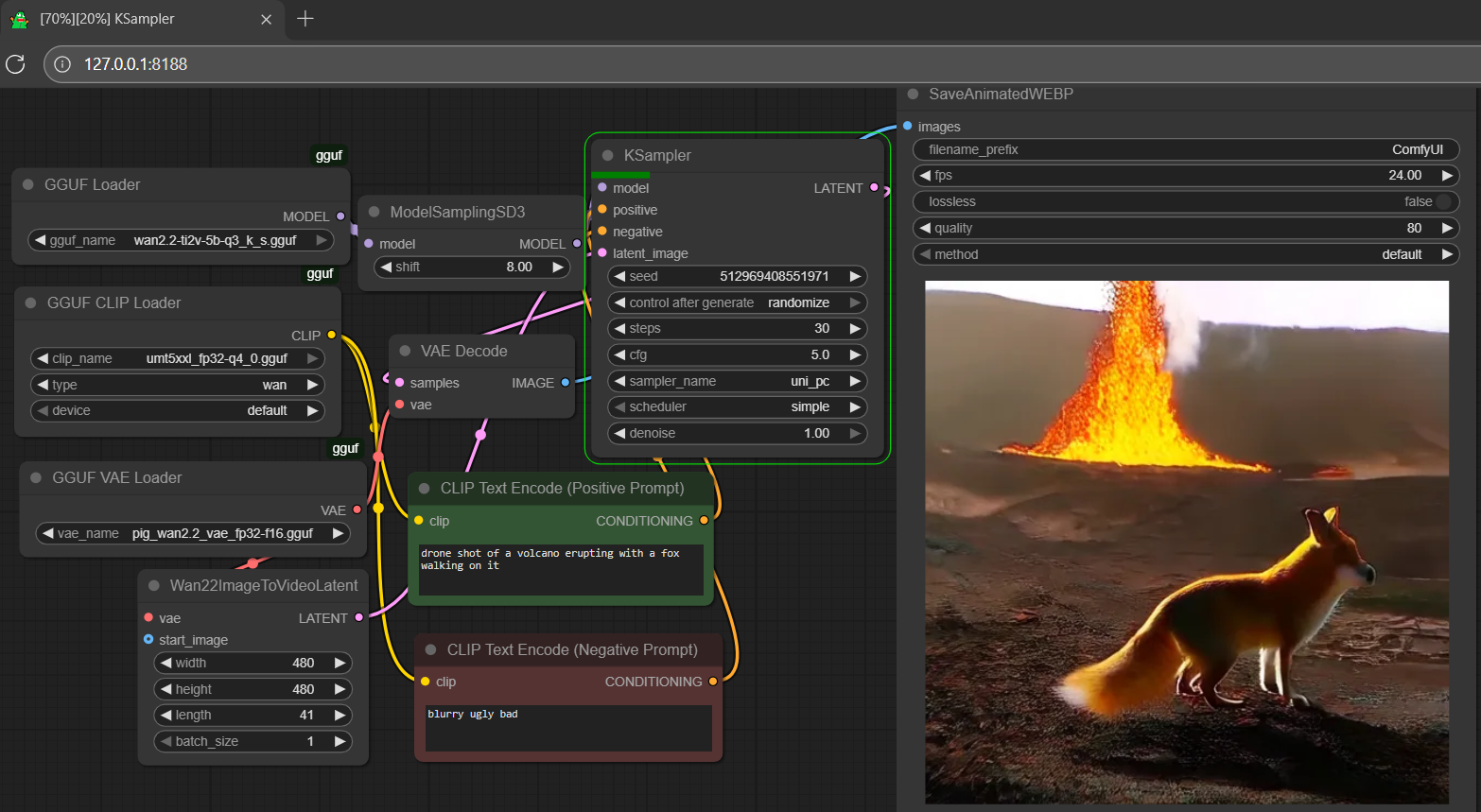

- drone shot of a volcano erupting with a pig walking on it

- Negative Prompt

- blurry ugly bad

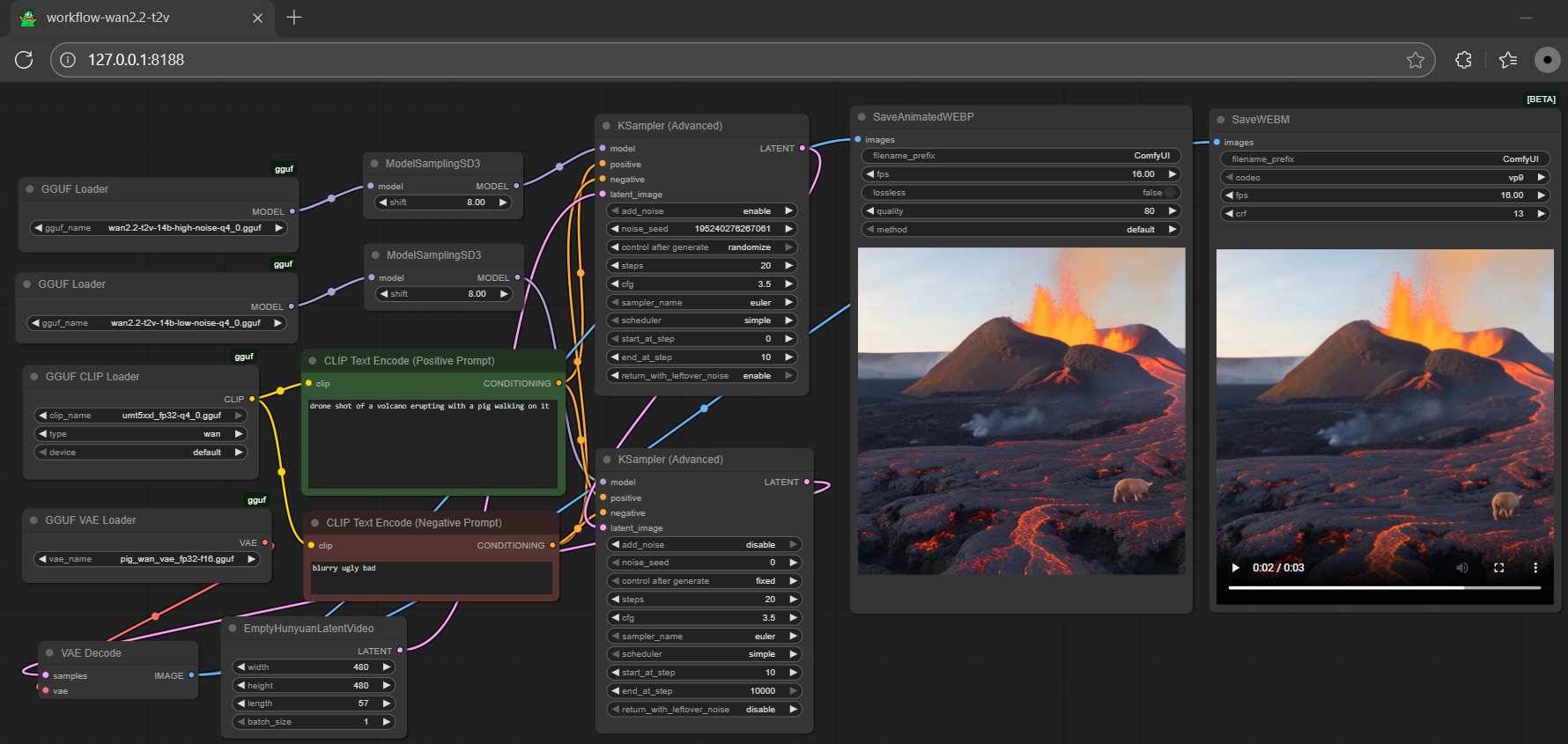

- Prompt

- drone shot of a volcano erupting with a pig walking on it

- Negative Prompt

- blurry ugly bad
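
Each example above pairs a prompt with a negative prompt. In a ComfyUI workflow these typically become two `CLIPTextEncode` nodes fed by the same text encoder. The sketch below builds those two nodes in ComfyUI's API (JSON) workflow format. The node ids `"6"` and `"7"` and the text-encoder loader id `"38"` are hypothetical placeholders. Match them to the ids in your own workflow exported in API format. `text_encode_nodes` is an illustrative helper, not part of ComfyUI.

```python
import json


def text_encode_nodes(prompt: str, negative: str,
                      pos_id: str = "6", neg_id: str = "7",
                      clip_loader_id: str = "38") -> dict:
    """Return positive/negative CLIPTextEncode nodes in ComfyUI API format.

    All node ids are hypothetical placeholders; replace them with the ids
    from your own workflow.
    """
    return {
        pos_id: {
            "class_type": "CLIPTextEncode",
            # [node_id, output_index]: output 0 of the text-encoder loader node
            "inputs": {"text": prompt, "clip": [clip_loader_id, 0]},
        },
        neg_id: {
            "class_type": "CLIPTextEncode",
            "inputs": {"text": negative, "clip": [clip_loader_id, 0]},
        },
    }


if __name__ == "__main__":
    nodes = text_encode_nodes(
        "a cute anime girl picking up a little pinky pig and moving quickly",
        "blurry ugly bad",
    )
    print(json.dumps(nodes, indent=2))
```

The resulting dict is only a fragment. It has to be merged into a complete API-format workflow (loaders, sampler, VAE decode) before ComfyUI can run it.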

tip: for 5b model, use pig-wan2-vae [1.41GB]

tip: for 14b model, use pig-wan-vae [254MB]
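
The tips reduce to a lookup from model size to VAE. The sketch below assumes the model size appears in the model file name as `5b` or `14b` in any case, as in hypothetical names such as `wan2.2_ti2v_5B_Q4_K_M.gguf`. It returns only the name stems given in the tips, because the exact VAE file names and extensions are not stated here. `pick_vae` is a hypothetical helper.

```python
import re

# Pairing taken from the tips above: model size -> (VAE name stem, approx. size).
VAE_FOR_SIZE = {
    "5b": ("pig-wan2-vae", "1.41GB"),
    "14b": ("pig-wan-vae", "254MB"),
}

# Assumption: the size appears as "5b"/"14b" not preceded by another digit,
# so "15b" is not read as "5b" and "14b" is not read as "4b".
SIZE_PATTERN = re.compile(r"(?<!\d)(5|14)b")


def pick_vae(model_filename: str) -> str:
    """Return the recommended VAE name stem for a Wan2.2 model file name."""
    match = SIZE_PATTERN.search(model_filename.lower())
    if match is None:
        raise ValueError(f"cannot infer 5b/14b from {model_filename!r}")
    stem, _size = VAE_FOR_SIZE[match.group(1) + "b"]
    return stem
```

Raising on an unrecognised name is deliberate: the two VAEs are not interchangeable, so guessing a default would silently pair a model with the wrong VAE.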

update

- upgrade your node (see the last item under reference) for new/full quant support

- get more umt5xxl gguf encoders either here or here

reference

- base model from wan-ai

- comfyui from comfyanonymous

- gguf-node (pypi|repo|pack)


model tree for calcuis/wan2-gguf

- base model: Comfy-Org/Wan_2.2_ComfyUI_Repackaged