| id | author | task_category | tags | created_time | last_modified | downloads | likes |
|---|---|---|---|---|---|---|---|
| TheBloke/finance-LLM-GGUF | TheBloke | text-generation | transformers, gguf, llama, finance, text-generation, en, dataset:Open-Orca/OpenOrca, dataset:GAIR/lima, dataset:WizardLM/WizardLM_evol_instruct_V2_196k, arxiv:2309.09530, base_model:AdaptLLM/finance-LLM, base_model:quantized:AdaptLLM/finance-LLM, license:other, region:us | 2023-12-24T21:28:55Z | 2023-12-24T21:33:31+00:00 | 757 | 19 |

---

base_model: AdaptLLM/finance-LLM

datasets:

- Open-Orca/OpenOrca

- GAIR/lima

- WizardLM/WizardLM_evol_instruct_V2_196k

language:

- en

license: other

metrics:

- accuracy

model_name: Finance LLM

pipeline_tag: text-generation

tags:

- finance

inference: false

model_creator: AdaptLLM

model_type: llama

prompt_template: '[INST] <<SYS>>

  {system_message}

  <</SYS>>

  {prompt} [/INST]

  '

quantized_by: TheBloke

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Finance LLM - GGUF

- Model creator: [AdaptLLM](https://huggingface.co/AdaptLLM)

- Original model: [Finance LLM](https://huggingface.co/AdaptLLM/finance-LLM)

<!-- description start -->

## Description

This repo contains GGUF format model files for [AdaptLLM's Finance LLM](https://huggingface.co/AdaptLLM/finance-LLM).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for storytelling.

* [GPT4All](https://gpt4all.io/index.html), a free and open-source, locally running GUI, supporting Windows, Linux and macOS with full GPU accel.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Apple Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy-to-use character-based chat GUI for Windows and macOS (both Apple Silicon and Intel), with GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server. Note: as of the time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/finance-LLM-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/finance-LLM-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/finance-LLM-GGUF)

* [AdaptLLM's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/AdaptLLM/finance-LLM)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Llama-2-Chat

```
[INST] <<SYS>>
{system_message}
<</SYS>>
{prompt} [/INST]
```
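The template can be filled in Python before the text is passed to any of the clients above. This is a minimal sketch that assumes the line layout used by the `llama.cpp` and `llama-cpp-python` examples in this README, with one newline after `<<SYS>>`, after the system message and after `<</SYS>>`. `build_prompt` is a hypothetical helper and is not part of any library. The original model card below describes this model as a base model developed from LLaMA-1-7B, so output quality with a chat-style template may vary.

```python
def build_prompt(prompt: str, system_message: str = "") -> str:
    """Fill the Llama-2-Chat template shown above (hypothetical helper)."""
    return f"[INST] <<SYS>>\n{system_message}\n<</SYS>>\n{prompt} [/INST]"


if __name__ == "__main__":
    text = build_prompt(
        "What does a rising debt-to-equity ratio indicate?",
        system_message="You are a helpful financial assistant.",
    )
    print(text)
```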

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th 2023 onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221).

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.
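You only need the first few bytes of a downloaded file to check which GGUF version it uses. This minimal sketch follows the header layout in the ggml project's GGUF specification. The file starts with the 4-byte magic `GGUF` and a little-endian uint32 version. Next come the tensor count and the metadata key/value count, which are uint64 from version 2 onward (version 1 used uint32). `read_gguf_header` is a hypothetical helper, and it does not handle big-endian files.

```python
import struct
from pathlib import Path


def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file header.

    Assumes a little-endian file: 4-byte magic b"GGUF", a uint32 version,
    then the two counts (uint64 from version 2 onward, uint32 in version 1).
    """
    with open(path, "rb") as f:
        magic = f.read(4)
        if magic != b"GGUF":
            raise ValueError(f"not a GGUF file (magic={magic!r})")
        (version,) = struct.unpack("<I", f.read(4))
        if version < 2:
            # GGUFv1 stored both counts as uint32
            tensor_count, kv_count = struct.unpack("<II", f.read(8))
        else:
            tensor_count, kv_count = struct.unpack("<QQ", f.read(16))
    return version, tensor_count, kv_count


if __name__ == "__main__":
    path = Path("finance-llm.Q4_K_M.gguf")  # a locally downloaded file
    if path.exists():
        print(read_gguf_header(path))
```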

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are listed below. "Type-0" methods reconstruct each weight as the block scale times the quantized value. "Type-1" methods also add a block minimum.

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K, resulting in 5.5 bpw.

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
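The bits-per-weight figures above can be reproduced with simple arithmetic. In every format described, a super-block holds 256 weights (16 × 16 or 8 × 32). The sketch also assumes that each super-block stores one fp16 scale, plus one fp16 min for the "type-1" formats. That detail comes from llama.cpp's k-quant layout, not from this README. Under those assumptions, the sketch reproduces the quoted values for Q3_K through Q6_K. `bits_per_weight` and `K_QUANTS` are hypothetical names.

```python
SUPER_BLOCK_WEIGHTS = 256  # 16 blocks x 16 weights, or 8 blocks x 32 weights
FP16_BITS = 16


def bits_per_weight(n_blocks, weight_bits, scale_bits, type1):
    """Average storage cost per weight of one k-quant super-block.

    n_blocks    -- blocks per super-block (each block has its own quantized scale)
    weight_bits -- bits per quantized weight
    scale_bits  -- bits per quantized block scale (and per block min for type-1)
    type1       -- True for "type-1" formats, which store a min next to each scale
    """
    per_block = 2 if type1 else 1  # scale only, or scale + min
    total = SUPER_BLOCK_WEIGHTS * weight_bits  # the quantized weights themselves
    total += n_blocks * per_block * scale_bits  # quantized block scales (and mins)
    total += per_block * FP16_BITS  # assumed fp16 super-block scale (and min)
    return total / SUPER_BLOCK_WEIGHTS


K_QUANTS = {
    # name: (blocks per super-block, weight bits, scale bits, type-1?)
    "Q3_K": (16, 3, 6, False),
    "Q4_K": (8, 4, 6, True),
    "Q5_K": (8, 5, 6, True),
    "Q6_K": (16, 6, 8, False),
}

if __name__ == "__main__":
    for name, params in K_QUANTS.items():
        print(f"{name}: {bits_per_weight(*params)} bpw")
```

For Q4_K, for example: 256 × 4 bits of weights, plus 8 × 2 × 6 bits of scales and mins, plus 2 × 16 bits of super-block parameters, gives 1152 bits. Dividing by 256 weights gives 4.5 bpw.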

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| [finance-llm.Q2_K.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q2_K.gguf) | Q2_K | 2 | 2.83 GB| 5.33 GB | smallest, significant quality loss - not recommended for most purposes |
| [finance-llm.Q3_K_S.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q3_K_S.gguf) | Q3_K_S | 3 | 2.95 GB| 5.45 GB | very small, high quality loss |
| [finance-llm.Q3_K_M.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q3_K_M.gguf) | Q3_K_M | 3 | 3.30 GB| 5.80 GB | very small, high quality loss |
| [finance-llm.Q3_K_L.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q3_K_L.gguf) | Q3_K_L | 3 | 3.60 GB| 6.10 GB | small, substantial quality loss |
| [finance-llm.Q4_0.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q4_0.gguf) | Q4_0 | 4 | 3.83 GB| 6.33 GB | legacy; small, very high quality loss - prefer using Q3_K_M |
| [finance-llm.Q4_K_S.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q4_K_S.gguf) | Q4_K_S | 4 | 3.86 GB| 6.36 GB | small, greater quality loss |
| [finance-llm.Q4_K_M.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q4_K_M.gguf) | Q4_K_M | 4 | 4.08 GB| 6.58 GB | medium, balanced quality - recommended |
| [finance-llm.Q5_0.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q5_0.gguf) | Q5_0 | 5 | 4.65 GB| 7.15 GB | legacy; medium, balanced quality - prefer using Q4_K_M |
| [finance-llm.Q5_K_S.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q5_K_S.gguf) | Q5_K_S | 5 | 4.65 GB| 7.15 GB | large, low quality loss - recommended |
| [finance-llm.Q5_K_M.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q5_K_M.gguf) | Q5_K_M | 5 | 4.78 GB| 7.28 GB | large, very low quality loss - recommended |
| [finance-llm.Q6_K.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q6_K.gguf) | Q6_K | 6 | 5.53 GB| 8.03 GB | very large, extremely low quality loss |
| [finance-llm.Q8_0.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q8_0.gguf) | Q8_0 | 8 | 7.16 GB| 9.66 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
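In the table, every "Max RAM required" figure is the file size plus 2.50 GB, so choosing a file for a given machine is a simple lookup. This is a minimal sketch. `pick_quant` is a hypothetical helper, the numbers are copied from the table, and it ignores GPU offloading, which lowers the RAM needed. By default it skips the legacy formats (Q4_0, Q5_0), following the table's advice to prefer the k-quant alternatives.

```python
# (quant method, file size in GB, max RAM required in GB, legacy?) from the table above
QUANTS = [
    ("Q2_K", 2.83, 5.33, False),
    ("Q3_K_S", 2.95, 5.45, False),
    ("Q3_K_M", 3.30, 5.80, False),
    ("Q3_K_L", 3.60, 6.10, False),
    ("Q4_0", 3.83, 6.33, True),
    ("Q4_K_S", 3.86, 6.36, False),
    ("Q4_K_M", 4.08, 6.58, False),
    ("Q5_0", 4.65, 7.15, True),
    ("Q5_K_S", 4.65, 7.15, False),
    ("Q5_K_M", 4.78, 7.28, False),
    ("Q6_K", 5.53, 8.03, False),
    ("Q8_0", 7.16, 9.66, False),
]


def pick_quant(ram_budget_gb, allow_legacy=False):
    """Return the largest file whose max RAM fits the budget (no GPU offload), or None."""
    candidates = [
        q for q in QUANTS
        if q[2] <= ram_budget_gb and (allow_legacy or not q[3])
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda q: q[2])
    return f"finance-llm.{best[0]}.gguf"


if __name__ == "__main__":
    print(pick_quant(8.0))  # finance-llm.Q5_K_M.gguf
```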

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, enter the model repo `TheBloke/finance-LLM-GGUF` and, below it, a specific filename to download, such as `finance-llm.Q4_K_M.gguf`.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/finance-LLM-GGUF finance-llm.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/finance-LLM-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

Then set the environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/finance-LLM-GGUF finance-llm.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>
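The same downloads can be scripted from Python with `huggingface_hub`. This is a minimal sketch. `gguf_filename`, `download` and `download_pattern` are hypothetical helper names, while `hf_hub_download` and `snapshot_download` are functions from the `huggingface_hub` library. Each helper imports the library only when it is called, so nothing is fetched until you call it.

```python
REPO_ID = "TheBloke/finance-LLM-GGUF"


def gguf_filename(quant: str) -> str:
    """Filename for a quant method, following this repo's naming scheme."""
    return f"finance-llm.{quant}.gguf"


def download(quant: str = "Q4_K_M", local_dir: str = ".") -> str:
    """Download a single GGUF file and return its local path."""
    from huggingface_hub import hf_hub_download  # imported lazily

    return hf_hub_download(
        repo_id=REPO_ID,
        filename=gguf_filename(quant),
        local_dir=local_dir,
    )


def download_pattern(pattern: str = "*Q4_K*gguf", local_dir: str = ".") -> str:
    """Download every file matching a glob pattern and return the target folder."""
    from huggingface_hub import snapshot_download  # imported lazily

    return snapshot_download(
        repo_id=REPO_ID,
        allow_patterns=pattern,
        local_dir=local_dir,
    )
```

For example, `download("Q5_K_M")` fetches one file into the current directory, and `download_pattern("*Q4_K*gguf")` matches the `--include` example above. To use `hf_transfer` from Python, set `HF_HUB_ENABLE_HF_TRANSFER=1` in the environment before Python starts, because `huggingface_hub` reads its settings when it is imported.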

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m finance-llm.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "[INST] <<SYS>>\n{system_message}\n<</SYS>>\n{prompt} [/INST]"

```

Change `-ngl 35` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended-sequence models (e.g. 8K, 16K, 32K), llama.cpp reads the necessary RoPE scaling parameters from the GGUF file and sets them automatically. Longer sequence lengths require considerably more resources, so you may need to reduce this value.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`.

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md).
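If you launch llama.cpp from a script, you can assemble the flags described above programmatically. This is a minimal sketch that assumes the `./main` binary and the flag names from the example command. `llama_cpp_args` is a hypothetical helper. Newer llama.cpp builds have renamed some binaries and options, so check the flags against your build.

```python
import shlex


def llama_cpp_args(model, prompt=None, n_gpu_layers=35, ctx=2048, temp=0.7,
                   repeat_penalty=1.1, n_predict=-1, binary="./main"):
    """Build the argument list for the llama.cpp example command shown above.

    n_gpu_layers=0 drops -ngl (no GPU acceleration); prompt=None switches to
    the chat-style interactive mode (-i -ins) instead of -p.
    """
    args = [binary]
    if n_gpu_layers > 0:
        args += ["-ngl", str(n_gpu_layers)]
    args += ["-m", model, "--color", "-c", str(ctx), "--temp", str(temp),
             "--repeat_penalty", str(repeat_penalty), "-n", str(n_predict)]
    if prompt is None:
        args += ["-i", "-ins"]
    else:
        args += ["-p", prompt]
    return args


if __name__ == "__main__":
    prompt = ("[INST] <<SYS>>\nYou are a helpful financial assistant.\n<</SYS>>\n"
              "What is a bond yield? [/INST]")
    cmd = llama_cpp_args("finance-llm.Q4_K_M.gguf", prompt=prompt)
    print(shlex.join(cmd))  # shell-quoted preview (Python 3.8+)
```

Passing the list to `subprocess.run(cmd)` launches the binary without a shell. This avoids quoting problems with the `[INST]` prompt, and the newlines in the Python string reach llama.cpp as real newlines.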

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell
# Base llama-cpp-python with no GPU acceleration
pip install llama-cpp-python

# With NVidia CUDA acceleration
CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration
CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration
CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)
CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only
CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# In Windows, to set the CMAKE_ARGS variable in PowerShell, follow this format; e.g. for NVidia CUDA:
$env:CMAKE_ARGS = "-DLLAMA_CUBLAS=on"
pip install llama-cpp-python
```

#### Simple llama-cpp-python example code

```python
from llama_cpp import Llama

# Set n_gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.
llm = Llama(
  model_path="./finance-llm.Q4_K_M.gguf",  # Download the model file first
  n_ctx=2048,  # The max sequence length to use - note that longer sequence lengths require much more resources
  n_threads=8,  # The number of CPU threads to use, tailor to your system and the resulting performance
  n_gpu_layers=35  # The number of layers to offload to GPU, if you have GPU acceleration available
)

# Simple inference example: replace {system_message} and {prompt} with your own text first
output = llm(
  "[INST] <<SYS>>\n{system_message}\n<</SYS>>\n{prompt} [/INST]",  # Prompt
  max_tokens=512,  # Generate up to 512 tokens
  stop=["</s>"],  # Example stop token - not necessarily correct for this specific model! Please check before using.
  echo=True  # Whether to echo the prompt
)
print(output["choices"][0]["text"])

# Chat Completion API
llm = Llama(model_path="./finance-llm.Q4_K_M.gguf", chat_format="llama-2")  # Set chat_format according to the model you are using
response = llm.create_chat_completion(
    messages=[
        {"role": "system", "content": "You are a story writing assistant."},
        {
            "role": "user",
            "content": "Write a story about llamas."
        }
    ]
)
print(response["choices"][0]["message"]["content"])
```
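For repeated questions, a small wrapper can return only the reply text from the chat API. This is a minimal sketch, and `ask_finance_question` is a hypothetical helper. It relies on `create_chat_completion` returning an OpenAI-style dictionary with the reply at `["choices"][0]["message"]["content"]`. Because the helper only calls a method on the object it receives, you can test it without loading the model.

```python
from typing import Any


def ask_finance_question(llm: Any, question: str,
                         system: str = "You are a helpful financial analysis assistant.",
                         max_tokens: int = 256) -> str:
    """Send one question through the chat completion API and return the reply text.

    `llm` is expected to be a llama_cpp.Llama instance created with chat_format="llama-2".
    """
    response = llm.create_chat_completion(
        messages=[
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
        max_tokens=max_tokens,
        temperature=0.7,
    )
    # The response follows the OpenAI chat-completion layout
    return response["choices"][0]["message"]["content"]
```

For example, create `llm = Llama(model_path="./finance-llm.Q4_K_M.gguf", chat_format="llama-2")`, then call `print(ask_finance_question(llm, "What is the difference between a bond's coupon rate and its yield?"))`.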

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine-tuning/training.

If you're able and willing to contribute, it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Michael Levine, 阿明, Trailburnt, Nikolai Manek, John Detwiler, Randy H, Will Dee, Sebastain Graf, NimbleBox.ai, Eugene Pentland, Emad Mostaque, Ai Maven, Jim Angel, Jeff Scroggin, Michael Davis, Manuel Alberto Morcote, Stephen Murray, Robert, Justin Joy, Luke @flexchar, Brandon Frisco, Elijah Stavena, S_X, Dan Guido, Undi ., Komninos Chatzipapas, Shadi, theTransient, Lone Striker, Raven Klaugh, jjj, Cap'n Zoog, Michel-Marie MAUDET (LINAGORA), Matthew Berman, David, Fen Risland, Omer Bin Jawed, Luke Pendergrass, Kalila, OG, Erik Bjäreholt, Rooh Singh, Joseph William Delisle, Dan Lewis, TL, John Villwock, AzureBlack, Brad, Pedro Madruga, Caitlyn Gatomon, K, jinyuan sun, Mano Prime, Alex, Jeffrey Morgan, Alicia Loh, Illia Dulskyi, Chadd, transmissions 11, fincy, Rainer Wilmers, ReadyPlayerEmma, knownsqashed, Mandus, biorpg, Deo Leter, Brandon Phillips, SuperWojo, Sean Connelly, Iucharbius, Jack West, Harry Royden McLaughlin, Nicholas, terasurfer, Vitor Caleffi, Duane Dunston, Johann-Peter Hartmann, David Ziegler, Olakabola, Ken Nordquist, Trenton Dambrowitz, Tom X Nguyen, Vadim, Ajan Kanaga, Leonard Tan, Clay Pascal, Alexandros Triantafyllidis, JM33133, Xule, vamX, ya boyyy, subjectnull, Talal Aujan, Alps Aficionado, wassieverse, Ari Malik, James Bentley, Woland, Spencer Kim, Michael Dempsey, Fred von Graf, Elle, zynix, William Richards, Stanislav Ovsiannikov, Edmond Seymore, Jonathan Leane, Martin Kemka, usrbinkat, Enrico Ros

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: AdaptLLM's Finance LLM

# Adapt (Large) Language Models to Domains

This repo contains the domain-specific base model developed from **LLaMA-1-7B**, using the method in our paper [Adapting Large Language Models via Reading Comprehension](https://huggingface.co/papers/2309.09530).

We explore **continued pre-training on domain-specific corpora** for large language models. While this approach enriches LLMs with domain knowledge, it significantly hurts their prompting ability for question answering. Inspired by human learning via reading comprehension, we propose a simple method to **transform large-scale pre-training corpora into reading comprehension texts**, consistently improving prompting performance across tasks in biomedicine, finance, and law domains. **Our 7B model competes with much larger domain-specific models like BloombergGPT-50B**.

### 🤗 We are currently working hard on developing models across different domains, scales and architectures! Please stay tuned! 🤗

**************************** **Updates** ****************************

* 12/19: Released our [13B base models](https://huggingface.co/AdaptLLM/finance-LLM-13B) developed from LLaMA-1-13B.

* 12/8: Released our [chat models](https://huggingface.co/AdaptLLM/finance-chat) developed from LLaMA-2-Chat-7B.

* 9/18: Released our [paper](https://huggingface.co/papers/2309.09530), [code](https://github.com/microsoft/LMOps), [data](https://huggingface.co/datasets/AdaptLLM/finance-tasks), and [base models](https://huggingface.co/AdaptLLM/finance-LLM) developed from LLaMA-1-7B.

## Domain-Specific LLaMA-1

### LLaMA-1-7B

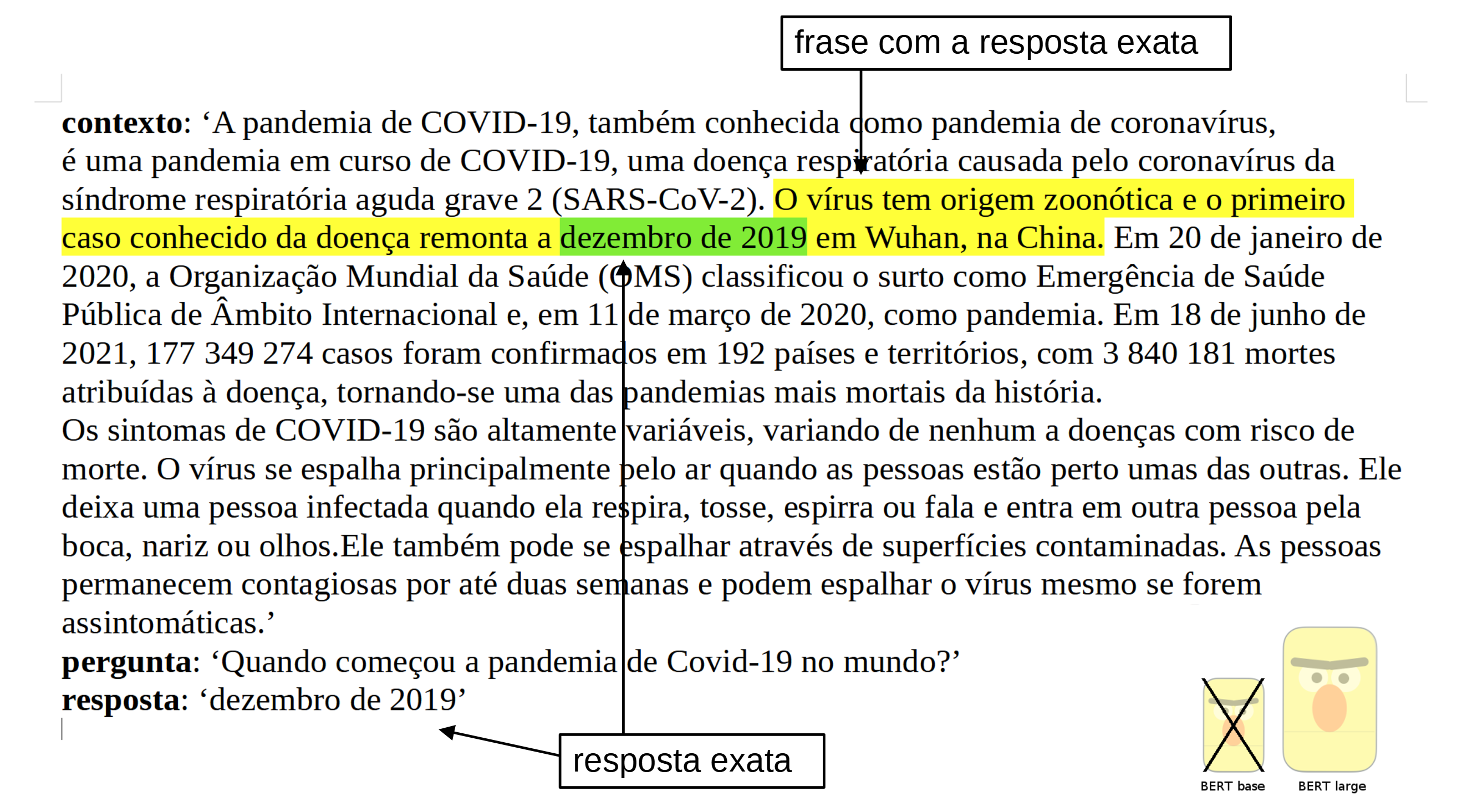

In our paper, we develop three domain-specific models from LLaMA-1-7B, which are also available on Hugging Face: [Biomedicine-LLM](https://huggingface.co/AdaptLLM/medicine-LLM), [Finance-LLM](https://huggingface.co/AdaptLLM/finance-LLM) and [Law-LLM](https://huggingface.co/AdaptLLM/law-LLM). The performance of AdaptLLM compared with other domain-specific LLMs is shown below:

<p align='center'>

<img src="https://hf.fast360.xyz/production/uploads/650801ced5578ef7e20b33d4/6efPwitFgy-pLTzvccdcP.png" width="700">

</p>

### LLaMA-1-13B

Moreover, we scale up our base model to LLaMA-1-13B to see if **our method is similarly effective for larger-scale models**, and the results are consistently positive too: [Biomedicine-LLM-13B](https://huggingface.co/AdaptLLM/medicine-LLM-13B), [Finance-LLM-13B](https://huggingface.co/AdaptLLM/finance-LLM-13B) and [Law-LLM-13B](https://huggingface.co/AdaptLLM/law-LLM-13B).

## Domain-Specific LLaMA-2-Chat

Our method is also effective for aligned models! LLaMA-2-Chat requires a [specific data format](https://huggingface.co/blog/llama2#how-to-prompt-llama-2), and our **reading comprehension can perfectly fit the data format** by transforming each reading comprehension text into a multi-turn conversation. We have also open-sourced chat models in different domains: [Biomedicine-Chat](https://huggingface.co/AdaptLLM/medicine-chat), [Finance-Chat](https://huggingface.co/AdaptLLM/finance-chat) and [Law-Chat](https://huggingface.co/AdaptLLM/law-chat).

For example, to chat with the finance model:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("AdaptLLM/finance-chat")
tokenizer = AutoTokenizer.from_pretrained("AdaptLLM/finance-chat", use_fast=False)

# Put your input here:
user_input = '''Use this fact to answer the question: Title of each class Trading Symbol(s) Name of each exchange on which registered
Common Stock, Par Value $.01 Per Share MMM New York Stock Exchange
MMM Chicago Stock Exchange, Inc.
1.500% Notes due 2026 MMM26 New York Stock Exchange
1.750% Notes due 2030 MMM30 New York Stock Exchange
1.500% Notes due 2031 MMM31 New York Stock Exchange
Which debt securities are registered to trade on a national securities exchange under 3M's name as of Q2 of 2023?'''

# We use the prompt template of LLaMA-2-Chat demo
prompt = f"<s>[INST] <<SYS>>\nYou are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.\n\nIf a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.\n<</SYS>>\n\n{user_input} [/INST]"

inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).input_ids.to(model.device)
outputs = model.generate(input_ids=inputs, max_length=4096)[0]

answer_start = int(inputs.shape[-1])
pred = tokenizer.decode(outputs[answer_start:], skip_special_tokens=True)

print(f'### User Input:\n{user_input}\n\n### Assistant Output:\n{pred}')
```

## Domain-Specific Tasks

To easily reproduce our results, we have uploaded the filled-in zero/few-shot input instructions and output completions of each domain-specific task: [biomedicine-tasks](https://huggingface.co/datasets/AdaptLLM/medicine-tasks), [finance-tasks](https://huggingface.co/datasets/AdaptLLM/finance-tasks), and [law-tasks](https://huggingface.co/datasets/AdaptLLM/law-tasks).

**Note:** those filled-in instructions are specifically tailored for models before alignment and do NOT fit the specific data format required for chat models.

## Citation

If you find our work helpful, please cite us:

```bibtex

@article{adaptllm,

title = {Adapting Large Language Models via Reading Comprehension},

author = {Daixuan Cheng and Shaohan Huang and Furu Wei},

journal = {CoRR},

volume = {abs/2309.09530},

year = {2023}

}

```

<!-- original-model-card end -->


<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Finance LLM - GGUF

- Model creator: [AdaptLLM](https://huggingface.co/AdaptLLM)

- Original model: [Finance LLM](https://huggingface.co/AdaptLLM/finance-LLM)

<!-- description start -->

## Description

This repo contains GGUF format model files for [AdaptLLM's Finance LLM](https://huggingface.co/AdaptLLM/finance-LLM).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [GPT4All](https://gpt4all.io/index.html), a free and open source local running GUI, supporting Windows, Linux and macOS with full GPU accel.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server. Note, as of time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/finance-LLM-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/finance-LLM-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/finance-LLM-GGUF)

* [AdaptLLM's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/AdaptLLM/finance-LLM)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Llama-2-Chat

```

[INST] <<SYS>>

{system_message}

<</SYS>>

{prompt} [/INST]

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| [finance-llm.Q2_K.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q2_K.gguf) | Q2_K | 2 | 2.83 GB| 5.33 GB | smallest, significant quality loss - not recommended for most purposes |
| [finance-llm.Q3_K_S.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q3_K_S.gguf) | Q3_K_S | 3 | 2.95 GB| 5.45 GB | very small, high quality loss |
| [finance-llm.Q3_K_M.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q3_K_M.gguf) | Q3_K_M | 3 | 3.30 GB| 5.80 GB | very small, high quality loss |
| [finance-llm.Q3_K_L.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q3_K_L.gguf) | Q3_K_L | 3 | 3.60 GB| 6.10 GB | small, substantial quality loss |
| [finance-llm.Q4_0.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q4_0.gguf) | Q4_0 | 4 | 3.83 GB| 6.33 GB | legacy; small, very high quality loss - prefer using Q3_K_M |
| [finance-llm.Q4_K_S.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q4_K_S.gguf) | Q4_K_S | 4 | 3.86 GB| 6.36 GB | small, greater quality loss |
| [finance-llm.Q4_K_M.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q4_K_M.gguf) | Q4_K_M | 4 | 4.08 GB| 6.58 GB | medium, balanced quality - recommended |
| [finance-llm.Q5_0.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q5_0.gguf) | Q5_0 | 5 | 4.65 GB| 7.15 GB | legacy; medium, balanced quality - prefer using Q4_K_M |
| [finance-llm.Q5_K_S.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q5_K_S.gguf) | Q5_K_S | 5 | 4.65 GB| 7.15 GB | large, low quality loss - recommended |
| [finance-llm.Q5_K_M.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q5_K_M.gguf) | Q5_K_M | 5 | 4.78 GB| 7.28 GB | large, very low quality loss - recommended |
| [finance-llm.Q6_K.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q6_K.gguf) | Q6_K | 6 | 5.53 GB| 8.03 GB | very large, extremely low quality loss |
| [finance-llm.Q8_0.gguf](https://huggingface.co/TheBloke/finance-LLM-GGUF/blob/main/finance-llm.Q8_0.gguf) | Q8_0 | 8 | 7.16 GB| 9.66 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
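To pick a file, you can compare the "Max RAM required" column against the memory you have available. This is a minimal sketch using the figures from the table (which assume no GPU offloading). `largest_fitting_quant` is a hypothetical helper. It skips the two legacy formats the table advises against, then returns the largest remaining file that fits.

```python
# "Max RAM required" (GB) per quant method, copied from the Provided files table.
# These figures assume no GPU offloading.
MAX_RAM_GB = {
    "Q2_K": 5.33, "Q3_K_S": 5.45, "Q3_K_M": 5.80, "Q3_K_L": 6.10,
    "Q4_0": 6.33, "Q4_K_S": 6.36, "Q4_K_M": 6.58, "Q5_0": 7.15,
    "Q5_K_S": 7.15, "Q5_K_M": 7.28, "Q6_K": 8.03, "Q8_0": 9.66,
}
LEGACY = {"Q4_0", "Q5_0"}  # the table suggests K-quant alternatives for these


def largest_fitting_quant(available_gb, skip_legacy=True):
    """Hypothetical helper: return the quant with the highest RAM need that
    still fits in `available_gb`, or None if nothing fits."""
    candidates = {
        q: ram
        for q, ram in MAX_RAM_GB.items()
        if ram <= available_gb and not (skip_legacy and q in LEGACY)
    }
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


print(largest_fitting_quant(8.0))  # Q5_K_M
print(largest_fitting_quant(4.0))  # None
```

Offloading layers to a GPU moves part of this requirement into VRAM, so with `-ngl` / `n_gpu_layers` a larger file may fit.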

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/finance-LLM-GGUF and below it, a specific filename to download, such as: finance-llm.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/finance-LLM-GGUF finance-llm.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/finance-LLM-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```
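`--include` takes a glob pattern. The snippet below shows which of the files in the Provided files table `*Q4_K*gguf` would select. It assumes the pattern is matched against file names with the same shell-style rules as Python's `fnmatch` module, so treat it as a rough illustration.

```python
from fnmatch import fnmatch

# File names from the Provided files table.
files = [
    "finance-llm.Q2_K.gguf", "finance-llm.Q3_K_S.gguf", "finance-llm.Q3_K_M.gguf",
    "finance-llm.Q3_K_L.gguf", "finance-llm.Q4_0.gguf", "finance-llm.Q4_K_S.gguf",
    "finance-llm.Q4_K_M.gguf", "finance-llm.Q5_0.gguf", "finance-llm.Q5_K_S.gguf",
    "finance-llm.Q5_K_M.gguf", "finance-llm.Q6_K.gguf", "finance-llm.Q8_0.gguf",
]

selected = [name for name in files if fnmatch(name, "*Q4_K*gguf")]
print(selected)  # ['finance-llm.Q4_K_S.gguf', 'finance-llm.Q4_K_M.gguf']
```

Note that the legacy `Q4_0` file is not selected, because its name does not contain `Q4_K`.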

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/finance-LLM-GGUF finance-llm.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m finance-llm.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "[INST] <<SYS>>\n{system_message}\n<</SYS>>\n{prompt} [/INST]"

```

Change `-ngl 35` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended sequence models (e.g. 8K, 16K, 32K), the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`.

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)
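The `-p` value in the command above is the model's prompt template, with `{system_message}` and `{prompt}` still as placeholders, so substitute your own text before running. This is a small sketch that fills the template and assembles the same command as an argument list (for example, to pass to `subprocess.run`). It assumes the `./main` binary and the model path shown above. `build_command` is a hypothetical helper, and the example system message and question are placeholders.

```python
import shlex

# Prompt template from this model card's metadata.
PROMPT_TEMPLATE = "[INST] <<SYS>>\n{system_message}\n<</SYS>>\n{prompt} [/INST]"


def build_command(system_message, prompt, n_gpu_layers=35, ctx=2048):
    """Return an argv list equivalent to the ./main example above."""
    text = PROMPT_TEMPLATE.format(system_message=system_message, prompt=prompt)
    argv = ["./main", "-m", "finance-llm.Q4_K_M.gguf", "--color",
            "-c", str(ctx), "--temp", "0.7", "--repeat_penalty", "1.1",
            "-n", "-1", "-p", text]
    if n_gpu_layers > 0:  # omit -ngl entirely when there is no GPU acceleration
        argv[1:1] = ["-ngl", str(n_gpu_layers)]
    return argv


argv = build_command("You are a financial analyst.", "What is a bond coupon?")
print(shlex.join(argv))  # shell-quoted, ready to paste into a terminal
```

Building an argument list avoids shell quoting problems: the newlines in the template reach the program as real newlines rather than as literal `\n` sequences.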

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base llama-cpp-python with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# On Windows, set the CMAKE_ARGS variable in PowerShell like this; e.g. for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_CUBLAS=on"

pip install llama-cpp-python

```

#### Simple llama-cpp-python example code

```python
from llama_cpp import Llama

# Set n_gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.
llm = Llama(
    model_path="./finance-llm.Q4_K_M.gguf",  # Download the model file first
    n_ctx=2048,  # The max sequence length to use - note that longer sequence lengths require much more resources
    n_threads=8,  # The number of CPU threads to use, tailor to your system and the resulting performance
    n_gpu_layers=35,  # The number of layers to offload to GPU, if you have GPU acceleration available
)

# Simple inference example
# Replace {system_message} and {prompt} with your own text before running.
output = llm(
    "[INST] <<SYS>>\n{system_message}\n<</SYS>>\n{prompt} [/INST]",  # Prompt
    max_tokens=512,  # Generate up to 512 tokens
    stop=["</s>"],  # Example stop token - not necessarily correct for this specific model! Please check before using.
    echo=True,  # Whether to echo the prompt
)

# Chat Completion API
llm = Llama(model_path="./finance-llm.Q4_K_M.gguf", chat_format="llama-2")  # Set chat_format according to the model you are using
llm.create_chat_completion(
    messages=[
        {"role": "system", "content": "You are a story writing assistant."},
        {"role": "user", "content": "Write a story about llamas."},
    ]
)
```
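Both calls return OpenAI-style dictionaries rather than plain strings. This is a minimal sketch of pulling out the generated text. It assumes the usual llama-cpp-python response shapes: `choices[0]["text"]` for `llm(...)` and `choices[0]["message"]["content"]` for `create_chat_completion`. The helper name and the sample dictionaries are illustrative, not real model output.

```python
def response_text(response):
    """Return the generated text from a completion or chat-completion dict."""
    choice = response["choices"][0]
    if "message" in choice:        # create_chat_completion(...)
        return choice["message"]["content"]
    return choice["text"]          # llm(...) / create_completion(...)


# Illustrative dictionaries with the same shape as the library's responses.
completion = {"choices": [{"text": "A bond coupon is ...", "index": 0}]}
chat = {"choices": [{"message": {"role": "assistant", "content": "Once upon a time ..."}}]}

print(response_text(completion))
print(response_text(chat))
```

With `echo=True`, as in the example above, the returned completion text begins with the prompt itself.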

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Michael Levine, 阿明, Trailburnt, Nikolai Manek, John Detwiler, Randy H, Will Dee, Sebastain Graf, NimbleBox.ai, Eugene Pentland, Emad Mostaque, Ai Maven, Jim Angel, Jeff Scroggin, Michael Davis, Manuel Alberto Morcote, Stephen Murray, Robert, Justin Joy, Luke @flexchar, Brandon Frisco, Elijah Stavena, S_X, Dan Guido, Undi ., Komninos Chatzipapas, Shadi, theTransient, Lone Striker, Raven Klaugh, jjj, Cap'n Zoog, Michel-Marie MAUDET (LINAGORA), Matthew Berman, David, Fen Risland, Omer Bin Jawed, Luke Pendergrass, Kalila, OG, Erik Bjäreholt, Rooh Singh, Joseph William Delisle, Dan Lewis, TL, John Villwock, AzureBlack, Brad, Pedro Madruga, Caitlyn Gatomon, K, jinyuan sun, Mano Prime, Alex, Jeffrey Morgan, Alicia Loh, Illia Dulskyi, Chadd, transmissions 11, fincy, Rainer Wilmers, ReadyPlayerEmma, knownsqashed, Mandus, biorpg, Deo Leter, Brandon Phillips, SuperWojo, Sean Connelly, Iucharbius, Jack West, Harry Royden McLaughlin, Nicholas, terasurfer, Vitor Caleffi, Duane Dunston, Johann-Peter Hartmann, David Ziegler, Olakabola, Ken Nordquist, Trenton Dambrowitz, Tom X Nguyen, Vadim, Ajan Kanaga, Leonard Tan, Clay Pascal, Alexandros Triantafyllidis, JM33133, Xule, vamX, ya boyyy, subjectnull, Talal Aujan, Alps Aficionado, wassieverse, Ari Malik, James Bentley, Woland, Spencer Kim, Michael Dempsey, Fred von Graf, Elle, zynix, William Richards, Stanislav Ovsiannikov, Edmond Seymore, Jonathan Leane, Martin Kemka, usrbinkat, Enrico Ros

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: AdaptLLM's Finance LLM

# Adapt (Large) Language Models to Domains

This repo contains the domain-specific base model developed from **LLaMA-1-7B**, using the method in our paper [Adapting Large Language Models via Reading Comprehension](https://huggingface.co/papers/2309.09530).

We explore **continued pre-training on domain-specific corpora** for large language models. While this approach enriches LLMs with domain knowledge, it significantly hurts their prompting ability for question answering. Inspired by human learning via reading comprehension, we propose a simple method to **transform large-scale pre-training corpora into reading comprehension texts**, consistently improving prompting performance across tasks in biomedicine, finance, and law domains. **Our 7B model competes with much larger domain-specific models like BloombergGPT-50B**.

### 🤗 We are currently working hard on developing models across different domains, scales and architectures! Please stay tuned! 🤗

**************************** **Updates** ****************************

* 12/19: Released our [13B base models](https://huggingface.co/AdaptLLM/finance-LLM-13B) developed from LLaMA-1-13B.

* 12/8: Released our [chat models](https://huggingface.co/AdaptLLM/finance-chat) developed from LLaMA-2-Chat-7B.

* 9/18: Released our [paper](https://huggingface.co/papers/2309.09530), [code](https://github.com/microsoft/LMOps), [data](https://huggingface.co/datasets/AdaptLLM/finance-tasks), and [base models](https://huggingface.co/AdaptLLM/finance-LLM) developed from LLaMA-1-7B.

## Domain-Specific LLaMA-1

### LLaMA-1-7B

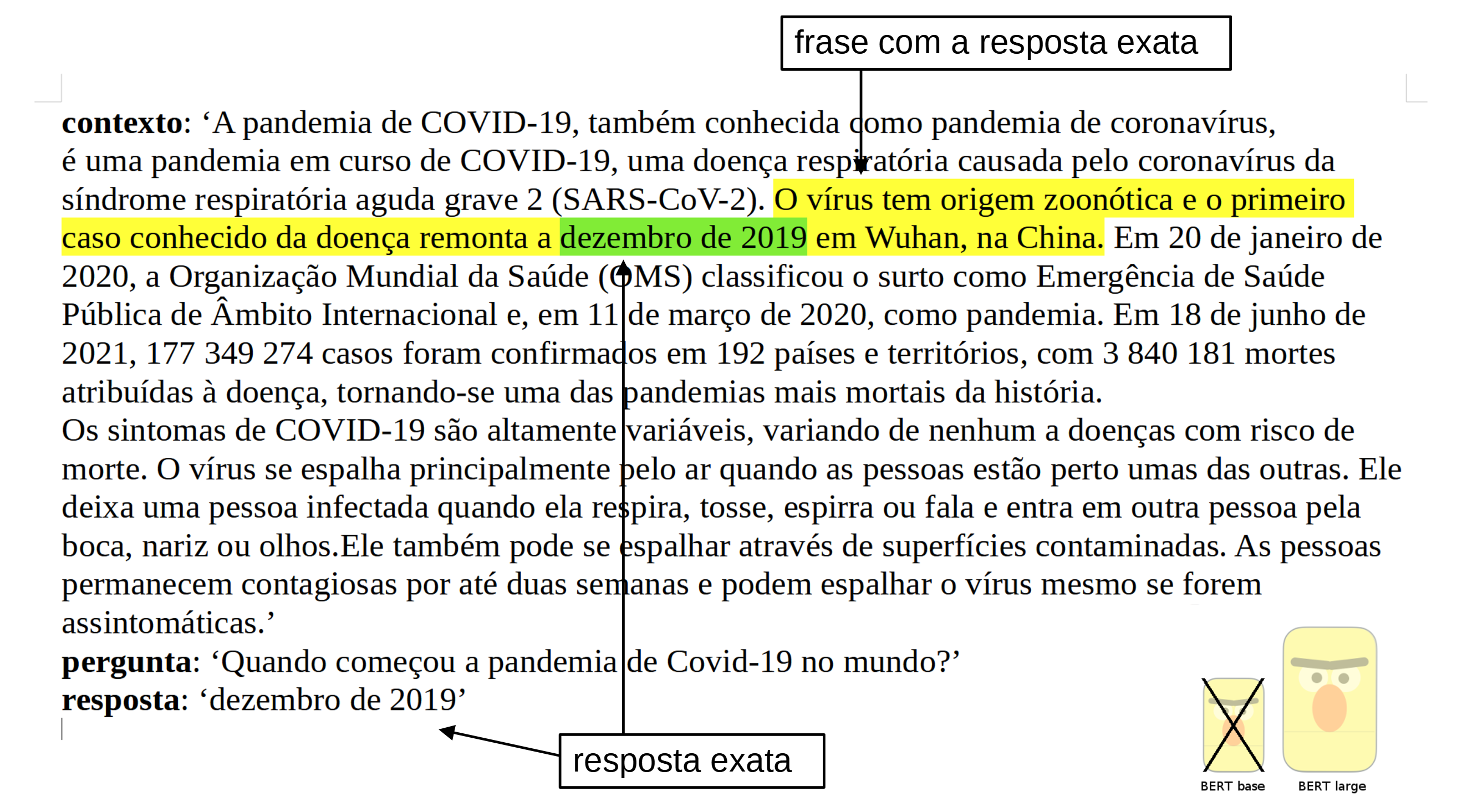

In our paper, we develop three domain-specific models from LLaMA-1-7B, which are also available on Hugging Face: [Biomedicine-LLM](https://huggingface.co/AdaptLLM/medicine-LLM), [Finance-LLM](https://huggingface.co/AdaptLLM/finance-LLM) and [Law-LLM](https://huggingface.co/AdaptLLM/law-LLM). The performance of AdaptLLM compared with other domain-specific LLMs is shown below:

<p align='center'>

<img src="https://hf.fast360.xyz/production/uploads/650801ced5578ef7e20b33d4/6efPwitFgy-pLTzvccdcP.png" width="700">

</p>

### LLaMA-1-13B

Moreover, we scale up our base model to LLaMA-1-13B to see if **our method is similarly effective for larger-scale models**, and the results are consistently positive too: [Biomedicine-LLM-13B](https://huggingface.co/AdaptLLM/medicine-LLM-13B), [Finance-LLM-13B](https://huggingface.co/AdaptLLM/finance-LLM-13B) and [Law-LLM-13B](https://huggingface.co/AdaptLLM/law-LLM-13B).

## Domain-Specific LLaMA-2-Chat

Our method is also effective for aligned models! LLaMA-2-Chat requires a [specific data format](https://huggingface.co/blog/llama2#how-to-prompt-llama-2), and our **reading comprehension texts fit this format naturally** once each one is transformed into a multi-turn conversation. We have also open-sourced chat models in different domains: [Biomedicine-Chat](https://huggingface.co/AdaptLLM/medicine-chat), [Finance-Chat](https://huggingface.co/AdaptLLM/finance-chat) and [Law-Chat](https://huggingface.co/AdaptLLM/law-chat).

For example, to chat with the finance model:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("AdaptLLM/finance-chat")
tokenizer = AutoTokenizer.from_pretrained("AdaptLLM/finance-chat", use_fast=False)

# Put your input here:
user_input = '''Use this fact to answer the question: Title of each class Trading Symbol(s) Name of each exchange on which registered
Common Stock, Par Value $.01 Per Share MMM New York Stock Exchange
MMM Chicago Stock Exchange, Inc.
1.500% Notes due 2026 MMM26 New York Stock Exchange
1.750% Notes due 2030 MMM30 New York Stock Exchange
1.500% Notes due 2031 MMM31 New York Stock Exchange
Which debt securities are registered to trade on a national securities exchange under 3M's name as of Q2 of 2023?'''

# We use the prompt template of LLaMA-2-Chat demo
prompt = f"<s>[INST] <<SYS>>\nYou are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.\n\nIf a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.\n<</SYS>>\n\n{user_input} [/INST]"

# "<s>" is already in the prompt string, so don't let the tokenizer add it again.
inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).input_ids.to(model.device)
outputs = model.generate(input_ids=inputs, max_length=4096)[0]

# Decode only the newly generated tokens (skip the prompt).
answer_start = int(inputs.shape[-1])
pred = tokenizer.decode(outputs[answer_start:], skip_special_tokens=True)

print(f'### User Input:\n{user_input}\n\n### Assistant Output:\n{pred}')
```
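The example above builds a single-turn prompt. For multi-turn chat, a common reading of the LLaMA-2-Chat format (see the Hugging Face blog post linked above) works as follows: each completed exchange is wrapped in its own `<s>[INST] ... [/INST] answer </s>` segment, and the system prompt appears only in the first turn. The sketch below assumes that format. `build_llama2_prompt` is a hypothetical helper. Because `<s>` is written into the string, you would still tokenize the result with `add_special_tokens=False`, as above.

```python
def build_llama2_prompt(system_prompt, turns, next_user_message):
    """turns: list of (user_message, assistant_answer) pairs already completed."""
    user_messages = [user for user, _ in turns] + [next_user_message]
    # The system prompt is folded into the first user message only.
    user_messages[0] = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_messages[0]}"
    prompt = ""
    # zip stops after the completed turns; the new message is appended last.
    for (_, answer), user in zip(turns, user_messages):
        prompt += f"<s>[INST] {user} [/INST] {answer} </s>"
    prompt += f"<s>[INST] {user_messages[-1]} [/INST]"
    return prompt


print(build_llama2_prompt("You are a helpful assistant.",
                          [("What does MMM26 refer to?", "The 1.500% Notes due 2026.")],
                          "And MMM30?"))
```

With no earlier turns, the helper produces the same layout as the single-turn `prompt` in the example above.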

## Domain-Specific Tasks

To easily reproduce our results, we have uploaded the filled-in zero/few-shot input instructions and output completions of each domain-specific task: [biomedicine-tasks](https://huggingface.co/datasets/AdaptLLM/medicine-tasks), [finance-tasks](https://huggingface.co/datasets/AdaptLLM/finance-tasks), and [law-tasks](https://huggingface.co/datasets/AdaptLLM/law-tasks).

**Note:** those filled-in instructions are specifically tailored for models before alignment and do NOT fit the specific data format required for chat models.

## Citation

If you find our work helpful, please cite us:

```bibtex

@article{adaptllm,

title = {Adapting Large Language Models via Reading Comprehension},

author = {Daixuan Cheng and Shaohan Huang and Furu Wei},

journal = {CoRR},

volume = {abs/2309.09530},

year = {2023}

}

```

<!-- original-model-card end -->

|

{"base_model": "AdaptLLM/finance-LLM", "datasets": ["Open-Orca/OpenOrca", "GAIR/lima", "WizardLM/WizardLM_evol_instruct_V2_196k"], "language": ["en"], "license": "other", "metrics": ["accuracy"], "model_name": "Finance LLM", "pipeline_tag": "text-generation", "tags": ["finance"], "inference": false, "model_creator": "AdaptLLM", "model_type": "llama", "prompt_template": "[INST] <<SYS>>\n{system_message}\n<</SYS>>\n{prompt} [/INST]\n", "quantized_by": "TheBloke"}

|

task

|

[

"QUESTION_ANSWERING"

] | 46,834 |

anismahmahi/G2_replace_Whata_repetition_with_noPropaganda_SetFit

|

anismahmahi

|

text-classification

|

[

"setfit",

"safetensors",

"mpnet",

"sentence-transformers",

"text-classification",

"generated_from_setfit_trainer",

"arxiv:2209.11055",

"base_model:sentence-transformers/paraphrase-mpnet-base-v2",

"base_model:finetune:sentence-transformers/paraphrase-mpnet-base-v2",

"model-index",

"region:us"

] | 2024-01-07T13:33:28Z |

2024-01-07T13:33:55+00:00

| 3 | 0 |

---

base_model: sentence-transformers/paraphrase-mpnet-base-v2

library_name: setfit

metrics:

- accuracy

pipeline_tag: text-classification

tags:

- setfit

- sentence-transformers

- text-classification

- generated_from_setfit_trainer

widget:

- text: Fox News, The Washington Post, NBC News, The Associated Press and the Los

Angeles Times are among the entities that have said they will file amicus briefs

on behalf of CNN.

- text: 'Tommy Robinson is in prison today because he violated a court order demanding

that he not film videos outside the trials of Muslim rape gangs.

'

- text: As I wrote during the presidential campaign, Trump has no idea of Washington

and no idea who to appoint who would support him rather than work against him.

- text: IN MAY 2013, the Washington Post’s Greg Miller reported that the head of the

CIA’s clandestine service was being shifted out of that position as a result of

“a management shake-up” by then-Director John Brennan.

- text: Columbus police are investigating the shootings.

inference: false

model-index:

- name: SetFit with sentence-transformers/paraphrase-mpnet-base-v2

results:

- task:

type: text-classification

name: Text Classification

dataset:

name: Unknown

type: unknown

split: test

metrics:

- type: accuracy

value: 0.602089552238806

name: Accuracy

---

# SetFit with sentence-transformers/paraphrase-mpnet-base-v2

This is a [SetFit](https://github.com/huggingface/setfit) model that can be used for Text Classification. This SetFit model uses [sentence-transformers/paraphrase-mpnet-base-v2](https://huggingface.co/sentence-transformers/paraphrase-mpnet-base-v2) as the Sentence Transformer embedding model. A OneVsRestClassifier instance is used for classification.

The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.
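Step 1 needs sentence pairs. Pairs with the same label act as positives (target similarity 1.0), and pairs with different labels act as negatives (0.0). This is the kind of data a `CosineSimilarityLoss`, listed in the hyperparameters, trains on. The snippet below is a minimal, simplified sketch of generating such pairs from a few labeled sentences. It is not SetFit's actual sampler, which the hyperparameters show used an oversampling strategy. The binary labels and all sentences except the first (taken from the widget examples) are hypothetical.

```python
import random


def make_pairs(texts, labels, num_iterations=10, seed=42):
    """Build (text_a, text_b, target) pairs: target 1.0 for a same-label
    partner, 0.0 for a different-label partner."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_iterations):
        for text, label in zip(texts, labels):
            positives = [t for t, l in zip(texts, labels) if l == label and t != text]
            negatives = [t for t, l in zip(texts, labels) if l != label]
            if positives:
                pairs.append((text, rng.choice(positives), 1.0))
            if negatives:
                pairs.append((text, rng.choice(negatives), 0.0))
    return pairs


texts = ["Columbus police are investigating the shootings.",
         "The city council met on Tuesday.",
         "Everyone already agrees, so you should too!",
         "Only a fool would question this plan."]
labels = [0, 0, 1, 1]  # hypothetical: 0 = no propaganda, 1 = propaganda
pairs = make_pairs(texts, labels, num_iterations=1)
print(len(pairs))  # 8: one positive and one negative pair per sentence
```

The sentence-transformer body is then fine-tuned so that cosine similarity approaches each pair's target. The classification head (step 2) is trained on embeddings from that fine-tuned body.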

## Model Details

### Model Description

- **Model Type:** SetFit

- **Sentence Transformer body:** [sentence-transformers/paraphrase-mpnet-base-v2](https://huggingface.co/sentence-transformers/paraphrase-mpnet-base-v2)

- **Classification head:** a OneVsRestClassifier instance

- **Maximum Sequence Length:** 512 tokens

<!-- - **Number of Classes:** Unknown -->

<!-- - **Training Dataset:** [Unknown](https://huggingface.co/datasets/unknown) -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->

### Model Sources

- **Repository:** [SetFit on GitHub](https://github.com/huggingface/setfit)

- **Paper:** [Efficient Few-Shot Learning Without Prompts](https://arxiv.org/abs/2209.11055)

- **Blogpost:** [SetFit: Efficient Few-Shot Learning Without Prompts](https://huggingface.co/blog/setfit)

## Evaluation

### Metrics

| Label | Accuracy |
|:--------|:---------|
| **all** | 0.6021 |

## Uses

### Direct Use for Inference

First install the SetFit library:

```bash

pip install setfit

```

Then you can load this model and run inference.

```python

from setfit import SetFitModel

# Download from the 🤗 Hub

model = SetFitModel.from_pretrained("anismahmahi/G2_replace_Whata_repetition_with_noPropaganda_SetFit")

# Run inference

preds = model("Columbus police are investigating the shootings.")

```

<!--

### Downstream Use

*List how someone could finetune this model on their own dataset.*

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Set Metrics

| Training set | Min | Median | Max |
|:-------------|:----|:--------|:----|
| Word count | 1 | 23.1093 | 129 |

### Training Hyperparameters

- batch_size: (16, 16)

- num_epochs: (2, 2)

- max_steps: -1

- sampling_strategy: oversampling

- num_iterations: 10

- body_learning_rate: (2e-05, 1e-05)

- head_learning_rate: 0.01

- loss: CosineSimilarityLoss

- distance_metric: cosine_distance

- margin: 0.25

- end_to_end: False

- use_amp: False

- warmup_proportion: 0.1

- seed: 42

- eval_max_steps: -1

- load_best_model_at_end: True

### Training Results

| Epoch | Step | Training Loss | Validation Loss |
|:-------:|:--------:|:-------------:|:---------------:|
| 0.0002 | 1 | 0.3592 | - |
| 0.0121 | 50 | 0.2852 | - |
| 0.0243 | 100 | 0.2694 | - |
| 0.0364 | 150 | 0.2182 | - |
| 0.0486 | 200 | 0.2224 | - |
| 0.0607 | 250 | 0.2634 | - |
| 0.0729 | 300 | 0.2431 | - |
| 0.0850 | 350 | 0.2286 | - |
| 0.0971 | 400 | 0.197 | - |
| 0.1093 | 450 | 0.2466 | - |
| 0.1214 | 500 | 0.2374 | - |
| 0.1336 | 550 | 0.2134 | - |
| 0.1457 | 600 | 0.2092 | - |
| 0.1578 | 650 | 0.1987 | - |
| 0.1700 | 700 | 0.2288 | - |
| 0.1821 | 750 | 0.1562 | - |
| 0.1943 | 800 | 0.27 | - |
| 0.2064 | 850 | 0.1314 | - |
| 0.2186 | 900 | 0.2144 | - |
| 0.2307 | 950 | 0.184 | - |
| 0.2428 | 1000 | 0.2069 | - |
| 0.2550 | 1050 | 0.1773 | - |
| 0.2671 | 1100 | 0.0704 | - |
| 0.2793 | 1150 | 0.1139 | - |
| 0.2914 | 1200 | 0.2398 | - |
| 0.3035 | 1250 | 0.0672 | - |
| 0.3157 | 1300 | 0.1321 | - |
| 0.3278 | 1350 | 0.0803 | - |
| 0.3400 | 1400 | 0.0589 | - |
| 0.3521 | 1450 | 0.0428 | - |
| 0.3643 | 1500 | 0.0886 | - |
| 0.3764 | 1550 | 0.0839 | - |
| 0.3885 | 1600 | 0.1843 | - |
| 0.4007 | 1650 | 0.0375 | - |
| 0.4128 | 1700 | 0.114 | - |
| 0.4250 | 1750 | 0.1264 | - |
| 0.4371 | 1800 | 0.0585 | - |
| 0.4492 | 1850 | 0.0586 | - |
| 0.4614 | 1900 | 0.0805 | - |
| 0.4735 | 1950 | 0.0686 | - |
| 0.4857 | 2000 | 0.0684 | - |
| 0.4978 | 2050 | 0.0803 | - |
| 0.5100 | 2100 | 0.076 | - |
| 0.5221 | 2150 | 0.0888 | - |
| 0.5342 | 2200 | 0.1091 | - |
| 0.5464 | 2250 | 0.038 | - |
| 0.5585 | 2300 | 0.0674 | - |
| 0.5707 | 2350 | 0.0562 | - |
| 0.5828 | 2400 | 0.0603 | - |
| 0.5949 | 2450 | 0.0669 | - |
| 0.6071 | 2500 | 0.0829 | - |
| 0.6192 | 2550 | 0.1442 | - |
| 0.6314 | 2600 | 0.0914 | - |
| 0.6435 | 2650 | 0.0357 | - |
| 0.6557 | 2700 | 0.0546 | - |
| 0.6678 | 2750 | 0.0748 | - |
| 0.6799 | 2800 | 0.0149 | - |
| 0.6921 | 2850 | 0.1067 | - |
| 0.7042 | 2900 | 0.0054 | - |
| 0.7164 | 2950 | 0.0878 | - |
| 0.7285 | 3000 | 0.0385 | - |
| 0.7407 | 3050 | 0.036 | - |
| 0.7528 | 3100 | 0.0902 | - |
| 0.7649 | 3150 | 0.0734 | - |
| 0.7771 | 3200 | 0.0369 | - |
| 0.7892 | 3250 | 0.0031 | - |
| 0.8014 | 3300 | 0.0113 | - |
| 0.8135 | 3350 | 0.0862 | - |
| 0.8256 | 3400 | 0.0549 | - |
| 0.8378 | 3450 | 0.0104 | - |
| 0.8499 | 3500 | 0.0072 | - |
| 0.8621 | 3550 | 0.0546 | - |
| 0.8742 | 3600 | 0.0579 | - |
| 0.8864 | 3650 | 0.0789 | - |
| 0.8985 | 3700 | 0.0711 | - |
| 0.9106 | 3750 | 0.0361 | - |
| 0.9228 | 3800 | 0.0292 | - |
| 0.9349 | 3850 | 0.0121 | - |
| 0.9471 | 3900 | 0.0066 | - |
| 0.9592 | 3950 | 0.0091 | - |
| 0.9713 | 4000 | 0.0027 | - |
| 0.9835 | 4050 | 0.0891 | - |
| 0.9956 | 4100 | 0.0186 | - |
| **1.0** | **4118** | **-** | **0.2746** |
| 1.0078 | 4150 | 0.0246 | - |
| 1.0199 | 4200 | 0.0154 | - |
| 1.0321 | 4250 | 0.0056 | - |
| 1.0442 | 4300 | 0.0343 | - |
| 1.0563 | 4350 | 0.0375 | - |
| 1.0685 | 4400 | 0.0106 | - |
| 1.0806 | 4450 | 0.0025 | - |
| 1.0928 | 4500 | 0.0425 | - |
| 1.1049 | 4550 | 0.0019 | - |
| 1.1170 | 4600 | 0.0014 | - |
| 1.1292 | 4650 | 0.0883 | - |
| 1.1413 | 4700 | 0.0176 | - |
| 1.1535 | 4750 | 0.0204 | - |
| 1.1656 | 4800 | 0.0011 | - |
| 1.1778 | 4850 | 0.005 | - |
| 1.1899 | 4900 | 0.0238 | - |
| 1.2020 | 4950 | 0.0362 | - |
| 1.2142 | 5000 | 0.0219 | - |
| 1.2263 | 5050 | 0.0487 | - |
| 1.2385 | 5100 | 0.0609 | - |
| 1.2506 | 5150 | 0.0464 | - |
| 1.2627 | 5200 | 0.0033 | - |
| 1.2749 | 5250 | 0.0087 | - |
| 1.2870 | 5300 | 0.0101 | - |
| 1.2992 | 5350 | 0.0529 | - |
| 1.3113 | 5400 | 0.0243 | - |
| 1.3235 | 5450 | 0.001 | - |
| 1.3356 | 5500 | 0.0102 | - |
| 1.3477 | 5550 | 0.0047 | - |
| 1.3599 | 5600 | 0.0034 | - |
| 1.3720 | 5650 | 0.0118 | - |
| 1.3842 | 5700 | 0.0742 | - |
| 1.3963 | 5750 | 0.0538 | - |
| 1.4085 | 5800 | 0.0162 | - |
| 1.4206 | 5850 | 0.0079 | - |
| 1.4327 | 5900 | 0.0027 | - |
| 1.4449 | 5950 | 0.0035 | - |
| 1.4570 | 6000 | 0.0581 | - |
| 1.4692 | 6050 | 0.0813 | - |
| 1.4813 | 6100 | 0.0339 | - |
| 1.4934 | 6150 | 0.0312 | - |
| 1.5056 | 6200 | 0.0323 | - |
| 1.5177 | 6250 | 0.0521 | - |
| 1.5299 | 6300 | 0.0016 | - |
| 1.5420 | 6350 | 0.0009 | - |
| 1.5542 | 6400 | 0.0967 | - |
| 1.5663 | 6450 | 0.0009 | - |
| 1.5784 | 6500 | 0.031 | - |
| 1.5906 | 6550 | 0.0114 | - |
| 1.6027 | 6600 | 0.0599 | - |
| 1.6149 | 6650 | 0.0416 | - |
| 1.6270 | 6700 | 0.0047 | - |
| 1.6391 | 6750 | 0.0234 | - |
| 1.6513 | 6800 | 0.0609 | - |
| 1.6634 | 6850 | 0.022 | - |
| 1.6756 | 6900 | 0.0042 | - |
| 1.6877 | 6950 | 0.0336 | - |
| 1.6999 | 7000 | 0.0592 | - |
| 1.7120 | 7050 | 0.0536 | - |
| 1.7241 | 7100 | 0.1198 | - |
| 1.7363 | 7150 | 0.1035 | - |
| 1.7484 | 7200 | 0.0549 | - |
| 1.7606 | 7250 | 0.027 | - |
| 1.7727 | 7300 | 0.0251 | - |
| 1.7848 | 7350 | 0.0225 | - |
| 1.7970 | 7400 | 0.0027 | - |
| 1.8091 | 7450 | 0.0309 | - |
| 1.8213 | 7500 | 0.024 | - |
| 1.8334 | 7550 | 0.0355 | - |
| 1.8456 | 7600 | 0.0239 | - |
| 1.8577 | 7650 | 0.0377 | - |
| 1.8698 | 7700 | 0.012 | - |
| 1.8820 | 7750 | 0.0233 | - |
| 1.8941 | 7800 | 0.0184 | - |
| 1.9063 | 7850 | 0.0022 | - |
| 1.9184 | 7900 | 0.0043 | - |
| 1.9305 | 7950 | 0.014 | - |
| 1.9427 | 8000 | 0.0083 | - |
| 1.9548 | 8050 | 0.0084 | - |
| 1.9670 | 8100 | 0.0009 | - |
| 1.9791 | 8150 | 0.002 | - |
| 1.9913 | 8200 | 0.0002 | - |
| 2.0 | 8236 | - | 0.2768 |

* The bold row denotes the saved checkpoint.
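With `load_best_model_at_end: True`, the checkpoint kept is the one with the best evaluation score. This assumes the default selection metric is the validation (embedding) loss, where lower is better. The snippet below is a quick check against the two evaluation rows in the table. It also checks the Epoch column, which is the step count divided by the 4118 steps in one epoch.

```python
# Evaluation rows from the Training Results table: step -> validation loss.
validation_loss = {4118: 0.2746, 8236: 0.2768}
STEPS_PER_EPOCH = 4118

best_step = min(validation_loss, key=validation_loss.get)
print(best_step, best_step / STEPS_PER_EPOCH)  # 4118 1.0

# The Epoch column is step / steps-per-epoch, rounded to 4 decimals.
print(round(50 / STEPS_PER_EPOCH, 4))  # 0.0121
```

The first-epoch checkpoint (0.2746) edges out the final one (0.2768), which is why the epoch-1.0 row is in bold.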

### Framework Versions

- Python: 3.10.12

- SetFit: 1.0.1

- Sentence Transformers: 2.2.2

- Transformers: 4.35.2

- PyTorch: 2.1.0+cu121

- Datasets: 2.16.1

- Tokenizers: 0.15.0

## Citation

### BibTeX

```bibtex

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

-->

| null |

Non_BioNLP

|

# SetFit with sentence-transformers/paraphrase-mpnet-base-v2

This is a [SetFit](https://github.com/huggingface/setfit) model that can be used for Text Classification. This SetFit model uses [sentence-transformers/paraphrase-mpnet-base-v2](https://huggingface.co/sentence-transformers/paraphrase-mpnet-base-v2) as the Sentence Transformer embedding model. A OneVsRestClassifier instance is used for classification.

The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.

## Model Details

### Model Description

- **Model Type:** SetFit

- **Sentence Transformer body:** [sentence-transformers/paraphrase-mpnet-base-v2](https://huggingface.co/sentence-transformers/paraphrase-mpnet-base-v2)

- **Classification head:** a OneVsRestClassifier instance

- **Maximum Sequence Length:** 512 tokens

<!-- - **Number of Classes:** Unknown -->

<!-- - **Training Dataset:** [Unknown](https://huggingface.co/datasets/unknown) -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->

### Model Sources

- **Repository:** [SetFit on GitHub](https://github.com/huggingface/setfit)

- **Paper:** [Efficient Few-Shot Learning Without Prompts](https://arxiv.org/abs/2209.11055)

- **Blogpost:** [SetFit: Efficient Few-Shot Learning Without Prompts](https://huggingface.co/blog/setfit)

## Evaluation

### Metrics

| Label | Accuracy |
|:--------|:---------|
| **all** | 0.6021 |

## Uses

### Direct Use for Inference

First install the SetFit library:

```bash

pip install setfit

```

Then you can load this model and run inference.

```python

from setfit import SetFitModel

# Download from the 🤗 Hub

model = SetFitModel.from_pretrained("anismahmahi/G2_replace_Whata_repetition_with_noPropaganda_SetFit")

# Run inference

preds = model("Columbus police are investigating the shootings.")

```

<!--

### Downstream Use

*List how someone could finetune this model on their own dataset.*

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Set Metrics

| Training set | Min | Median | Max |
|:-------------|:----|:--------|:----|
| Word count | 1 | 23.1093 | 129 |

### Training Hyperparameters

- batch_size: (16, 16)

- num_epochs: (2, 2)

- max_steps: -1

- sampling_strategy: oversampling

- num_iterations: 10

- body_learning_rate: (2e-05, 1e-05)

- head_learning_rate: 0.01

- loss: CosineSimilarityLoss

- distance_metric: cosine_distance

- margin: 0.25

- end_to_end: False

- use_amp: False

- warmup_proportion: 0.1

- seed: 42

- eval_max_steps: -1

- load_best_model_at_end: True
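In SetFit, the tuple-valued settings (`batch_size`, `num_epochs`, `body_learning_rate`) apply to the embedding (contrastive) phase and the classifier phase, respectively. With `end_to_end: False`, the second `body_learning_rate` value presumably has no effect. `distance_metric` and `margin` are intended for triplet-style losses and likely do not affect `CosineSimilarityLoss`. The sketch below, written in plain Python rather than the library's own PyTorch implementation, illustrates the idea behind `CosineSimilarityLoss`: it computes the cosine similarity of a pair of sentence embeddings and regresses that similarity onto the pair label (assumed here to be 1.0 for same-class pairs and 0.0 for different-class pairs) using mean squared error. The toy 2-D vectors stand in for real embeddings.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_similarity_loss(pairs, labels):
    """Mean squared error between cos(u, v) and each pair's target similarity."""
    errors = [(cosine_similarity(u, v) - y) ** 2 for (u, v), y in zip(pairs, labels)]
    return sum(errors) / len(errors)


# Toy embeddings: an identical pair and an orthogonal pair.
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
print(cosine_similarity_loss(pairs, [1.0, 0.0]))  # labels agree with geometry
print(cosine_similarity_loss(pairs, [0.0, 1.0]))  # labels contradict geometry
```

Training pulls same-class embeddings toward a similarity of 1 and pushes different-class embeddings toward 0. The classification head is then fit on these embeddings.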

### Training Results

| Epoch | Step | Training Loss | Validation Loss |
|:-------:|:--------:|:-------------:|:---------------:|
| 0.0002 | 1 | 0.3592 | - |
| 0.0121 | 50 | 0.2852 | - |
| 0.0243 | 100 | 0.2694 | - |
| 0.0364 | 150 | 0.2182 | - |
| 0.0486 | 200 | 0.2224 | - |
| 0.0607 | 250 | 0.2634 | - |
| 0.0729 | 300 | 0.2431 | - |
| 0.0850 | 350 | 0.2286 | - |
| 0.0971 | 400 | 0.197 | - |
| 0.1093 | 450 | 0.2466 | - |
| 0.1214 | 500 | 0.2374 | - |
| 0.1336 | 550 | 0.2134 | - |
| 0.1457 | 600 | 0.2092 | - |
| 0.1578 | 650 | 0.1987 | - |
| 0.1700 | 700 | 0.2288 | - |
| 0.1821 | 750 | 0.1562 | - |
| 0.1943 | 800 | 0.27 | - |
| 0.2064 | 850 | 0.1314 | - |
| 0.2186 | 900 | 0.2144 | - |
| 0.2307 | 950 | 0.184 | - |
| 0.2428 | 1000 | 0.2069 | - |
| 0.2550 | 1050 | 0.1773 | - |
| 0.2671 | 1100 | 0.0704 | - |
| 0.2793 | 1150 | 0.1139 | - |
| 0.2914 | 1200 | 0.2398 | - |
| 0.3035 | 1250 | 0.0672 | - |
| 0.3157 | 1300 | 0.1321 | - |
| 0.3278 | 1350 | 0.0803 | - |
| 0.3400 | 1400 | 0.0589 | - |
| 0.3521 | 1450 | 0.0428 | - |
| 0.3643 | 1500 | 0.0886 | - |
| 0.3764 | 1550 | 0.0839 | - |
| 0.3885 | 1600 | 0.1843 | - |
| 0.4007 | 1650 | 0.0375 | - |
| 0.4128 | 1700 | 0.114 | - |
| 0.4250 | 1750 | 0.1264 | - |
| 0.4371 | 1800 | 0.0585 | - |
| 0.4492 | 1850 | 0.0586 | - |
| 0.4614 | 1900 | 0.0805 | - |
| 0.4735 | 1950 | 0.0686 | - |
| 0.4857 | 2000 | 0.0684 | - |
| 0.4978 | 2050 | 0.0803 | - |
| 0.5100 | 2100 | 0.076 | - |
| 0.5221 | 2150 | 0.0888 | - |
| 0.5342 | 2200 | 0.1091 | - |
| 0.5464 | 2250 | 0.038 | - |
| 0.5585 | 2300 | 0.0674 | - |
| 0.5707 | 2350 | 0.0562 | - |
| 0.5828 | 2400 | 0.0603 | - |
| 0.5949 | 2450 | 0.0669 | - |
| 0.6071 | 2500 | 0.0829 | - |
| 0.6192 | 2550 | 0.1442 | - |
| 0.6314 | 2600 | 0.0914 | - |
| 0.6435 | 2650 | 0.0357 | - |
| 0.6557 | 2700 | 0.0546 | - |
| 0.6678 | 2750 | 0.0748 | - |
| 0.6799 | 2800 | 0.0149 | - |
| 0.6921 | 2850 | 0.1067 | - |
| 0.7042 | 2900 | 0.0054 | - |
| 0.7164 | 2950 | 0.0878 | - |
| 0.7285 | 3000 | 0.0385 | - |
| 0.7407 | 3050 | 0.036 | - |
| 0.7528 | 3100 | 0.0902 | - |
| 0.7649 | 3150 | 0.0734 | - |
| 0.7771 | 3200 | 0.0369 | - |
| 0.7892 | 3250 | 0.0031 | - |
| 0.8014 | 3300 | 0.0113 | - |
| 0.8135 | 3350 | 0.0862 | - |
| 0.8256 | 3400 | 0.0549 | - |
| 0.8378 | 3450 | 0.0104 | - |
| 0.8499 | 3500 | 0.0072 | - |
| 0.8621 | 3550 | 0.0546 | - |
| 0.8742 | 3600 | 0.0579 | - |
| 0.8864 | 3650 | 0.0789 | - |
| 0.8985 | 3700 | 0.0711 | - |
| 0.9106 | 3750 | 0.0361 | - |
| 0.9228 | 3800 | 0.0292 | - |
| 0.9349 | 3850 | 0.0121 | - |
| 0.9471 | 3900 | 0.0066 | - |
| 0.9592 | 3950 | 0.0091 | - |
| 0.9713 | 4000 | 0.0027 | - |
| 0.9835 | 4050 | 0.0891 | - |
| 0.9956 | 4100 | 0.0186 | - |
| **1.0** | **4118** | **-** | **0.2746** |
| 1.0078 | 4150 | 0.0246 | - |
| 1.0199 | 4200 | 0.0154 | - |
| 1.0321 | 4250 | 0.0056 | - |
| 1.0442 | 4300 | 0.0343 | - |
| 1.0563 | 4350 | 0.0375 | - |
| 1.0685 | 4400 | 0.0106 | - |
| 1.0806 | 4450 | 0.0025 | - |
| 1.0928 | 4500 | 0.0425 | - |
| 1.1049 | 4550 | 0.0019 | - |
| 1.1170 | 4600 | 0.0014 | - |
| 1.1292 | 4650 | 0.0883 | - |
| 1.1413 | 4700 | 0.0176 | - |
| 1.1535 | 4750 | 0.0204 | - |
| 1.1656 | 4800 | 0.0011 | - |
| 1.1778 | 4850 | 0.005 | - |
| 1.1899 | 4900 | 0.0238 | - |
| 1.2020 | 4950 | 0.0362 | - |
| 1.2142 | 5000 | 0.0219 | - |
| 1.2263 | 5050 | 0.0487 | - |
| 1.2385 | 5100 | 0.0609 | - |
| 1.2506 | 5150 | 0.0464 | - |
| 1.2627 | 5200 | 0.0033 | - |
| 1.2749 | 5250 | 0.0087 | - |
| 1.2870 | 5300 | 0.0101 | - |
| 1.2992 | 5350 | 0.0529 | - |
| 1.3113 | 5400 | 0.0243 | - |
| 1.3235 | 5450 | 0.001 | - |
| 1.3356 | 5500 | 0.0102 | - |
| 1.3477 | 5550 | 0.0047 | - |
| 1.3599 | 5600 | 0.0034 | - |
| 1.3720 | 5650 | 0.0118 | - |
| 1.3842 | 5700 | 0.0742 | - |
| 1.3963 | 5750 | 0.0538 | - |
| 1.4085 | 5800 | 0.0162 | - |
| 1.4206 | 5850 | 0.0079 | - |
| 1.4327 | 5900 | 0.0027 | - |
| 1.4449 | 5950 | 0.0035 | - |
| 1.4570 | 6000 | 0.0581 | - |
| 1.4692 | 6050 | 0.0813 | - |
| 1.4813 | 6100 | 0.0339 | - |
| 1.4934 | 6150 | 0.0312 | - |
| 1.5056 | 6200 | 0.0323 | - |
| 1.5177 | 6250 | 0.0521 | - |
| 1.5299 | 6300 | 0.0016 | - |
| 1.5420 | 6350 | 0.0009 | - |
| 1.5542 | 6400 | 0.0967 | - |
| 1.5663 | 6450 | 0.0009 | - |
| 1.5784 | 6500 | 0.031 | - |
| 1.5906 | 6550 | 0.0114 | - |
| 1.6027 | 6600 | 0.0599 | - |
| 1.6149 | 6650 | 0.0416 | - |
| 1.6270 | 6700 | 0.0047 | - |
| 1.6391 | 6750 | 0.0234 | - |
| 1.6513 | 6800 | 0.0609 | - |
| 1.6634 | 6850 | 0.022 | - |
| 1.6756 | 6900 | 0.0042 | - |
| 1.6877 | 6950 | 0.0336 | - |
| 1.6999 | 7000 | 0.0592 | - |
| 1.7120 | 7050 | 0.0536 | - |
| 1.7241 | 7100 | 0.1198 | - |
| 1.7363 | 7150 | 0.1035 | - |
| 1.7484 | 7200 | 0.0549 | - |
| 1.7606 | 7250 | 0.027 | - |
| 1.7727 | 7300 | 0.0251 | - |
| 1.7848 | 7350 | 0.0225 | - |
| 1.7970 | 7400 | 0.0027 | - |
| 1.8091 | 7450 | 0.0309 | - |
| 1.8213 | 7500 | 0.024 | - |
| 1.8334 | 7550 | 0.0355 | - |
| 1.8456 | 7600 | 0.0239 | - |
| 1.8577 | 7650 | 0.0377 | - |
| 1.8698 | 7700 | 0.012 | - |
| 1.8820 | 7750 | 0.0233 | - |
| 1.8941 | 7800 | 0.0184 | - |
| 1.9063 | 7850 | 0.0022 | - |
| 1.9184 | 7900 | 0.0043 | - |
| 1.9305 | 7950 | 0.014 | - |
| 1.9427 | 8000 | 0.0083 | - |
| 1.9548 | 8050 | 0.0084 | - |
| 1.9670 | 8100 | 0.0009 | - |
| 1.9791 | 8150 | 0.002 | - |
| 1.9913 | 8200 | 0.0002 | - |
| 2.0 | 8236 | - | 0.2768 |

* The bold row denotes the saved checkpoint.
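The log is consistent with 4118 optimizer steps per epoch, where the Epoch column is Step divided by 4118. It is also consistent with `load_best_model_at_end` keeping the checkpoint that has the lower validation loss (0.2746 after epoch 1 vs. 0.2768 after epoch 2), which matches the bold row. The sketch below reproduces both observations. It assumes that validation loss is the selection criterion, which the card does not state explicitly.

```python
STEPS_PER_EPOCH = 4118  # step count at the end of epoch 1 in the table


def epoch_at(step):
    """Fractional epoch as logged in the table, rounded to 4 decimals."""
    return round(step / STEPS_PER_EPOCH, 4)


# Validation losses logged at the end of each epoch (copied from the table).
val_losses = {4118: 0.2746, 8236: 0.2768}

# Assumed selection rule: keep the checkpoint with the lowest validation loss.
best_step = min(val_losses, key=val_losses.get)
print(epoch_at(50), epoch_at(4150), best_step)
```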

### Framework Versions

- Python: 3.10.12

- SetFit: 1.0.1

- Sentence Transformers: 2.2.2

- Transformers: 4.35.2

- PyTorch: 2.1.0+cu121

- Datasets: 2.16.1

- Tokenizers: 0.15.0

## Citation

### BibTeX

```bibtex
@article{https://doi.org/10.48550/arxiv.2209.11055,
  doi = {10.48550/ARXIV.2209.11055},
  url = {https://arxiv.org/abs/2209.11055},
  author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},
  keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},
  title = {Efficient Few-Shot Learning Without Prompts},
  publisher = {arXiv},
  year = {2022},
  copyright = {Creative Commons Attribution 4.0 International}
}
```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

-->

|

{"base_model": "sentence-transformers/paraphrase-mpnet-base-v2", "library_name": "setfit", "metrics": ["accuracy"], "pipeline_tag": "text-classification", "tags": ["setfit", "sentence-transformers", "text-classification", "generated_from_setfit_trainer"], "widget": [{"text": "Fox News, The Washington Post, NBC News, The Associated Press and the Los Angeles Times are among the entities that have said they will file amicus briefs on behalf of CNN."}, {"text": "Tommy Robinson is in prison today because he violated a court order demanding that he not film videos outside the trials of Muslim rape gangs.\n"}, {"text": "As I wrote during the presidential campaign, Trump has no idea of Washington and no idea who to appoint who would support him rather than work against him."}, {"text": "IN MAY 2013, the Washington Post’s Greg Miller reported that the head of the CIA’s clandestine service was being shifted out of that position as a result of “a management shake-up” by then-Director John Brennan."}, {"text": "Columbus police are investigating the shootings."}], "inference": false, "model-index": [{"name": "SetFit with sentence-transformers/paraphrase-mpnet-base-v2", "results": [{"task": {"type": "text-classification", "name": "Text Classification"}, "dataset": {"name": "Unknown", "type": "unknown", "split": "test"}, "metrics": [{"type": "accuracy", "value": 0.602089552238806, "name": "Accuracy"}]}]}]}

|

task

|

[

"TEXT_CLASSIFICATION"

] | 46,835 |

MultiBertGunjanPatrick/multiberts-seed-4-100k

|

MultiBertGunjanPatrick

| null |

[

"transformers",

"pytorch",

"bert",

"pretraining",

"exbert",

"multiberts",

"multiberts-seed-4",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:2106.16163",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | 2022-03-02T23:29:04Z |

2021-10-04T05:10:05+00:00

| 111 | 0 |

---

datasets:

- bookcorpus

- wikipedia

language: en

license: apache-2.0

tags:

- exbert

- multiberts

- multiberts-seed-4

---

# MultiBERTs Seed 4 Checkpoint 100k (uncased)

Seed 4 intermediate checkpoint 100k MultiBERTs (pretrained BERT) model on English language using a masked language modeling (MLM) objective. It was introduced in