
WorldSense: Evaluating Real-world Omnimodal Understanding for Multimodal LLMs

Jack Hong¹, Shilin Yan¹†, Jiayin Cai¹, Xiaolong Jiang¹, Yao Hu¹, Weidi Xie²‡

¹Xiaohongshu Inc. ²Shanghai Jiao Tong University

🔥 News

2025.02.07 🌟 We release WorldSense, the first benchmark for real-world omnimodal understanding of MLLMs.

👀 WorldSense Overview

We introduce WorldSense, the first benchmark for assessing multimodal video understanding that simultaneously encompasses visual, audio, and text inputs. In contrast to existing benchmarks, WorldSense has the following features:

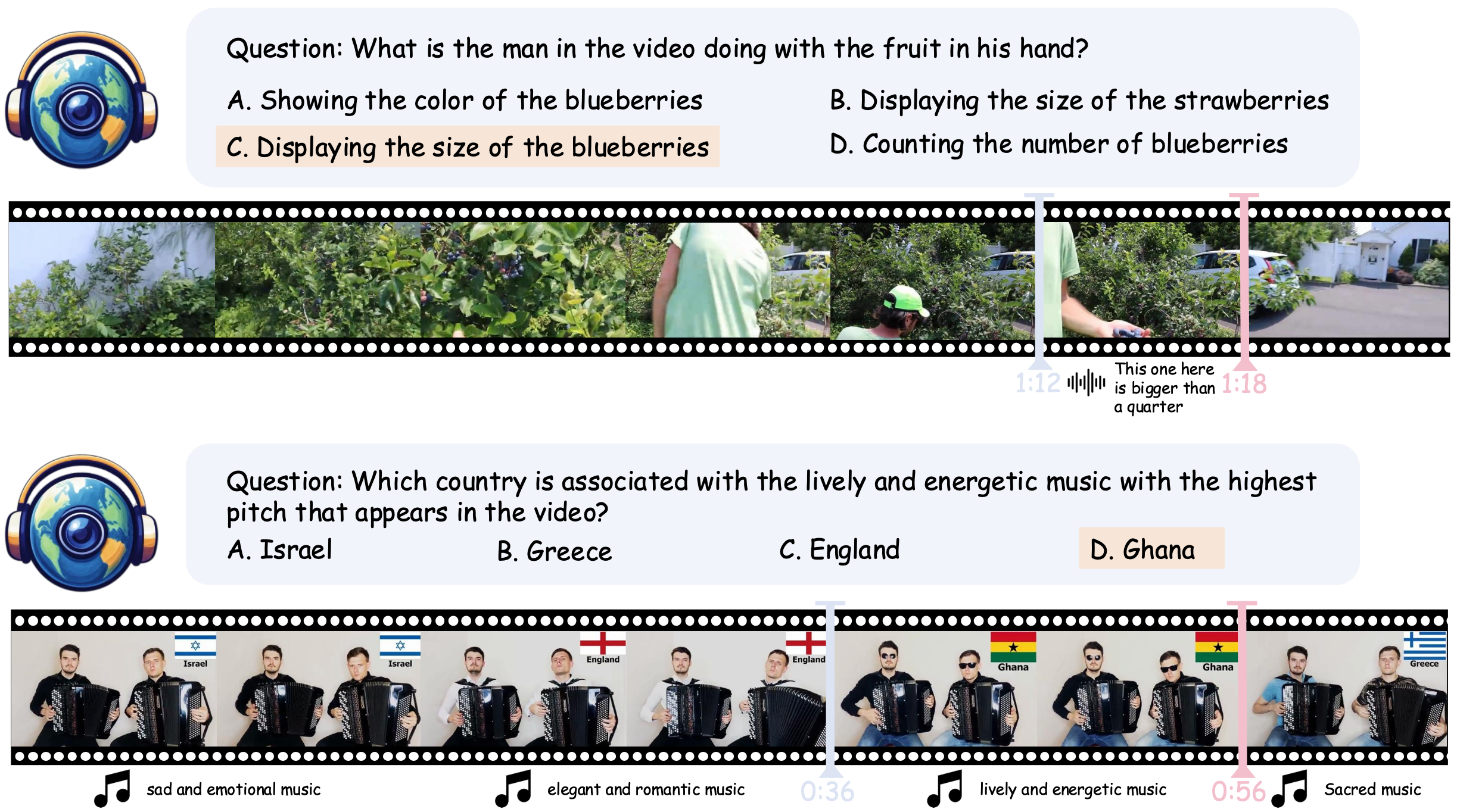

- Collaboration of omni-modality. The evaluation tasks are designed with a strong coupling between audio and video, so models must jointly perceive and combine both modalities to answer correctly;

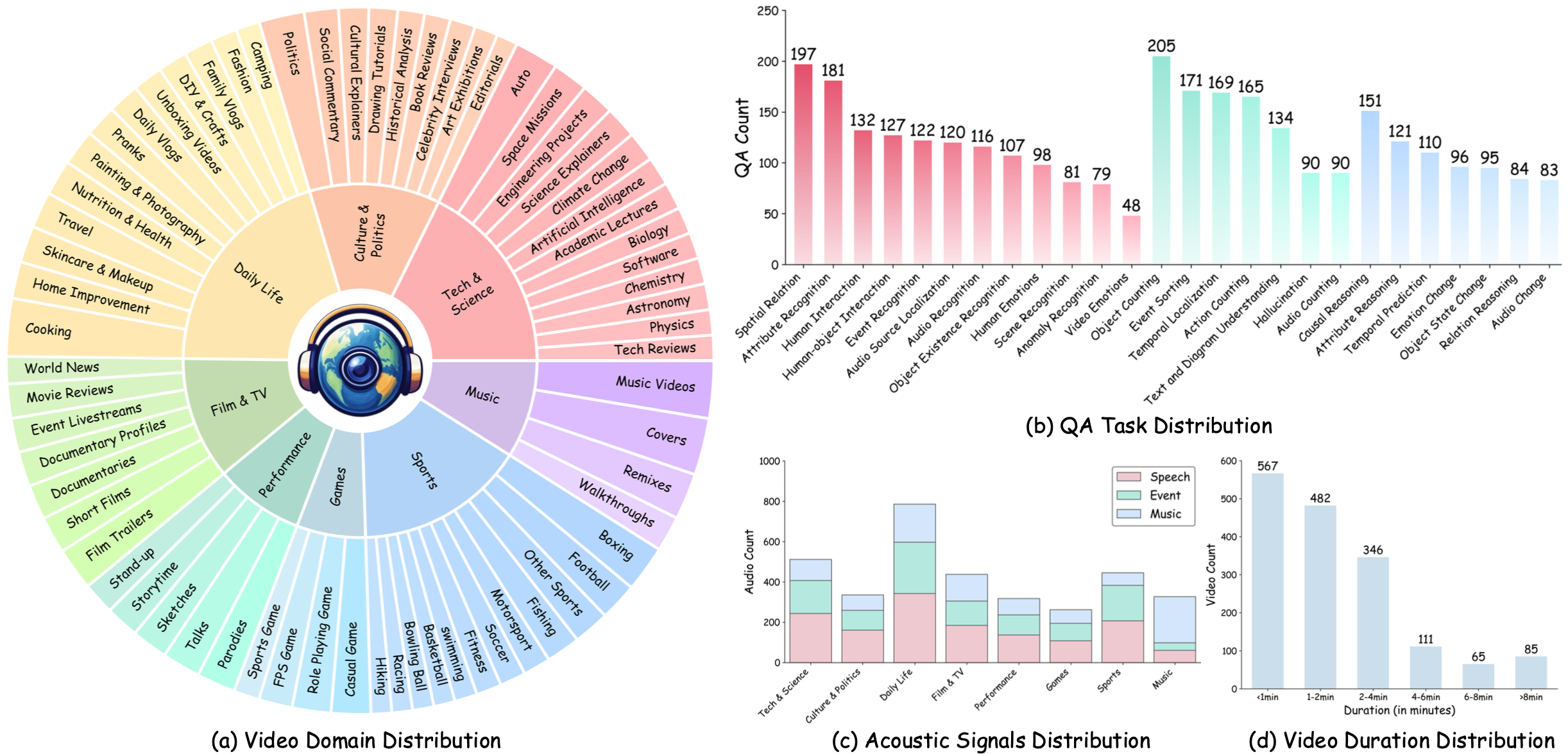

- Diversity of videos and tasks. WorldSense contains 1,662 synchronized audio-visual videos, systematically categorized into 8 primary domains and 67 fine-grained subcategories to cover a broad range of scenarios, together with 3,172 multiple-choice QA pairs across 26 distinct tasks to enable comprehensive evaluation;

- High-quality annotations. All QA pairs are manually labeled by 80 expert annotators and refined through multiple rounds of correction to ensure quality.
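To make the statistics above concrete, here is a minimal sketch of how one multiple-choice QA item could be represented and how the dataset-level counts (QA pairs, videos, domains, subcategories, tasks) follow from a list of such items. The `QAItem` class, its field names, and the `summarize` helper are hypothetical illustrations, not the released WorldSense schema.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class QAItem:
    """One multiple-choice question. All field names are hypothetical."""
    video_id: str
    domain: str              # one of the primary domains
    subcategory: str         # one of the fine-grained subcategories
    task: str                # one of the task types
    question: str
    options: Tuple[str, ...]  # e.g. ("A. ...", "B. ...", "C. ...", "D. ...")
    answer: str              # correct option letter, e.g. "B"

    def __post_init__(self) -> None:
        # The answer must be one of the letters A, B, C, ... that label the options.
        valid = [chr(ord("A") + i) for i in range(len(self.options))]
        if self.answer not in valid:
            raise ValueError(f"answer {self.answer!r} not in {valid}")


def summarize(items: List[QAItem]) -> Dict[str, int]:
    """Count QA pairs plus distinct videos, domains, subcategories and tasks."""
    return {
        "qa_pairs": len(items),
        "videos": len({it.video_id for it in items}),
        "domains": len({it.domain for it in items}),
        "subcategories": len({it.subcategory for it in items}),
        "tasks": len({it.task for it in items}),
    }


if __name__ == "__main__":
    # Toy items with placeholder labels, not real WorldSense data.
    opts = ("A. yes", "B. no")
    items = [
        QAItem("v1", "domain_a", "sub_1", "task_x", "Q1?", opts, "A"),
        QAItem("v1", "domain_a", "sub_2", "task_y", "Q2?", opts, "B"),
        QAItem("v2", "domain_b", "sub_3", "task_x", "Q3?", opts, "A"),
    ]
    print(summarize(items))
```

Several QA pairs can share one video, which is why the number of QA pairs (3,172) exceeds the number of videos (1,662).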

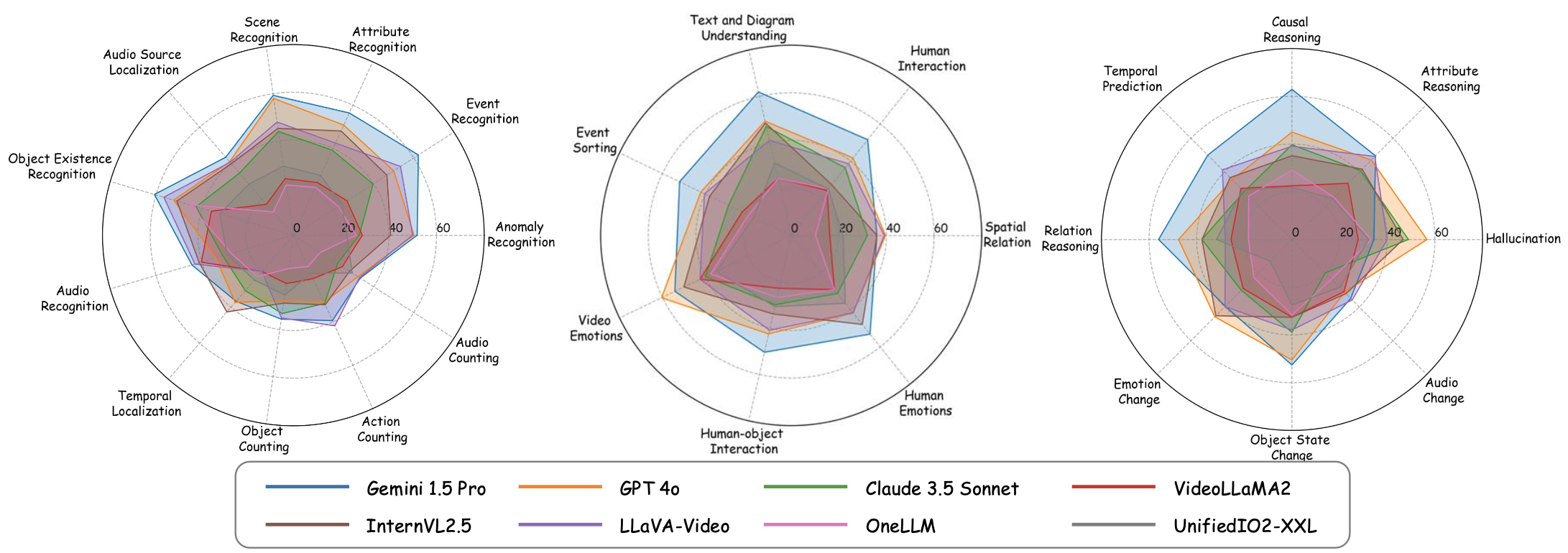

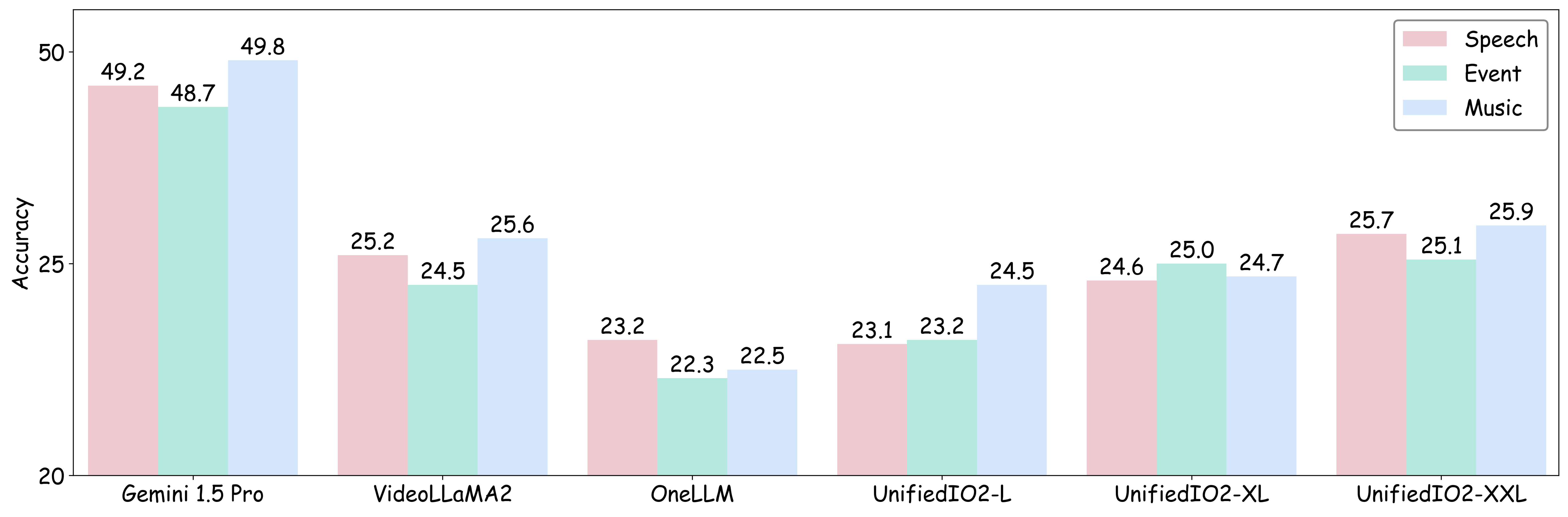

We extensively evaluate various state-of-the-art models on WorldSense. The results indicate that existing models face significant challenges in understanding real-world scenarios: the best-performing model reaches only 48% accuracy. We hope WorldSense can serve as a platform for evaluating the ability to construct and understand coherent contexts from omnimodal inputs.
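Since every question is multiple-choice, accuracy is the fraction of questions whose predicted option letter matches the ground truth. The sketch below assumes free-form model responses and uses a simple, hypothetical regex heuristic (`extract_choice`) to pull out the option letter; it is not the official WorldSense or VLMEvalKit answer-matching logic.

```python
import re
from typing import List, Optional


def extract_choice(response: str, letters: str = "ABCD") -> Optional[str]:
    """Heuristically extract an option letter from a free-form response."""
    text = response.strip()
    # Case 1: the response starts with the letter, e.g. "B", "B.", "(B) ...".
    m = re.match(rf"\(?([{letters}])(?:[).:]|$)", text)
    if m:
        return m.group(1)
    # Case 2: phrases such as "The answer is C" or "Answer: (D)".
    m = re.search(rf"(?i:answer)\s*(?:is)?\s*:?\s*\(?([{letters}])\b", text)
    if m:
        return m.group(1)
    return None  # no recognizable choice counts as wrong


def accuracy(responses: List[str], answers: List[str]) -> float:
    """Fraction of responses whose extracted letter equals the gold letter."""
    if len(responses) != len(answers):
        raise ValueError("responses and answers must have the same length")
    if not answers:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(extract_choice(r) == a for r, a in zip(responses, answers))
    return correct / len(answers)


if __name__ == "__main__":
    preds = ["B", "The answer is C.", "I am not sure"]
    gold = ["B", "D", "A"]
    print(accuracy(preds, gold))
```

Treating an unparseable response as incorrect, rather than skipping it, keeps the denominator equal to the full question count.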

📐 Dataset Examples

🔍 Dataset

Please download our WorldSense from here.

🔮 Evaluation Pipeline

📍 Evaluation: Our evaluation has been reproduced in VLMEvalKit, and we thank its developers. Please refer to VLMEvalKit for details.

📍 Leaderboard:

If you want to add your model to our leaderboard, please contact [email protected].

📈 Experimental Results

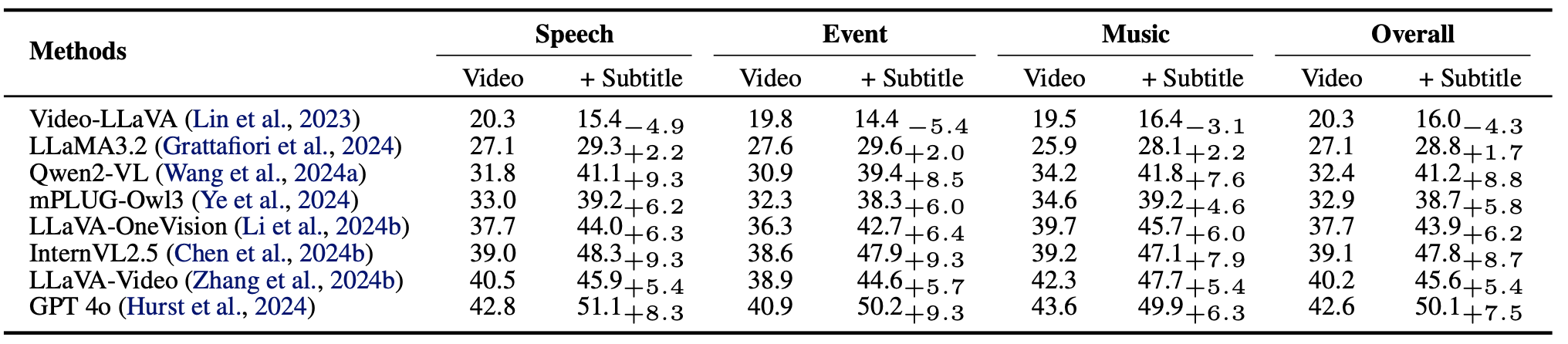

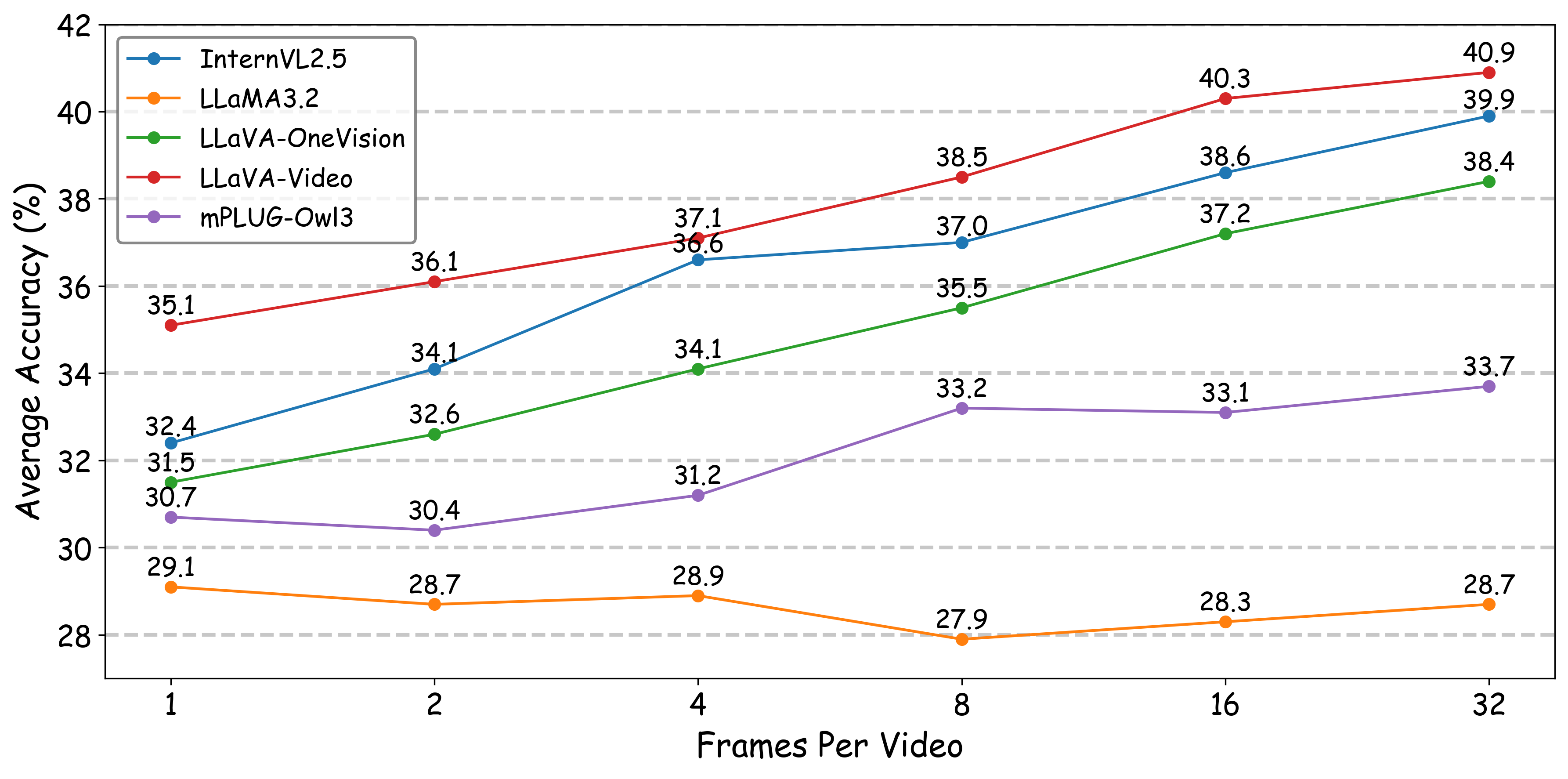

- Evaluation results of state-of-the-art MLLMs.

- Fine-grained results by task category.

- Fine-grained results by audio type.

- In-depth analysis of real-world omnimodal understanding.
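The fine-grained results group per-question correctness by an attribute such as task category or audio type and report accuracy per group. A minimal sketch follows, assuming each evaluated question is a dict with a boolean `correct` field and grouping fields named `task` and `audio_type`; these field names and the toy labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def grouped_accuracy(records: Iterable[Mapping], key: str) -> Dict[str, float]:
    """Accuracy per value of `key`; each record needs `key` and a bool `correct`."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for rec in records:
        group = rec[key]
        totals[group] += 1
        hits[group] += int(rec["correct"])
    return {group: hits[group] / totals[group] for group in totals}


if __name__ == "__main__":
    # Toy per-question results with placeholder labels, not real WorldSense output.
    results = [
        {"task": "counting", "audio_type": "speech", "correct": True},
        {"task": "counting", "audio_type": "music", "correct": False},
        {"task": "recognition", "audio_type": "speech", "correct": True},
    ]
    print(grouped_accuracy(results, "task"))
    print(grouped_accuracy(results, "audio_type"))
```

Computing per-group accuracy from the same per-question records used for the overall score keeps the breakdowns consistent with the headline number.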

📖 Citation

If you find WorldSense helpful for your research, please consider citing our work. Thanks!

@article{hong2025worldsenseevaluatingrealworldomnimodal,
  title={WorldSense: Evaluating Real-world Omnimodal Understanding for Multimodal LLMs},
  author={Jack Hong and Shilin Yan and Jiayin Cai and Xiaolong Jiang and Yao Hu and Weidi Xie},
  year={2025},
  eprint={2502.04326},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2502.04326},
}
