wav2vec2-large-lv60_phoneme-timit_english_timit-4k_002

This model is a fine-tuned version of facebook/wav2vec2-large-lv60 on the TIMIT dataset. It achieves the following results on the evaluation set:

- Loss: 0.3354

- PER: 0.1053

- So far the highest peforming model among my models

Intended uses & limitations

- Phoneme recognition based on the TIMIT phoneme set

Phoneme-wise errors

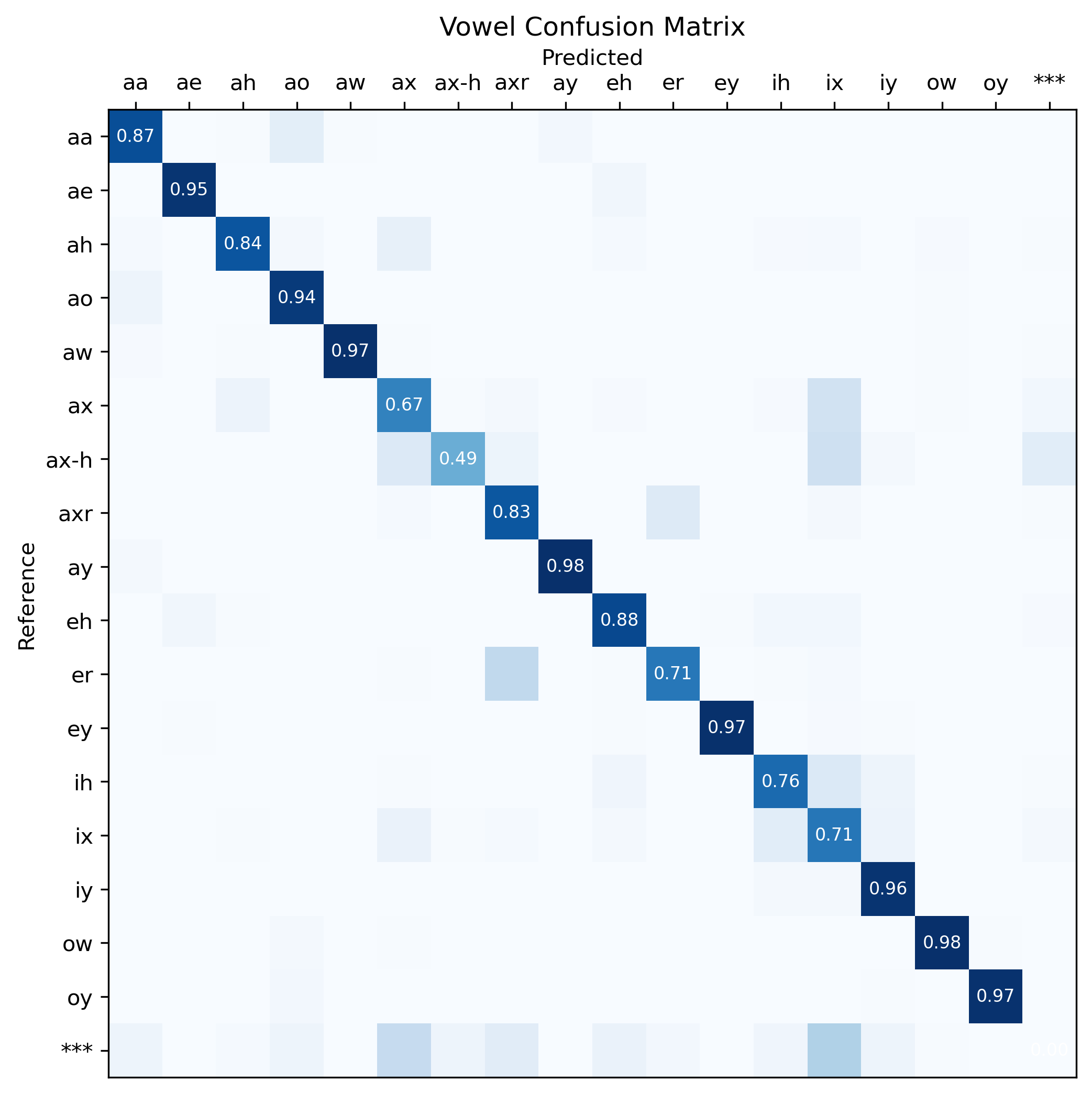

Vowel Phonemes

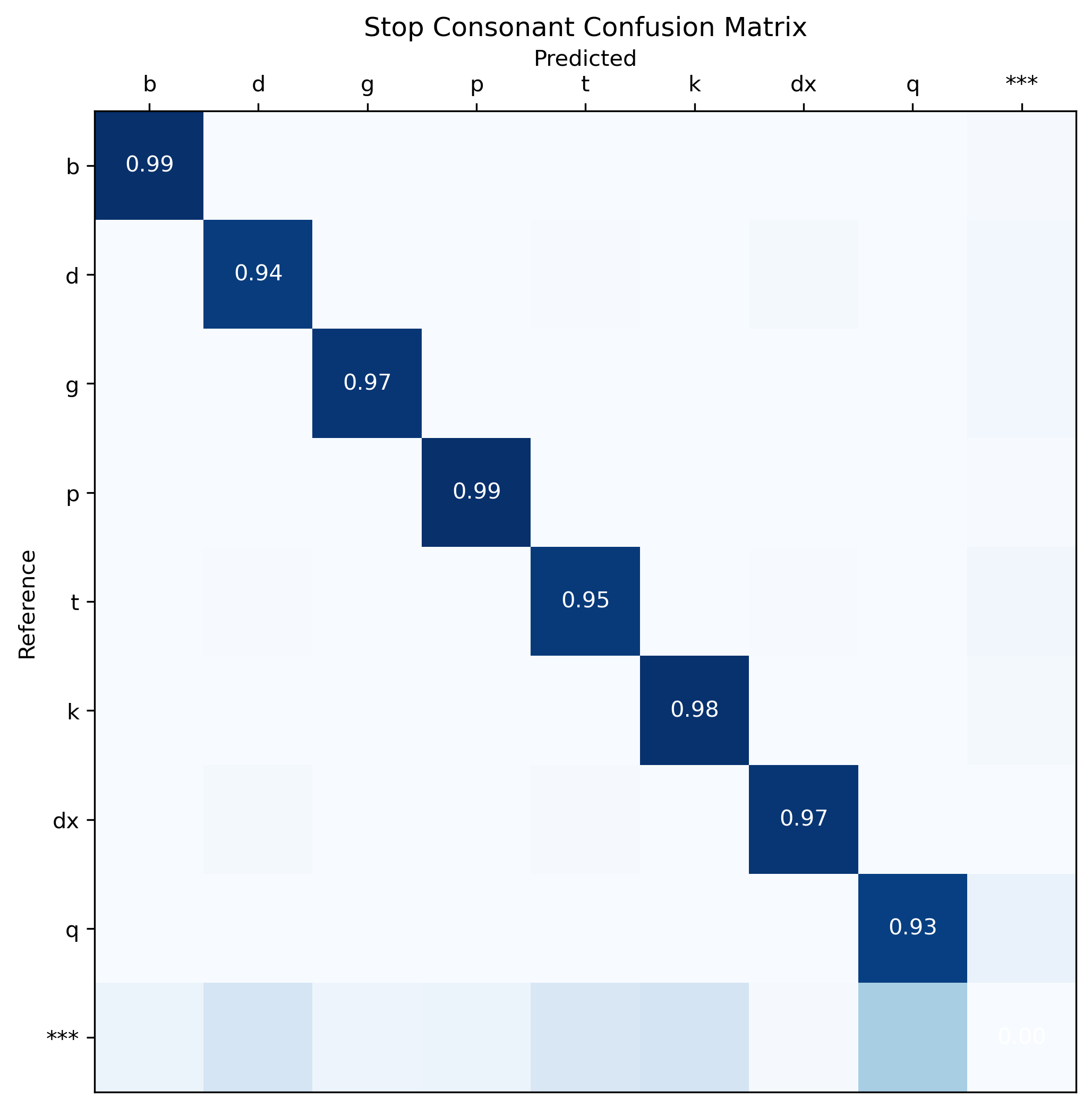

Stop Phonemes

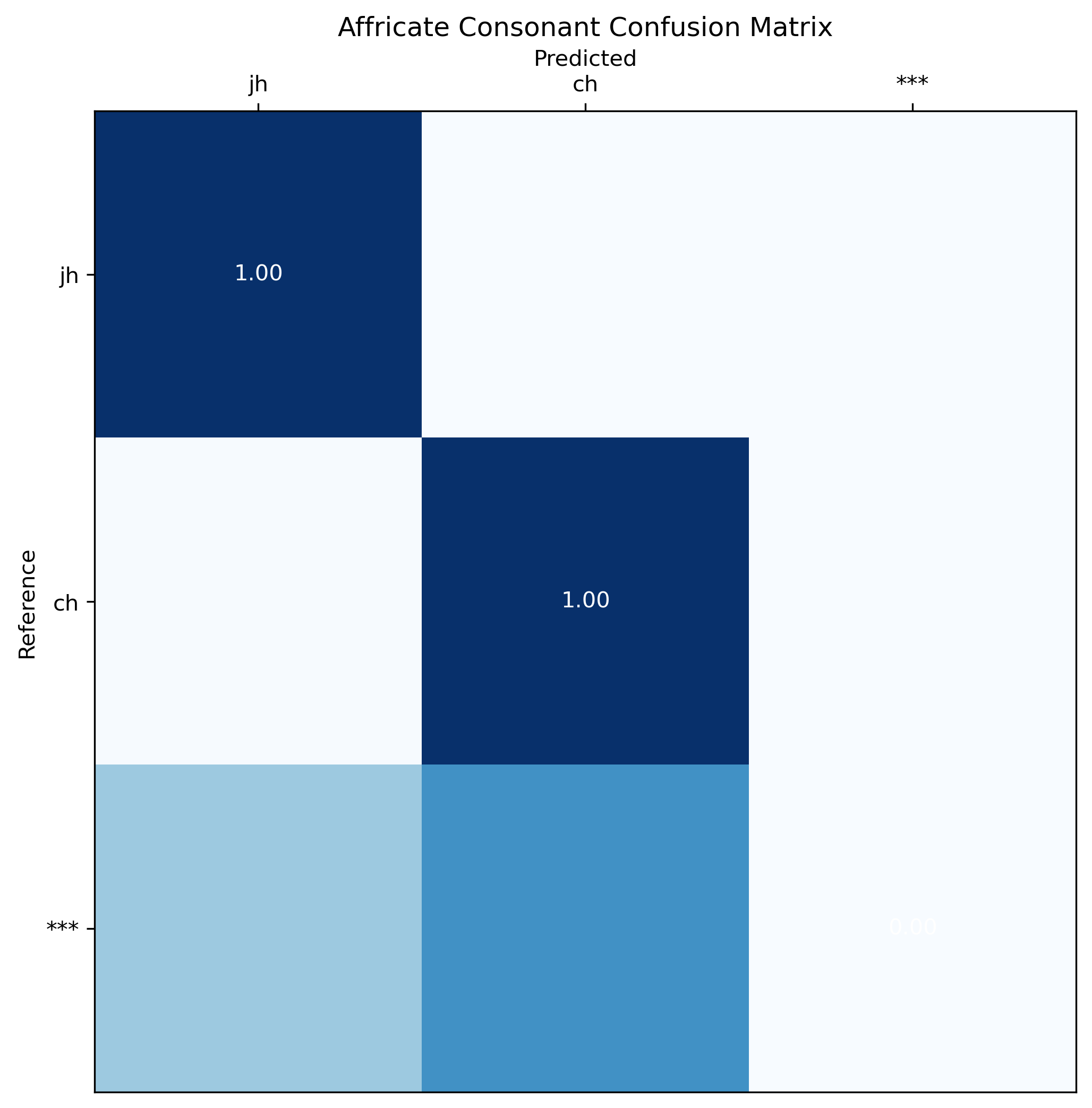

Affricate Phonemes

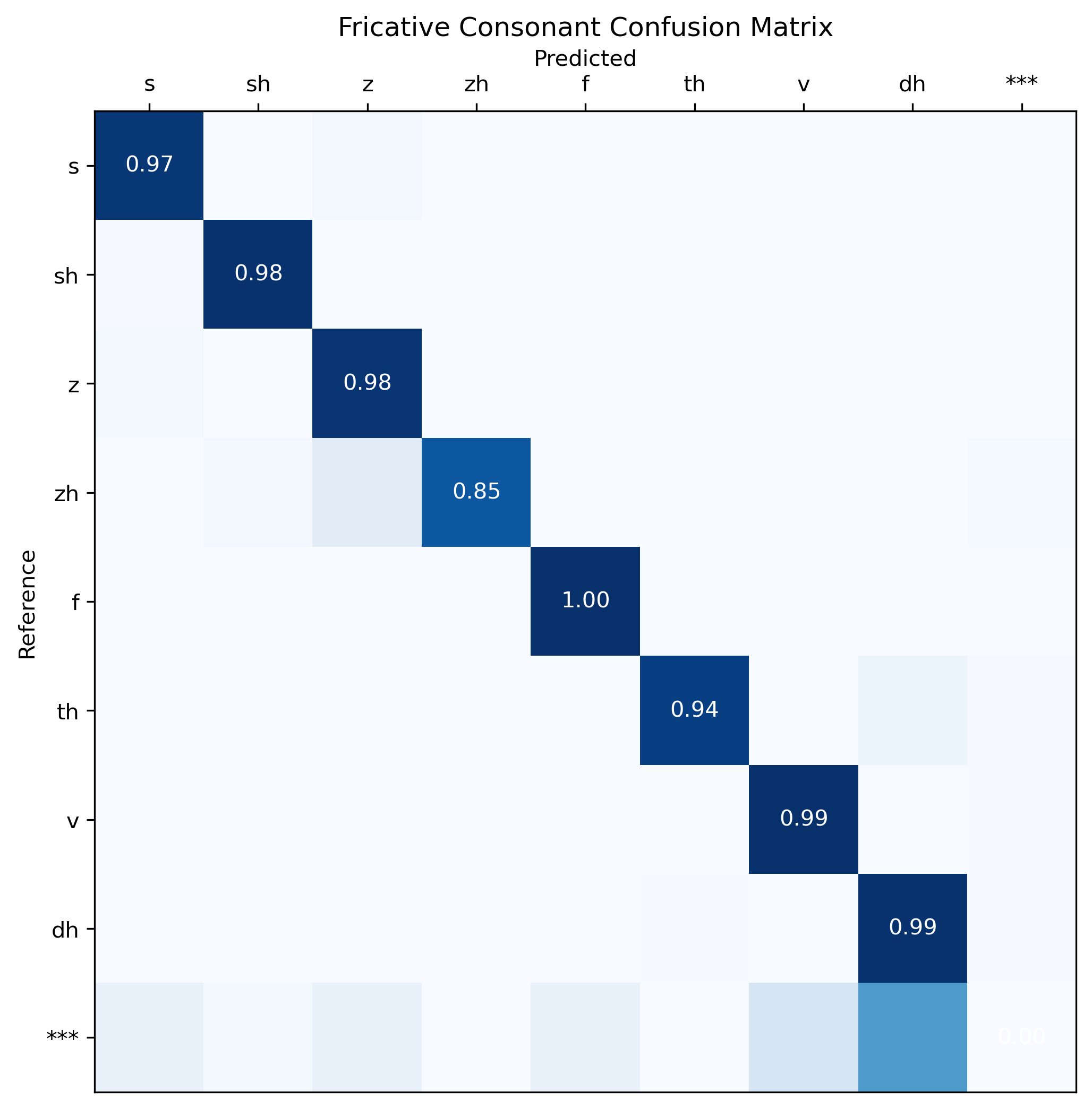

Fricative Phonemes

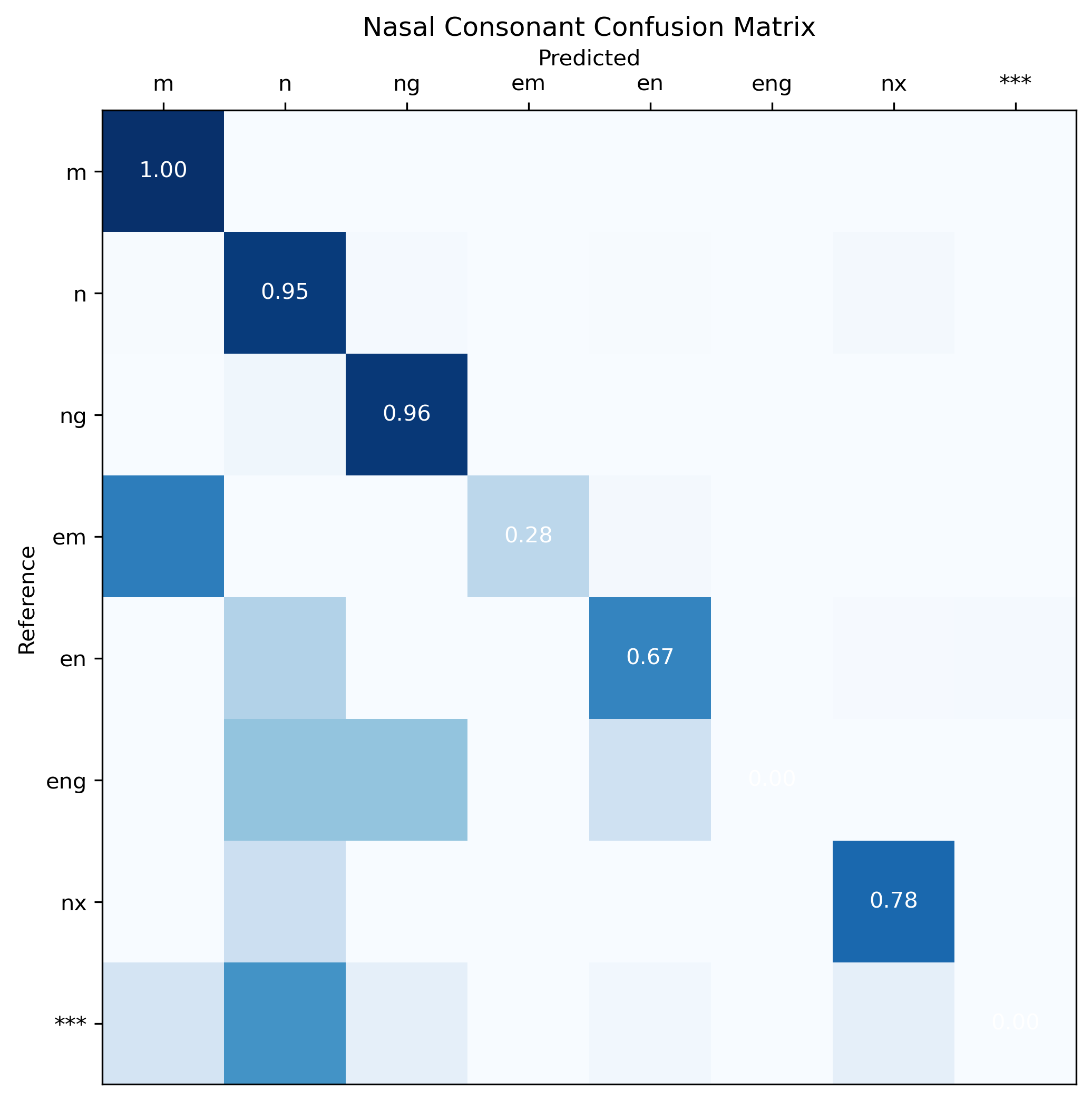

Nasal Phonemes

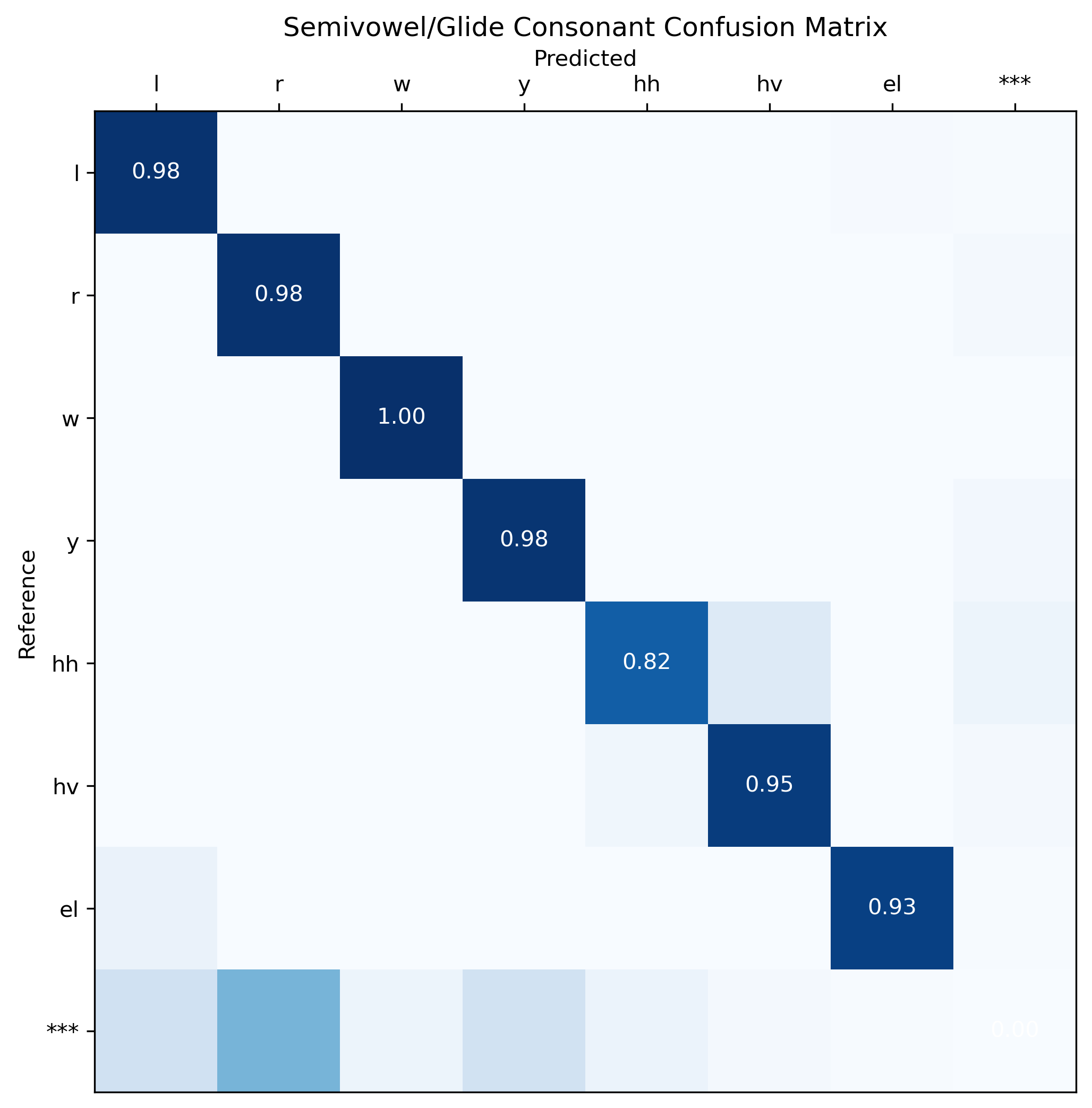

Semivowels/Glide Phonemes

Training and evaluation data

- Train: TIMIT train dataset (4620 samples)

- Test: TIMIT test dataset (1680 samples)

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 300

- training_steps: 3000

- mixed_precision_training: Native AMP

Training results

| Training Loss | Epoch | Step | Validation Loss | PER |

|---|---|---|---|---|

| 7.9352 | 1.04 | 300 | 3.7710 | 0.9617 |

| 2.7874 | 2.08 | 600 | 0.9080 | 0.1929 |

| 0.8205 | 3.11 | 900 | 0.4670 | 0.1492 |

| 0.5504 | 4.15 | 1200 | 0.4025 | 0.1408 |

| 0.4632 | 5.19 | 1500 | 0.3696 | 0.1374 |

| 0.4148 | 6.23 | 1800 | 0.3519 | 0.1343 |

| 0.3873 | 7.27 | 2100 | 0.3419 | 0.1329 |

| 0.3695 | 8.3 | 2400 | 0.3368 | 0.1317 |

| 0.3531 | 9.34 | 2700 | 0.3406 | 0.1320 |

| 0.3507 | 10.38 | 3000 | 0.3354 | 0.1315 |

Framework versions

- Transformers 4.38.1

- Pytorch 2.0.1

- Datasets 2.16.1

- Tokenizers 0.15.2

- Downloads last month

- 51

Inference Providers

NEW

This model is not currently available via any of the supported Inference Providers.

Model tree for excalibur12/wav2vec2-large-lv60_phoneme-timit_english_timit-4k_002

Base model

facebook/wav2vec2-large-lv60