Official PyTorch Implementation of SMILE (CVPR 2025).

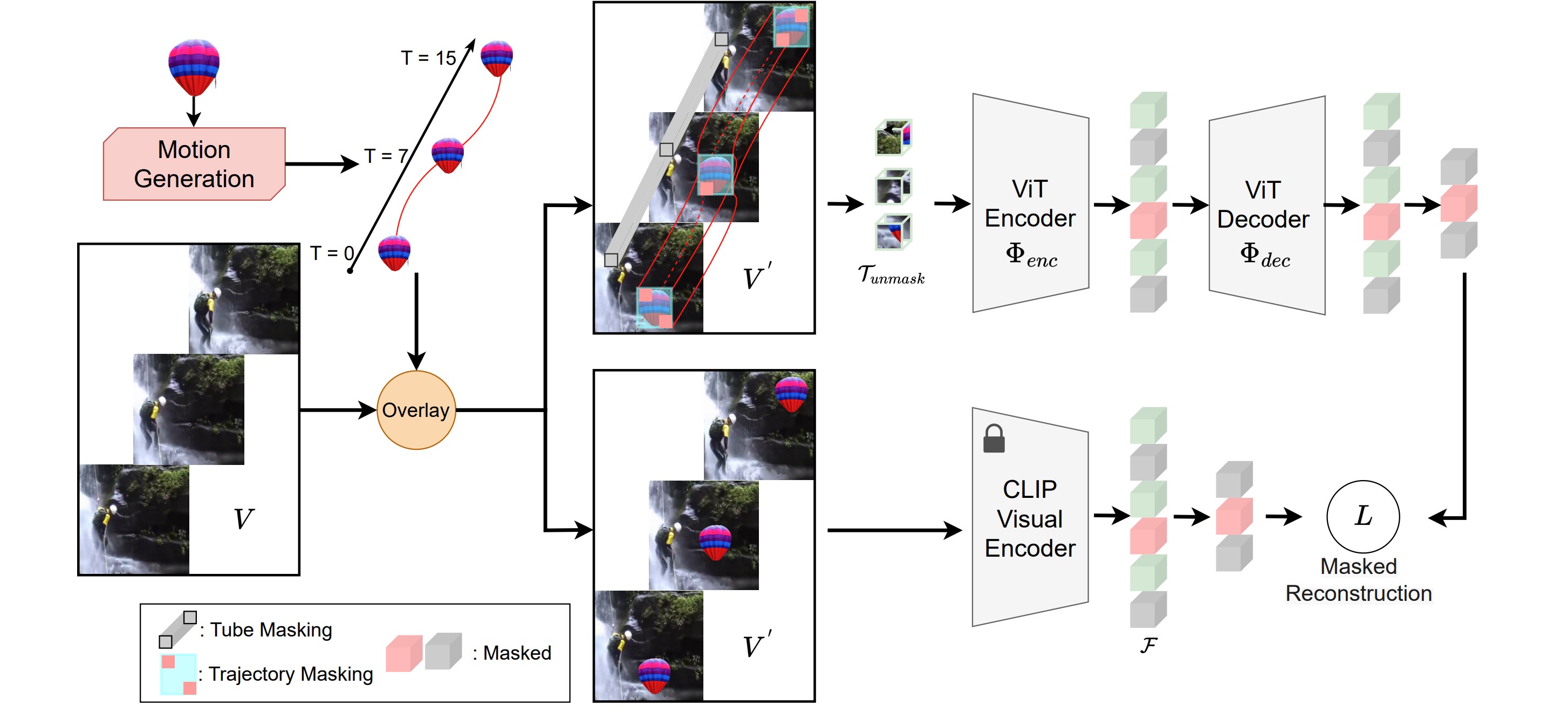

SMILE: Infusing Spatial and Motion Semantics in Masked Video Learning

Fida Mohammad Thoker, Letian Jiang, Chen Zhao, Bernard Ghanem

King Abdullah University of Science and Technology (KAUST)

📰 News

[2025.6.2] Code and pre-trained models are available now!

[2025.5.28] Code and pre-trained models will be released here. Watch this repository for the latest updates.

✨ Highlights

🔥 State-of-the-art on SSv2 and K400

Our method achieves state-of-the-art performance on SSv2 and K400 benchmarks with a ViT-B backbone, surpassing prior self-supervised video models by up to 2.5%, thanks to efficient CLIP-based semantic supervision.

⚡️ Leading Results Across Generalization Challenges

We evaluate our method on the SEVERE benchmark, covering domain shift, low-shot learning, fine-grained actions, and task adaptability. Our model consistently outperforms prior methods and achieves a 3.0% average gain over strong baselines, demonstrating superior generalization in diverse video understanding tasks.

😮 Superior Motion Representation Without Video-Text Alignment

Compared to CLIP-based methods such as ViCLIP and UMT, our model achieves higher accuracy on motion-sensitive datasets, particularly under linear probing. This indicates stronger video representations learned with less data and without relying on video-text alignment.

🚀 Main Results and Models

✨ Something-Something V2

| Method | Pretrain Dataset | Pretrain Epochs | Backbone | Top-1 | Finetune |
|---|---|---|---|---|---|
| SMILE | K400 | 800 | ViT-S | 69.1 | TODO |
| SMILE | K400 | 600 | ViT-B | 72.1 | log / checkpoint |
| SMILE | K400 | 1200 | ViT-B | 72.4 | log / checkpoint |
| SMILE | SSv2 | 800 | ViT-B | 72.5 | TODO |

✨ Kinetics-400

| Method | Pretrain Dataset | Pretrain Epochs | Backbone | Top-1 | Pretrain | Finetune |
|---|---|---|---|---|---|---|
| SMILE | K400 | 800 | ViT-S | 79.5 | TODO | TODO |
| SMILE | K400 | 600 | ViT-B | 83.1 | checkpoint | log / checkpoint |
| SMILE | K400 | 1200 | ViT-B | 83.4 | checkpoint | log / checkpoint |

🔨 Installation

Please follow the instructions in INSTALL.md.

➡️ Data Preparation

We follow the VideoMAE data preparation guide to prepare our datasets (K400 and SSv2). We provide our annotation files for both datasets: annotation_files. For pre-training, we use the training sets (train.csv).
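Before launching a run, it can help to sanity-check an annotation file. The sketch below is not part of this repository: `summarize_annotations` is a hypothetical helper, and it assumes the VideoMAE-style layout where each line is a video path followed by an integer label, separated by a single space. Adjust `delimiter` if the provided files differ.

```python
from collections import Counter
from pathlib import Path


def summarize_annotations(csv_path, delimiter=" "):
    """Count clips per label in an annotation file.

    Assumes each non-empty line looks like `<video_path><delimiter><label>`
    with an integer label (an assumption about the file layout).
    """
    counts = Counter()
    lines = Path(csv_path).read_text().splitlines()
    for line_no, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line:
            continue  # tolerate blank lines
        # Split from the right so paths containing the delimiter stay intact.
        parts = line.rsplit(delimiter, 1)
        if len(parts) != 2:
            raise ValueError(
                f"{csv_path}:{line_no}: expected 'path label', got {raw!r}"
            )
        _video_path, label = parts
        counts[int(label)] += 1
    return counts
```

Calling `summarize_annotations("train.csv")` returns a `Counter` mapping each label to its clip count, so `len(counts)` gives the number of classes present and `sum(counts.values())` the number of clips.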

We provide the list of segmented object images used for pretraining in object_instances.txt. The images will be released later.

🔄 Pre-training

Following the VideoMAE pre-training guide, we provide scripts for pre-training on the Kinetics-400 (K400) dataset using the ViT-Base model: scripts/pretrain/

As described in the paper, we adopt a two-stage training strategy. Please refer to the script names to identify which stage to run.

If you wish to perform your own pre-training, make sure to update the following parameters in the scripts:

- DATA_PATH: Path to your dataset
- OUTPUT_DIR: Directory to save output results
- OBJECTS_PATH: Path to the overlaying objects image dataset (image data to be released)
- FIRST_STAGE_CKPT: Path to the checkpoint from first-stage pre-training (for second-stage training)
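A misconfigured path usually surfaces only after the job has started, so a quick pre-flight check can save time. The following is a minimal sketch, not part of the SMILE codebase: `check_pretrain_paths` is a hypothetical helper whose arguments simply mirror the script parameters listed above, and it assumes the first-stage checkpoint is a single file.

```python
from pathlib import Path


def check_pretrain_paths(data_path, output_dir, objects_path, first_stage_ckpt=None):
    """Return a list of human-readable problems with the configured paths.

    `first_stage_ckpt` is only checked when given, since it applies to
    second-stage training. An empty list means no problems were found.
    """
    problems = []
    if not Path(data_path).exists():
        problems.append(f"DATA_PATH does not exist: {data_path}")
    if not Path(objects_path).exists():
        problems.append(f"OBJECTS_PATH does not exist: {objects_path}")
    if first_stage_ckpt is not None and not Path(first_stage_ckpt).is_file():
        problems.append(f"FIRST_STAGE_CKPT is not a file: {first_stage_ckpt}")
    # OUTPUT_DIR may not exist yet, but it must not collide with a file.
    if Path(output_dir).is_file():
        problems.append(f"OUTPUT_DIR points to a file: {output_dir}")
    return problems
```

For a first-stage run, leave `first_stage_ckpt` unset; for the second stage, pass the checkpoint produced by the first stage and confirm the returned list is empty before submitting the job.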

Note: Our pre-training experiments were conducted using 8 V100 (32 GB) GPUs.

⤴️ Fine-tuning with Pre-trained Models

Following the VideoMAE fine-tuning guide, we provide scripts for fine-tuning on the Something-Something v2 (SSv2) and Kinetics-400 (K400) datasets using the ViT-Base model: scripts/finetune/

To perform your own fine-tuning, please update the following parameters in the script:

- DATA_PATH: Path to your dataset
- MODEL_PATH: Path to the pre-trained model
- OUTPUT_DIR: Directory to save output results

Note: Our fine-tuning experiments were conducted using 4 V100 (32 GB) GPUs.

☎️ Contact

Fida Mohammad Thoker: [email protected]

👍 Acknowledgements

We sincerely thank Michael Dorkenwald for providing the object image dataset that supports this work.

This project is built upon VideoMAE and tubelet-contrast. Thanks to the contributors of these great codebases.

🔒 License

This project is released under the MIT license. For more details, please refer to the LICENSE file.

✏️ Citation

If you find this project helpful, please consider leaving a star ⭐️ and citing our paper:

@inproceedings{thoker2025smile,
  author    = {Thoker, Fida Mohammad and Jiang, Letian and Zhao, Chen and Ghanem, Bernard},
  title     = {SMILE: Infusing Spatial and Motion Semantics in Masked Video Learning},
  booktitle = {CVPR},
  year      = {2025},
}