OnlyFlow - Obvious Research

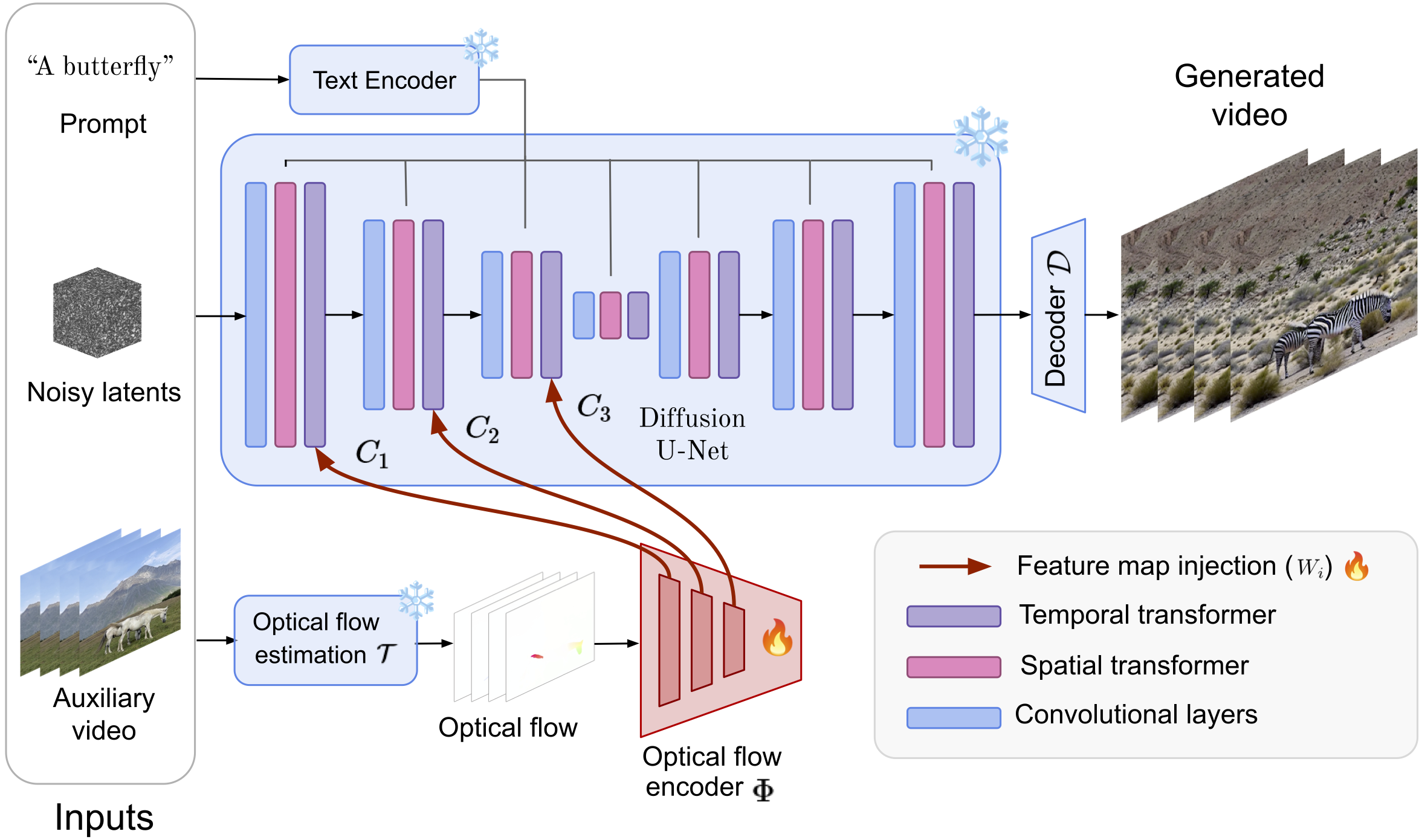

Model for OnlyFlow: Optical Flow based Motion Conditioning for Video Diffusion Models.

Project website

Code, available on GitHub

Model weight release, on HuggingFace

We release the model weights from our best training run in fp32 and fp16, as ckpt and safetensors files. The model was trained on the WebVid-10M dataset on a single node of 8 A100 GPUs for 20 hours.

Here are examples of videos created by the model. From left to right:

- input

- optical flow extracted from input

- output without OnlyFlow influence (gamma parameter set to 0)

- output with gamma = 0.5

- output with gamma = 0.75

- output with gamma = 1.

Usage for inference

You can use the Gradio interface on our HuggingFace Space. You can also use the inference script in our GitHub repository to test the released model.

Please note that the model is very sensitive to the frame rate of the input video. If you don't get the desired results, try downsampling the video to 8 fps before using it.
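As a rough illustration of the downsampling step, the sketch below picks which frames of a source clip to keep so that the result plays at about 8 fps. It is not part of the OnlyFlow repository: the function name `downsample_indices` and its parameters are hypothetical, and it assumes you already know the clip's frame count and frame rate from whatever video reader you use.

```python
def downsample_indices(num_frames: int, src_fps: float, target_fps: float = 8.0) -> list[int]:
    """Return the indices of the source frames to keep to approximate target_fps.

    Hypothetical helper for illustration only; not part of the OnlyFlow code.
    """
    if num_frames <= 0 or src_fps <= 0 or target_fps <= 0:
        raise ValueError("num_frames, src_fps and target_fps must be positive")

    # Never upsample: if the clip is already at or below the target rate, keep every frame.
    if target_fps >= src_fps:
        return list(range(num_frames))

    # Number of output frames that covers the same duration at the target rate.
    num_out = max(1, int(num_frames * target_fps / src_fps))

    # Distance, in source frames, between two consecutive kept frames.
    step = src_fps / target_fps

    # Take the source frame at or just before each ideal sampling position.
    return [min(num_frames - 1, int(i * step)) for i in range(num_out)]


if __name__ == "__main__":
    # A 2-second clip at 24 fps keeps every third frame (16 frames in total).
    print(downsample_indices(48, 24.0))
```

The returned indices can then be used to select frames from the decoded video before passing it to the model. Nearest-earlier-frame selection is the simplest choice here; it does not blend or interpolate frames.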

Next steps

We are working on improving OnlyFlow and training it for base models other than AnimateDiff.

We appreciate any help, feel free to reach out! You can contact us:

- On Twitter: @obv_research

- By mail: [email protected]

About Obvious Research

Obvious Research is an Artificial Intelligence research laboratory dedicated to creating new AI artistic tools, initiated by the artists’ trio Obvious, in partnership with La Sorbonne Université.

Model tree for obvious-research/onlyflow

Base model

stable-diffusion-v1-5/stable-diffusion-v1-5