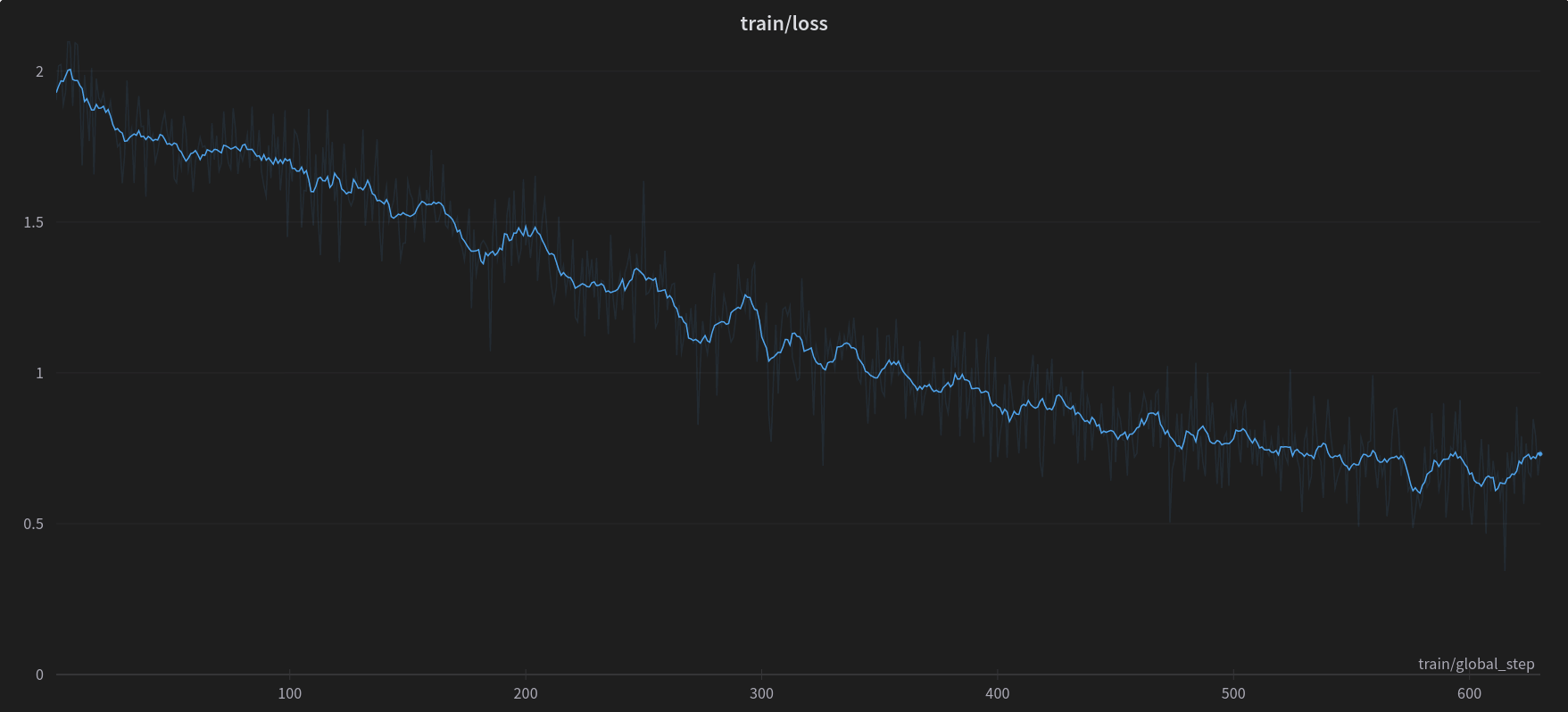

Trained on a 7900XTX.

Zeus-LLM-Trainer command to recreate:

python finetune.py --data_path "xzuyn/lima-multiturn-alpaca" --learning_rate 0.0001 --optim "paged_adamw_8bit" --train_4bit --lora_r 32 --lora_alpha 32 --prompt_template_name "alpaca_short" --num_train_epochs 15 --gradient_accumulation_steps 24 --per_device_train_batch_size 1 --logging_steps 1 --save_total_limit 20 --use_gradient_checkpointing True --save_and_eval_steps 41 --cutoff_len 4096 --val_set_size 0 --use_flash_attn True --base_model "meta-llama/Llama-2-7b-hf"

Inference Providers

NEW

This model is not currently available via any of the supported Inference Providers.

The model cannot be deployed to the HF Inference API:

The model has no library tag.