| id | author | task_category | tags | created_time | last_modified | downloads | likes | README | matched_bigbio_names | is_bionlp | model_cards | metadata | source | matched_task | __index_level_0__ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Areeb-02/mpnet-base-GISTEmbedLoss-MSEE_Evaluator-salestax-docs | Areeb-02 | sentence-similarity | ["sentence-transformers", "safetensors", "mpnet", "sentence-similarity", "feature-extraction", "generated_from_trainer", "dataset_size:238", "loss:GISTEmbedLoss", "arxiv:1908.10084", "arxiv:2402.16829", "base_model:microsoft/mpnet-base", "base_model:finetune:microsoft/mpnet-base", "model-index", "autotrain_compatible", "endpoints_compatible", "region:us"] | 2024-06-21T22:47:22Z | 2024-06-21T22:47:54+00:00 | 7 | 0 |

---

base_model: microsoft/mpnet-base

datasets: []

language: []

library_name: sentence-transformers

metrics:

- negative_mse

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer

- dataset_size:238

- loss:GISTEmbedLoss

widget:

- source_sentence: What is the timeframe for opening a physical location in the Designated

Areas to be eligible for the credit under Section 960.1?

sentences:

- '(a) A person or combined group that opens a physical location in the Designated

Areas on or after January 1, 2023 through and including December 31, 2027, shall

be allowed

a credit against that person or combined group''s Gross Receipts Tax if the person

or

combined group did not have a physical location in the City for at least three

years prior to

opening the physical location. The credit under this Section 960.1 shall be an

annual credit

for each of up to three tax years immediately following the tax year in which

the person or

combined group opened the physical location in the Designated Areas, provided

the person or

combined group maintains a physical location in the Designated Areas in the tax

year that the

credit is taken. To be eligible for the credit, the person or combined group must

take the

credit for each tax year on an original Gross Receipts Tax return filed with the

Tax Collector.'

- '5222 (Nondepository Credit Intermediation), 5223 (Activities Related

to Credit Intermediation)), 533 (Lessors of Nonfinancial Intangible Assets (except

Copyrighted

Works)), 54 (Professional, Scientific, and Technical Services) but not including

541714 (Research and

Development in Biotechnology (except Nanobiotechnology)), 55 (Management of Companies

and

Enterprises), 562 (Waste Management and Remediation Services), 61 (Educational

Services),

62 (Health Care and Social Assistance), and all business activities not otherwise

exempt and not

elsewhere subjected to a gross receipts tax rate by Sections 953.20 through 953.26

or an administrative

office tax under Section 953.8.

(c) The amount of taxable gross receipts from Category 5 Business Activities subject

to the

gross receipts tax shall be three-quarters of the amount determined under Section

956.1 plus

one-quarter of the amount determined under Section 956.2.

SEC. 953.25. GROSS RECEIPTS TAX APPLICABLE TO CATEGORY 6 BUSINESS

ACTIVITIES.

(a) The gross receipts tax rates applicable to Category 6 Business Activities

are:

(1) For tax years 2025 and 2026:

1.5% for taxable gross receipts between $0 and $1,000,000

1.5% for taxable gross receipts between $1,000,000.01 and $2,500,000

3% for taxable gross receipts between $2,500,000.01 and $25,000,000

2.352% for taxable gross receipts between $25,000,000.01 and $50,000,000

3.024% for taxable gross receipts between $50,000,000.01 and $75,000,000

3.024% for taxable gross receipts between $75,000,000.01 and $100,000,000

3.36% for taxable gross receipts between $100,000,000.01 and $150,000,000

3.36% for taxable gross receipts between $150,000,000.01 and $250,000,000

3.36% for taxable gross receipts between $250,000,000.01 and $500,000,000

3.'

- 'Except as otherwise provided in this Section 855

and Section 856 of this Article 12, and Section 76.3 of Article 2 of this Business

and Tax Regulations

Code, the annual fee for obtaining a registration certificate for the 2025-2026

registration year,

payable in advance, shall be as follows:

San Francisco Gross Receipts for the Immediately Preceding Tax Year | Annual Registration Fee

$0 to $100,000 | $41

$100,000.01 to $250,000 | $71

$250,000.01 to $500,000 | $120

$500,000.01 to $750,000 | $240

$750,000.01 to $1,000,000 | $330

$1,000,000.01 to $1,500,000 | $469

$1,500,000.01 to $2,000,000 | $656

$2,000,000.01 to $2,500,000 | $848

$2,500,000.01 to $5,000,000 | $1,414

$5,000,000.01 to $7,500,000 | $600

$7,500,000.01 to $15,000,000 | $1,500

$15,000,000.01 to $25,000,000 | $4,875

$25,000,000.01 to $50,000,000 | $15,000

$50,000,000.01 to $100,000,000 | $30,000

$100,000,000.01 to $200,000,000 | $37,500

$200,000,000.01 and over | $45,000

(d) Fee for Registration Years Beginning On or After April 1, 2026. Except as

otherwise

provided in this Section 855 and Section 856 of this Article 12, and Section 76.3

of Article 2 of this

Business and Tax Regulations Code, the annual fee for obtaining a registration

certificate for

registration years beginning on or after April 1, 2026, payable in advance, shall

be as follows:

San Francisco Gross Receipts for the

Immediately Preceding Tax Year

$0 to $100,'

- source_sentence: How is the overpaid executive administrative office tax measured

for individuals or combined groups, and what other administrative office taxes

might they also be liable for?

sentences:

- '(b) Combined gross receipts are the total worldwide gross receipts of the person

and

all related entities to the person, unless the election provided for in California

Revenue and

Taxation Code Section 25110 is in effect for the person, in which case combined

gross

receipts shall be computed consistently with the water''s edge election, as set

forth therein.

(c) Combined payroll is the total worldwide compensation paid by the person and

all

related entities to the person, unless the election provided for in California

Revenue and

Taxation Code Section 25110 is in effect for the person, in which case combined

payroll shall

be computed consistently with the water''s edge election, as set forth therein.

A person who

has no combined payroll in a tax year shall have no gross receipts under this

Section for that

tax year.

(d) Payroll in the City is the total amount paid for compensation in the City

by the

person and by all related entities to the person.

(e) Compensation paid in the City shall be determined as follows:

(1) Where compensation is paid by reason of work performed or services rendered

by

an individual wholly within the City, all of the compensation for such individual

shall be attributable to

the City.

(2) Where compensation is paid by reason of work performed or services rendered

by

an individual partly within and partly without the City, the portion of such compensation

attributable to

the City shall be determined as follows:

(A) Except as otherwise provided in this Section 956.2(e), the portion of such

compensation attributable to the City shall be the portion of such compensation

which the total number

of working hours employed within the City bears to the total number of working

hours within and

without the City.

(B) If the amount of such compensation depends on the volume of business

transacted by such individual, then the portion of such compensation attributable

to the City shall be

the portion of such compensation which the volume of business transacted by such

individual in the

City bears to the volume of business transacted by such individual within and

without the City.'

- 'If the person or combined group did not file a

return for the preceding tax year and did not request an extension under Section

6.9-4 to file that

return, the person or combined group shall be deemed to have filed a return showing

no

liability for purposes of this Section 6.9-Isubsection (c)(3)(C)(ii), and no estimated

tax payments

of that tax shall be due for the current tax year.

(D) Lessor of Residential Real Estate Exemption. Notwithstanding

any other provision in this Section 6.9-1(c)(3), a lessor of residential real

estate, as defined in

Section 954.1 of Article 12-A-1, shall not be required to make estimated tax payments

under

this Section 6.9-1(c)(3), but shall pay its full Gross Receipts Tax liability,

Homelessness Gross

Receipts Tax liability, and Overpaid Executive Gross Receipts Tax liability on

or before the

last day of February of the succeeding year, or the extended deadline in Section

6.9-4, if the

lessor''s gross receipts within the City shown on the lessor''s return for either

the current tax

year or the preceding tax year did not exceed the threshold in Section 954.1(b)

of

Article 12-A-1.

*

*

(g) Business Registration Certificate; Annual Due Date.

(1) For registration years ending on or before June 30, 2025 and the

2025-2026 registration year, except for any extensions granted under Section

6.9-4 or as

otherwise provided in this Business and Tax Regulations Code, returns and payments

of the

Business Registration Fee (Article 12) shall be filed annually and are due and

payable, and

shall be delinquent if not submitted and paid to the Tax Collector, on or before

the last day of

May preceding the registration year commencing July 1 of that year.'

- 'Article 12-A-1, shall pay an annual overpaid executive administrative office tax

if the Executive

Pay Ratio for the tax year of that person or the combined group of which it is

a part

exceeds 100:1. This overpaid executive administrative office tax shall be measured

by the

person''s total payroll expense, as defined in Section 953.8(f) of Article 12-A-1,

that is

attributable to the City. If a person is a member of a combined group, then its

tax shall be

measured by the total payroll expense of the combined group attributable to the

City. Such

person or combined group shall pay only the overpaid executive administrative

office tax, and

not the tax imposed under other subsections of this Section 3303, but a person

or combined

group may be liable for the administrative office tax imposed by Section 953.8

of

Article 12-A-1 and the homelessness administrative office tax imposed by Section

2804(d) or

Section 2804.9 of Article 28 in addition to the overpaid executive administrative

office tax

imposed by this subsection (g). Unless specified otherwise, this overpaid executive

administrative office tax shall be considered part of the Overpaid Executive Gross

Receipts

Tax for all purposes.'

- source_sentence: What are the homelessness gross receipts tax rates applicable to

Category 1 Business Activities for tax years beginning on or after January 1,

2025, as outlined in Section 2804.3 of the same code?

sentences:

- 'For purposes

of serving a notice of a deficiency determination under this Section 6.11-2, the

date that a return was

due shall be:

(1) For tax years and tax periods ending on or before December 31, 2021, and

registration years ending on or before June 30, 2023, the due date of the return

without regard to any

extensions under Section 6.9-4;

(2) For tax years and tax periods beginning on or after January 1, 2022, and

registration years beginning on or after July 1, 2023 and ending on or before

March 31, 2026, except

for the taxes for the years and periods subject to subsection (a)(3) of this Section

6.11-2, the due date of

the return including any extensions; or

(3) For the Gross Receipts Tax (Article 12-A-1) (including the tax on administrative

office business activities in Section 953.8 of Article 12-A-1), the Early Care

and Education Commercial

Rents Tax (Article 21), the Homelessness Gross Receipts Tax (Article 28) (including

the tax on

administrative office business activities in Section 2804(d) or Section 2804.9

of Article 28), the

Cannabis Business Tax (Article 30), and the Overpaid Executive Gross Receipts

Tax (Article 33)

(including the tax on administrative office business activities in Section 3303(g))

for tax years

beginning on or after January 1, 2025, and for the Business Registration Fee (Article

12) for

registration years beginning on or after April 1, 2026, November 30 of the calendar

year in which the

return for such respective tax was originally due.

(b) The notice of any determination under this Section 6.11-2 may be served

upon the

taxpayer or other affected person personally or by mail; if by mail, service shall

be:

(1) to the last known address that appears in the Tax Collector''s records,

provided there is such an address in the Tax Collector''s records, or

(2) to an address that the Tax Collector concludes, in the Tax Collector''s

discretion, is the last known address of the person(s).'

- 'If the person or combined group did not file a

return for the preceding tax year and did not request an extension under Section

6.9-4 to file that

return, the person or combined group shall be deemed to have filed a return showing

no

liability for purposes of this Section 6.9-Isubsection (c)(3)(C)(ii), and no estimated

tax payments

of that tax shall be due for the current tax year.

(D) Lessor of Residential Real Estate Exemption. Notwithstanding

any other provision in this Section 6.9-1(c)(3), a lessor of residential real

estate, as defined in

Section 954.1 of Article 12-A-1, shall not be required to make estimated tax payments

under

this Section 6.9-1(c)(3), but shall pay its full Gross Receipts Tax liability,

Homelessness Gross

Receipts Tax liability, and Overpaid Executive Gross Receipts Tax liability on

or before the

last day of February of the succeeding year, or the extended deadline in Section

6.9-4, if the

lessor''s gross receipts within the City shown on the lessor''s return for either

the current tax

year or the preceding tax year did not exceed the threshold in Section 954.1(b)

of

Article 12-A-1.

*

*

(g) Business Registration Certificate; Annual Due Date.

(1) For registration years ending on or before June 30, 2025 and the

2025-2026 registration year, except for any extensions granted under Section

6.9-4 or as

otherwise provided in this Business and Tax Regulations Code, returns and payments

of the

Business Registration Fee (Article 12) shall be filed annually and are due and

payable, and

shall be delinquent if not submitted and paid to the Tax Collector, on or before

the last day of

May preceding the registration year commencing July 1 of that year.'

- '(a) The homelessness gross receipts tax rates applicable to Category 1 Business

Activities for

tax years beginning on or after January 1, 2025 are:

0% for taxable gross receipts between $0 and $1,000,000

0% for taxable gross receipts between $1,000,000.01 and $2,500,000

0% for taxable gross receipts between $2,500,000.01 and $25,000,000

0.164% for taxable gross receipts between $25,000,000.01 and $50,000,000

0.164% for taxable gross receipts between $50,000,000.01 and $75,000,000

0.164% for taxable gross receipts between $75,000,000.01 and $100,000,000

0.164% for taxable gross receipts between $100,000,000.01 and $150,000,000

0.246% for taxable gross receipts between $150,000,000.01 and $250,000,000

0.328% for taxable gross receipts between $250,000,000.01 and $500,000,000

0.41% for taxable gross receipts between $500,000,000.01 and $1,000,000,000

0.492% for taxable gross receipts over $1,000,000,000

(b) "Category 1 Business Activities" has the same meaning as in Section 953.20(b)

of

Article 12-A-1 of this Business and Tax Regulations Code.

(c) The amount of taxable gross receipts from Category 1 Business Activities subject

to the

homelessness gross receipts tax shall be three-quarters of the amount determined

under Section 956.1

of Article 12-A-1 plus one-quarter of the amount determined under Section 956.2

of Article 12-A-1.

SEC. 2804.3. HOMELESSNESS GROSS RECEIPTS TAX APPLICABLE TO CATEGORY 2

BUSINESS ACTIVITIES.'

- source_sentence: What are the different gross receipts tax rates applicable to private

education and health services, administrative and support services, and miscellaneous

business activities as outlined in Section 953.4?

sentences:

- '(b) For only so long as and to the extent that the City is prohibited from imposing

the

Homelessness Gross Receipts Tax, any person upon whom the City is prohibited under

the

Constitution or laws of the State of California or the Constitution or laws of

the United States

from imposing the Homelessness Gross Receipts Tax shall be exempt from the

Homelessness Gross Receipts Tax.

(c) For purposes of this Article 28, gross receipts shall not include receipts

that are

excluded from gross receipts for purposes of the gross receipts tax imposed by

Article 12-A-1,

and also shall not include receipts subject to a gross receipts tax on commercial

rents

imposed as a result of a measure adopted by the voters of San Francisco in the

June 5, 2018

election.

(d) This Section 2805 shall apply to tax years ending on or before December 31,

2024.

SEC. 2805.3. EXEMPTIONS AND EXCLUSIONS.

(a) An organization that is exempt from income taxation by Chapter 4 (commencing

with

Section 23701) of Part 11 of Division 2 of the California Revenue and Taxation

Code or Subchapter F

(commencing with Section 501) of Chapter 1 of Subtitle A of the Internal Revenue

Code of 1986, as

amended, as qualified by Sections 502, 503, 504, and 508 of the Internal Revenue

Code of 1986, as

amended, shall be exempt from taxation under this Article 28, only so long as

those exemptions

continue to exist under state or federal law.

(b) For purposes of this Article 28, gross receipts as defined in Section 952.3

shall not include

receipts from business activities if, and only so long as and to the extent that,

the City is prohibited

from taxing such receipts under the Constitution or laws of the United States

or under the Constitution

or laws of the State of California.

(c) Rent Controlled Buildings Exclusion. A person subject to the homelessness

gross receipts

tax may exclude from gross receipts in any tax year 50% of the total amount received

from the rental of

real property to tenants in occupancy at any location in the City, which is subject

to limits on rent

increases pursuant to the Residential Rent Stabilization and Arbitration Ordinance,

Administrative

Code, Chapter 37, Section 37.1 et seq.'

- '10 that the

90% gross receipts threshold has been met for tax year 2023, and for tax year

2024 if the Controller

does not certify under Section 953.10 that the 95% gross receipts threshold has

been met for tax

year 2024:

0.45% (e.g. $4.50 per $1,000) for taxable gross receipts between $0 and $1,000,000

0.488% (e.g. $4.88 per $1,000) for taxable gross receipts between $1,000,000.01

and

$2,500,000

0.488% (e.g. $4.88 per $1,000) for taxable gross receipts between $2,500,000.01

and

$25,000,000

0.6% (e.g. $6 per $1,000) for taxable gross receipts over $25,000,000

(D) For tax year 2024 if the Controller certifies under Section 953.10 that the

95% gross receipts threshold has been met for tax year 2024, and for tax years

beginning on or after

January 1, 2025:

0.465% (e.g. $4.65 per $1,000) for taxable gross receipts between $0 and $1,000,000

0.504% (e.g. $5.04 per $1,000) for taxable gross receipts between $1,000,000.01

and

$2,500,000

0.504% (e.g. $5.04 per $1,000) for taxable gross receipts between $2,500,000.01

and

$25,000,000

0.62% (e.g. $6.20 per $1,000) for taxable gross receipts over $25,000,000

SEC. 953.4. GROSS RECEIPTS TAX APPLICABLE TO PRIVATE EDUCATION AND

HEALTH SERVICES; ADMINISTRATIVE AND SUPPORT SERVICES; AND

MISCELLANEOUS BUSINESS ACTIVITIES.

(a) The gross receipts tax rates applicable to the business activities of private

education and health services, administrative and support services,'

- 'year by 52, and dividing the result by the number of weeks that the employee was

employed by that

person or combined group during the tax year.

"Highest-Paid Managerial Employee" means the individual employee or officer of

a

person or combined group with managerial responsibility in a business function

who received the most

Compensation for a tax year. For purposes of determining the Highest-Paid Managerial

Employee and

the Compensation of such employee, Compensation shall not be annualized or converted

to a full-time

equivalency.

SEC. 3303. IMPOSITION OF TAX.

(a) Except as otherwise provided in this Article 33, commencing with tax years

beginning on or after January 1, 2022, for the privilege of engaging in business

in the City, the

City imposes an annual Overpaid Executive Gross Receipts Tax on each person engaging

in

business within the City where the Executive Pay Ratio for the tax year of that

person or the

combined group of which it is a part exceeds 100:1.'

- source_sentence: Based on the context information provided, what are the different

gross receipts tax rates for businesses in San Francisco for tax years 2022, 2023,

and 2024?

sentences:

- '$9.75 per $1,000) for taxable gross receipts over $25,000,000

(D) For tax year 2024 if the Controller certifies under Section 953.10 that the

95% gross receipts threshold has been met for tax year 2024, and for tax years

beginning on or after

January 1, 2025:

0.814% (e.g. $8.14 per $1,000) for taxable gross receipts between $0 and $1,000,000

0.853% (e.g. $8.53 per $1,000) for taxable gross receipts between $1,000,000.01

and

$2,500,000

0.93% (e.g. $9.30 per $1,000) for taxable gross receipts between $2,500,000.01

and

$25,000,000

1.008% (e.g. $10.08 per $1,000) for taxable gross receipts over $25,000,000

(3) For all business activities not otherwise exempt and not elsewhere

subjected to a gross receipts tax rate or an administrative office tax by this

Article 12-A-1:

(B) For tax years 2022 and, if the Controller does not certify under

Section 953.10 that the 90% gross receipts threshold has been met for tax year

2023, for tax

year 2023:

0.788% (e.g. $7.88 per $1,000) for taxable gross receipts between $0 and $1,000,000

0.825% (e.g. $8.25 per $1,000) for taxable gross receipts between $1,000,000.01

and

$2,500,000

0.9% (e.g. $9 per $1,000) for taxable gross receipts between $2,500,000.01 and

$25,000,000

0.975% (e.g. $9.75 per $1,000) for taxable gross receipts over $25,000,000

(C) For tax year 2023 if the Controller certifies under Section 953.10 that the

90% gross receipts threshold has been met for tax year 2023,'

- '(d) In no event shall the credit under this Section 960.4 reduce a person or

combined group''s

Gross Receipts Tax liability to less than $0 for any tax year. The credit under

this Section shall not be

refundable and may not be carried forward to a subsequent year.

SEC. 966. CONTROLLER REPORTS.

The Controller shall prepare reports by September 1, 2026, and September 1, 2027,

respectively, that discuss current economic conditions in the City and the performance

of the tax system

revised by the voters in the ordinance adding this Section 966.

Section 6. Article 21 of the Business and Tax Regulations Code is hereby amended

by

revising Section 2106 to read as follows:

SEC. 2106. SMALL BUSINESS EXEMPTION.

(a) For tax years ending on or before December 31, 2024, notwithstanding any

other

provision of this Article 21, a person or combined group exempt from payment of

the gross

receipts tax under Section 954.1 of Article 12-A-1, as amended from time to time,

shall also

be exempt from payment of the Early Care and Education Commercial Rents Tax.

(b) For tax years beginning on or after January 1, 2025, notwithstanding any

other provision

of this Article 21, a "small business enterprise" shall be exempt from payment

of the Early Care and

Education Commercial Rents Tax. For purposes of this subsection (b), the term

"small business

enterprise" shall mean any person or combined group whose gross receipts within

the City, determined

under Article 12-A-1, did not exceed $2,325,000, adjusted annually in accordance

with the increase in

the Consumer Price Index: All Urban Consumers for the San Francisco/Oakland/Hayward

Area for All

Items as reported by the United States Bureau of Labor Statistics, or any successor

to that index, as of

December 31 of the calendar year two years prior to the tax year, beginning with

tax year 2026, and

rounded to the nearest $10,000. This subsection (b) shall not apply to a person

or combined group

subject to a tax on administrative office business activities in Section 953.8

of Article 12-A-1.

Section 7.'

- '(1) For tax years ending on or before December 31, 2024, the overpaid executive

administrative office tax shall be calculated as follows:

(A) 0.4% of the person or combined group''s total payroll expense

attributable to the City for a tax year if the person or combined group has an

Executive Pay

Ratio for that tax year of greater than 100:1, but less than or equal to 200:1;

(B) 0.8% of the person or combined group''s total payroll expense

attributable to the City for a tax year if the person or combined group has an

Executive Pay

Ratio for that tax year of greater than 200:1, but less than or equal to 300:1;

(C) 1.2% of the person or combined group''s total payroll expense

attributable to the City for a tax year if the person or combined group has an

Executive Pay

Ratio for that tax year of greater than 300:1, but less than or equal to 400:1;

(D) 1.6% of the person or combined group''s total payroll expense

attributable to the City for a tax year if the person or combined group has an

Executive Pay

Ratio for that tax year of greater than 400:1, but less than or equal to 500:1;

(E) 2% of the person or combined group''s total payroll expense

attributable to the City for a tax year if the person or combined group has an

Executive Pay

Ratio for that tax year of greater than 500:1, but less than or equal to 600:1;

or

(F) 2.4% of the person or combined group''s total payroll expense

attributable to the City for a tax year if the person or combined group has an

Executive Pay

Ratio for that tax year of greater than 600:1.'

model-index:

- name: SentenceTransformer based on microsoft/mpnet-base

results:

- task:

type: knowledge-distillation

name: Knowledge Distillation

dataset:

name: stsb dev

type: stsb-dev

metrics:

- type: negative_mse

value: -2.4281997233629227

name: Negative Mse

---

# SentenceTransformer based on microsoft/mpnet-base

This is a [sentence-transformers](https://www.SBERT.net) model finetuned from [microsoft/mpnet-base](https://huggingface.co/microsoft/mpnet-base). It maps sentences & paragraphs to a 768-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Base model:** [microsoft/mpnet-base](https://huggingface.co/microsoft/mpnet-base) <!-- at revision 6996ce1e91bd2a9c7d7f61daec37463394f73f09 -->

- **Maximum Sequence Length:** 512 tokens

- **Output Dimensionality:** 768 dimensions

- **Similarity Function:** Cosine Similarity

<!-- - **Training Dataset:** Unknown -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->
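Because the similarity function is cosine similarity, the scores returned by `model.similarity` can be reproduced from raw embeddings by L2-normalizing each vector and taking dot products. The NumPy sketch below illustrates this; `cosine_similarity_matrix` is a hypothetical helper (not a Sentence Transformers API), and the 3-dimensional toy vectors stand in for real 768-dimensional embeddings.

```python
import numpy as np


def cosine_similarity_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Return the (len(a), len(b)) matrix of cosine similarities between rows."""
    # L2-normalize each row; the small floor avoids division by zero.
    a_norm = a / np.maximum(np.linalg.norm(a, axis=1, keepdims=True), 1e-12)
    b_norm = b / np.maximum(np.linalg.norm(b, axis=1, keepdims=True), 1e-12)
    # The dot product of unit vectors equals their cosine similarity.
    return a_norm @ b_norm.T


# Toy stand-ins for model.encode(...) output (real embeddings have 768 columns).
emb = np.array([[1.0, 0.0, 0.0],
                [1.0, 1.0, 0.0],
                [0.0, 0.0, 2.0]])
sims = cosine_similarity_matrix(emb, emb)
print(np.round(sims, 3))
```

Vectors pointing the same way score 1.0, orthogonal ones score 0.0, and vector length does not matter, which is why the third toy vector (length 2) still has a self-similarity of 1.0.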

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

)

```
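The `Pooling` module is configured with `pooling_mode_mean_tokens: True`, so each sentence embedding is the average of the MPNet token embeddings over real (non-padding) tokens. A minimal NumPy sketch of attention-masked mean pooling follows, assuming token embeddings of shape `(batch, seq_len, dim)` and a 0/1 attention mask; the function name and toy values are illustrative and not taken from the library.

```python
import numpy as np


def masked_mean_pool(token_embeddings: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors per sequence, ignoring padded positions.

    token_embeddings: (batch, seq_len, dim), e.g. dim=768 for MPNet.
    attention_mask:   (batch, seq_len), 1 for real tokens and 0 for padding.
    """
    mask = attention_mask[..., np.newaxis].astype(token_embeddings.dtype)
    summed = (token_embeddings * mask).sum(axis=1)
    # Clamp the token count so an all-padding row does not divide by zero.
    counts = np.clip(mask.sum(axis=1), 1e-9, None)
    return summed / counts


# Two toy sequences of length 3 with dim=2; the second ends in one padding token.
tokens = np.array([[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
                   [[2.0, 2.0], [4.0, 4.0], [100.0, 100.0]]])
mask = np.array([[1, 1, 1],
                 [1, 1, 0]])
pooled = masked_mean_pool(tokens, mask)
print(pooled)
```

Because padding positions are multiplied by zero and excluded from the count, the large values in the second sequence's padded slot have no effect on its pooled vector.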

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash

pip install -U sentence-transformers

```

Then you can load this model and run inference.

```python

from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub

model = SentenceTransformer("Areeb-02/mpnet-base-GISTEmbedLoss-MSEE_Evaluator-salestax-docs")

# Run inference

sentences = [

'Based on the context information provided, what are the different gross receipts tax rates for businesses in San Francisco for tax years 2022, 2023, and 2024?',

'$9.75 per $1,000) for taxable gross receipts over $25,000,000\n(D) For tax year 2024 if the Controller certifies under Section 953.10 that the\n95% gross receipts threshold has been met for tax year 2024, and for tax years beginning on or after\nJanuary 1, 2025:\n0.814% (e.g. $8.14 per $1,000) for taxable gross receipts between $0 and $1,000,000\n0.853% (e.g. $8.53 per $1,000) for taxable gross receipts between $1,000,000.01 and\n$2,500,000\n0.93% (e.g. $9.30 per $1,000) for taxable gross receipts between $2,500,000.01 and\n$25,000,000\n1.008% (e.g. $10.08 per $1,000) for taxable gross receipts over $25,000,000\n(3) For all business activities not otherwise exempt and not elsewhere\nsubjected to a gross receipts tax rate or an administrative office tax by this Article 12-A-1:\n(B) For tax years 2022 and, if the Controller does not certify under\nSection 953.10 that the 90% gross receipts threshold has been met for tax year 2023, for tax\nyear 2023:\n0.788% (e.g. $7.88 per $1,000) for taxable gross receipts between $0 and $1,000,000\n0.825% (e.g. $8.25 per $1,000) for taxable gross receipts between $1,000,000.01 and\n$2,500,000\n0.9% (e.g. $9 per $1,000) for taxable gross receipts between $2,500,000.01 and\n$25,000,000\n0.975% (e.g. $9.75 per $1,000) for taxable gross receipts over $25,000,000\n(C) For tax year 2023 if the Controller certifies under Section 953.10 that the\n90% gross receipts threshold has been met for tax year 2023,',

'(d) In no event shall the credit under this Section 960.4 reduce a person or combined group\'s\nGross Receipts Tax liability to less than $0 for any tax year. The credit under this Section shall not be\nrefundable and may not be carried forward to a subsequent year.\nSEC. 966. CONTROLLER REPORTS.\nThe Controller shall prepare reports by September 1, 2026, and September 1, 2027,\nrespectively, that discuss current economic conditions in the City and the performance of the tax system\nrevised by the voters in the ordinance adding this Section 966.\nSection 6. Article 21 of the Business and Tax Regulations Code is hereby amended by\nrevising Section 2106 to read as follows:\nSEC. 2106. SMALL BUSINESS EXEMPTION.\n(a) For tax years ending on or before December 31, 2024, notwithstanding any other\nprovision of this Article 21, a person or combined group exempt from payment of the gross\nreceipts tax under Section 954.1 of Article 12-A-1, as amended from time to time, shall also\nbe exempt from payment of the Early Care and Education Commercial Rents Tax.\n(b) For tax years beginning on or after January 1, 2025, notwithstanding any other provision\nof this Article 21, a "small business enterprise" shall be exempt from payment of the Early Care and\nEducation Commercial Rents Tax. For purposes of this subsection (b), the term "small business\nenterprise" shall mean any person or combined group whose gross receipts within the City, determined\nunder Article 12-A-1, did not exceed $2,325,000, adjusted annually in accordance with the increase in\nthe Consumer Price Index: All Urban Consumers for the San Francisco/Oakland/Hayward Area for All\nItems as reported by the United States Bureau of Labor Statistics, or any successor to that index, as of\nDecember 31 of the calendar year two years prior to the tax year, beginning with tax year 2026, and\nrounded to the nearest $10,000. This subsection (b) shall not apply to a person or combined group\nsubject to a tax on administrative office business activities in Section 953.8 of Article 12-A-1.\nSection 7.',

]
embeddings = model.encode(sentences)
print(embeddings.shape)
# [3, 768]

# Get the similarity scores for the embeddings
similarities = model.similarity(embeddings, embeddings)
print(similarities.shape)
# [3, 3]

```
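In the usage example the first sentence is a question and the other two are passages from the tax documents, so row 0 of `similarities` holds the question's score against every input, including itself. The sketch below turns such a row into a passage ranking. The helper name `rank_passages` and the toy score matrix are hypothetical stand-ins for real `model.similarity` output (converted to nested Python lists, for example with `.tolist()`).

```python
from typing import List, Tuple


def rank_passages(similarities: List[List[float]], query_index: int = 0) -> List[Tuple[int, float]]:
    """Return (passage_index, score) pairs for one query row, best match first.

    The query's own column is skipped, because a sentence is always
    maximally similar to itself.
    """
    row = similarities[query_index]
    candidates = [(j, score) for j, score in enumerate(row) if j != query_index]
    # Sort by descending similarity score
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


# Hypothetical 3x3 cosine-similarity matrix: (question, passage A, passage B)
toy_similarities = [
    [1.00, 0.72, 0.31],
    [0.72, 1.00, 0.40],
    [0.31, 0.40, 1.00],
]

for passage_index, score in rank_passages(toy_similarities):
    print(passage_index, score)
```

With a larger corpus, the passages would typically be encoded once and their embeddings reused for every new question.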

<!--

### Direct Usage (Transformers)

<details><summary>Click to see the direct usage in Transformers</summary>

</details>

-->

<!--

### Downstream Usage (Sentence Transformers)

You can finetune this model on your own dataset.

<details><summary>Click to expand</summary>

</details>

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

## Evaluation

### Metrics

#### Knowledge Distillation

* Dataset: `stsb-dev`

* Evaluated with [<code>MSEEvaluator</code>](https://sbert.net/docs/package_reference/sentence_transformer/evaluation.html#sentence_transformers.evaluation.MSEEvaluator)

| Metric           | Value       |
|:-----------------|:------------|
| **negative_mse** | **-2.4282** |
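`MSEEvaluator` measures how closely a student model's embeddings match a teacher model's embeddings. As we understand the Sentence Transformers 3.x implementation, it averages the squared element-wise differences, multiplies the result by 100, and reports the negated value as `negative_mse` so that higher is better. Under that reading, the -2.4282 above corresponds to a raw mean squared error of about 0.0243. The plain-Python sketch below reproduces only that arithmetic on hypothetical toy vectors; it is not the library code.

```python
from typing import List

Matrix = List[List[float]]


def negative_mse(student: Matrix, teacher: Matrix) -> float:
    """Negated mean squared error between two embedding matrices, scaled by 100."""
    squared_errors = [
        (s - t) ** 2
        for student_row, teacher_row in zip(student, teacher)
        for s, t in zip(student_row, teacher_row)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    return -100.0 * mse


# Hypothetical embeddings: 2 sentences, 3 dimensions each
teacher = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.4]]
student = [[0.1, 0.3, 0.3], [0.1, -0.1, 0.4]]
print(negative_mse(student, teacher))
```

A perfect match scores 0, and any disagreement pushes the score further below zero.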

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Dataset

#### Unnamed Dataset

* Size: 238 training samples

* Columns: <code>sentence1</code> and <code>sentence2</code>

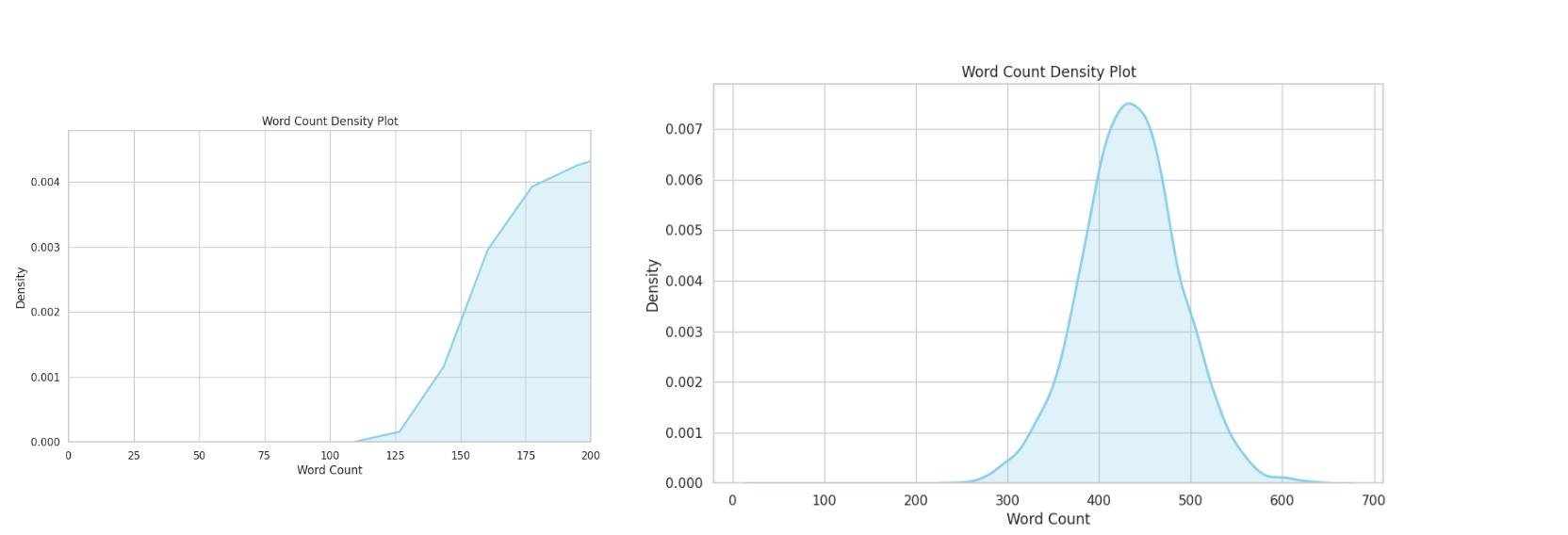

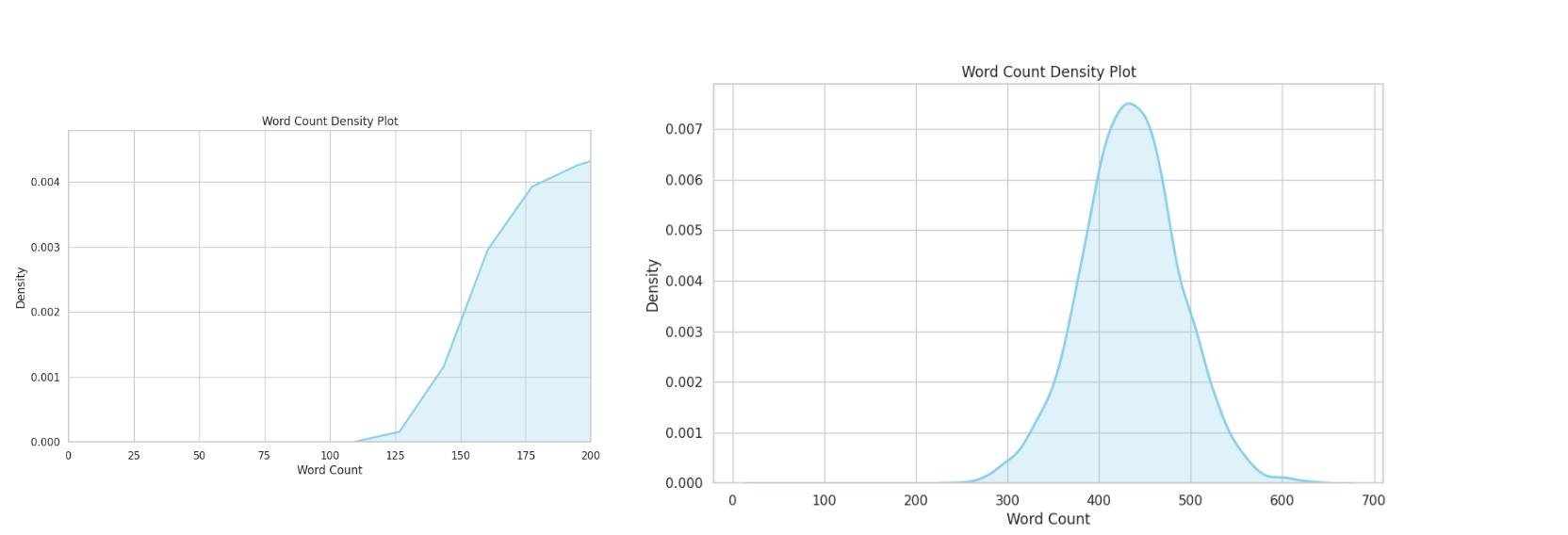

* Approximate statistics based on the first 1000 samples (with only 238 samples, this covers the whole dataset):

|         | sentence1                                                                          | sentence2                                                                           |
|:--------|:-----------------------------------------------------------------------------------|:------------------------------------------------------------------------------------|
| type    | string                                                                             | string                                                                              |
| details | <ul><li>min: 5 tokens</li><li>mean: 41.95 tokens</li><li>max: 219 tokens</li></ul> | <ul><li>min: 63 tokens</li><li>mean: 426.3 tokens</li><li>max: 512 tokens</li></ul> |
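The `sentence2` maximum of 512 tokens equals the model's Maximum Sequence Length, which suggests that at least one passage reached that cap; any text past the first 512 tokens would then be truncated before embedding. The sketch below computes min/mean/max statistics and counts capped texts from per-example token counts. The counts are hypothetical; in practice they would come from the model's tokenizer.

```python
from statistics import mean
from typing import Dict, List

MAX_SEQ_LENGTH = 512  # the model's maximum sequence length


def length_stats(token_counts: List[int], max_len: int = MAX_SEQ_LENGTH) -> Dict[str, float]:
    """Summarise token counts and count how many texts reach the length cap."""
    return {
        "min": min(token_counts),
        "mean": round(mean(token_counts), 2),
        "max": max(token_counts),
        "at_cap": sum(1 for n in token_counts if n >= max_len),
    }


# Hypothetical token counts for five passages
print(length_stats([63, 300, 512, 512, 480]))
```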

* Samples:

| sentence1 | sentence2 |
|:----------|:----------|
| <code>What types of businesses are subject to the gross receipts tax in San Francisco, and how is their San Francisco gross receipts calculated? What are the current rates for this tax, and are there any exemptions or scheduled increases?</code> | <code>The Way It Is Now<br>CHANGES TO BUSINESS TAXES<br>The City collects various business taxes on an annual basis including:<br>O<br>•<br>SAN FRANCISCO<br>FILED<br>2024 MAY 15 PM 3:10<br>DEPARTMENT OF ELECTIONS<br>A gross receipts tax that is a percentage of a business's San Francisco gross receipts.<br>Depending on business type, the City determines a business's San Francisco gross<br>receipts based on sales in San Francisco, payroll expenses for employees working there,<br>or both. Rates range from 0.053% to 1.008% and are scheduled to increase in coming<br>years. Rates depend on business type, and higher rates apply as a business generates<br>more gross receipts. For 2023, most businesses with gross receipts up to $2.19 million<br>are exempt.<br>A homelessness gross receipts tax that is an additional tax on businesses with San<br>Francisco gross receipts over $50 million. Rates range from 0.175% to 0.69%.<br>An overpaid executive gross receipts tax that is an additional tax on businesses that pay<br>their highest-paid managerial employee much higher than the median compensation they<br>pay their San Francisco employees. Rates are between 0.1% and 0.6%.<br>A business registration fee that is an additional tax. For most businesses the fee is<br>currently between $47 and $45,150, based on business type and amount of gross receipts.<br>• An administrative office tax on payroll expenses that certain large businesses pay instead<br>of these other business taxes. The combined rates in 2024 range from 3.04% to 5.44%,<br>and in 2025 are scheduled to range from 3.11% to 5.51%. Business registration fees for<br>these businesses currently range from $19,682 to $45,928.<br>State law limits the total revenue, including tax revenue, the City may spend each year. The<br>voters may approve increases to this limit for up to four years.</code> |
| <code>What is the homelessness gross receipts tax, and which businesses are required to pay it? What are the current rates for this tax, and how do they vary based on the amount of San Francisco gross receipts? Are there any exemptions or scheduled increases for this tax?</code> | <code>The Way It Is Now<br>CHANGES TO BUSINESS TAXES<br>The City collects various business taxes on an annual basis including:<br>O<br>•<br>SAN FRANCISCO<br>FILED<br>2024 MAY 15 PM 3:10<br>DEPARTMENT OF ELECTIONS<br>A gross receipts tax that is a percentage of a business's San Francisco gross receipts.<br>Depending on business type, the City determines a business's San Francisco gross<br>receipts based on sales in San Francisco, payroll expenses for employees working there,<br>or both. Rates range from 0.053% to 1.008% and are scheduled to increase in coming<br>years. Rates depend on business type, and higher rates apply as a business generates<br>more gross receipts. For 2023, most businesses with gross receipts up to $2.19 million<br>are exempt.<br>A homelessness gross receipts tax that is an additional tax on businesses with San<br>Francisco gross receipts over $50 million. Rates range from 0.175% to 0.69%.<br>An overpaid executive gross receipts tax that is an additional tax on businesses that pay<br>their highest-paid managerial employee much higher than the median compensation they<br>pay their San Francisco employees. Rates are between 0.1% and 0.6%.<br>A business registration fee that is an additional tax. For most businesses the fee is<br>currently between $47 and $45,150, based on business type and amount of gross receipts.<br>• An administrative office tax on payroll expenses that certain large businesses pay instead<br>of these other business taxes. The combined rates in 2024 range from 3.04% to 5.44%,<br>and in 2025 are scheduled to range from 3.11% to 5.51%. Business registration fees for<br>these businesses currently range from $19,682 to $45,928.<br>State law limits the total revenue, including tax revenue, the City may spend each year. The<br>voters may approve increases to this limit for up to four years.</code> |
| <code>What is the proposed measure that voters may approve to change the City's business taxes in San Francisco?</code> | <code>The<br>voters may approve increases to this limit for up to four years.<br>The Proposal<br>The proposed measure would change the City's business taxes to:<br>•<br>For the gross receipts tax:<br>○ recategorize business types, reducing the number from 14 to seven;<br>determine San Francisco gross receipts for some businesses based less on payroll<br>expenses and more on sales;<br>o change rates to between 0.1% and 3.716%; and<br>exempt most businesses with gross receipts up to $5 million (increased by<br>inflation).<br>Apply the homelessness gross receipts tax on business activities with San Francisco gross<br>receipts over $25 million, at rates between 0.162% and 1.64%.<br>Modify how the City calculates the overpaid executive gross receipts tax and who pays<br>that tax, and set rates between 0.02% and 0.129%.<br>Adjust business registration fees to between $55 and $60,000 (increased by inflation).Adjust the administrative office tax rates for certain large businesses to range from 2.97%<br>to 3.694%, and the business registration fees for these taxpayers to between $500 and<br>$35,000 (increased by inflation).<br>Make administrative and other changes to the City's business taxes.<br>The homelessness gross receipts tax would continue to fund services for people experiencing<br>homelessness and homelessness prevention. The City would use the other taxes for general<br>government purposes.<br>All these taxes would apply indefinitely until repealed.<br>This proposal would increase the City's spending limit for four years.SALITA CO<br>2024 MAY 10 PH 1:27<br>DEPARTMENT OF ELECTI<br>"Local Small Business Tax Cut Ordinance"<br>Be it ordained by the People of the City and County of San Francisco:<br>NOTE:<br>Unchanged Code text and uncodified text are in plain font.<br>Additions to Codes are in single-underline italics Times New Roman font.<br>Deletions to Codes are in strikethrough italics Times New Roman font.<br>Asterisks (* * * *) indicate the omission of unchanged Code<br>subsections or parts of tables.<br>Section 1. Title. This initiative is known and may be referred to as the "Local Small<br>Business Tax Cut Ordinance."<br>Section 2. Article 2 of the Business and Tax Regulations Code is hereby amended by<br>revising Section 76.3 to read as follows:<br>SEC. 76.3.</code> |

* Loss: [<code>GISTEmbedLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#gistembedloss) with these parameters:

```json
{'guide': SentenceTransformer(
  (0): Transformer({'max_seq_length': 512, 'do_lower_case': True}) with Transformer model: BertModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})
  (2): Normalize()
), 'temperature': 0.01}
```
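GISTEmbedLoss uses the guide model above (a BERT model with CLS pooling and normalization) to filter in-batch negatives: a candidate passage that the guide scores as more similar to the anchor than the anchor's own positive is treated as a likely false negative and masked out before the temperature-scaled cross-entropy. The sketch below is a simplified, plain-Python illustration of that idea. It assumes cosine similarity and keeps only the anchor-positive similarity block (the library version also compares anchors with anchors and positives with positives). The function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def gist_loss(
    anchors: List[Vector],
    positives: List[Vector],
    guide_anchors: List[Vector],
    guide_positives: List[Vector],
    temperature: float = 0.01,
) -> float:
    """Simplified GIST-style in-batch loss (anchor-positive similarities only)."""
    batch_size = len(anchors)
    total = 0.0
    for i in range(batch_size):
        # The guide's score for the true pair sets the masking threshold
        guide_threshold = cosine(guide_anchors[i], guide_positives[i])
        logits = []
        for j in range(batch_size):
            score = cosine(anchors[i], positives[j]) / temperature
            if j != i and cosine(guide_anchors[i], guide_positives[j]) > guide_threshold:
                score = float("-inf")  # likely false negative: drop it
            logits.append(score)
        # Cross-entropy with the true pair (column i) as the target class,
        # using a numerically stable log-sum-exp
        peak = max(logits)
        log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_sum_exp - logits[i]
    return total / batch_size


identity = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]

# The student pairs each anchor with the wrong passage and the guide disagrees: large loss
print(gist_loss(identity, swapped, identity, identity))
# The guide also rates the "wrong" passage higher, so it is masked: near-zero loss
print(gist_loss(identity, swapped, identity, swapped))
```

The low temperature of 0.01 turns a cosine gap of 1.0 into a logit gap of 100, which is why the unmasked toy loss comes out at about 100.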

### Training Hyperparameters

#### Non-Default Hyperparameters

- `eval_strategy`: steps

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `num_train_epochs`: 1

- `warmup_ratio`: 0.1
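Assuming training ran on a single device (the card does not state the device count), 238 samples at a batch size of 16 with `gradient_accumulation_steps` of 1 and `dataloader_drop_last` set to False give ceil(238 / 16) = 15 optimizer steps for the single epoch. The Hugging Face Trainer rounds `warmup_ratio` up to ceil(0.1 x 15) = 2 warmup steps, and the `linear` scheduler ramps the learning rate from 0 to the default 5e-05 (listed under All Hyperparameters) before decaying it linearly back to 0. A plain-Python sketch of that arithmetic:

```python
import math


def training_steps(num_samples: int, batch_size: int, epochs: int = 1) -> int:
    """Optimizer steps with gradient_accumulation_steps=1 and dataloader_drop_last=False."""
    return math.ceil(num_samples / batch_size) * epochs


def warmup_steps(total_steps: int, warmup_ratio: float) -> int:
    """Warmup steps derived from warmup_ratio (rounded up)."""
    return math.ceil(total_steps * warmup_ratio)


def linear_lr(step: int, total_steps: int, warmup: int, peak_lr: float) -> float:
    """Linear warmup from 0 to peak_lr, then linear decay back to 0."""
    if step < warmup:
        return peak_lr * step / max(1, warmup)
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup))


total = training_steps(238, 16)    # ceil(238 / 16) = 15
warmup = warmup_steps(total, 0.1)  # ceil(1.5) = 2
schedule = [linear_lr(step, total, warmup, 5e-05) for step in range(total + 1)]
print(total, warmup, schedule[:3], schedule[-1])
```

With so few steps, the warmup phase covers more than a tenth of all updates, and the peak learning rate is held for only a single step.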

#### All Hyperparameters

<details><summary>Click to expand</summary>

- `overwrite_output_dir`: False

- `do_predict`: False

- `eval_strategy`: steps

- `prediction_loss_only`: True

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `per_gpu_train_batch_size`: None

- `per_gpu_eval_batch_size`: None

- `gradient_accumulation_steps`: 1

- `eval_accumulation_steps`: None

- `learning_rate`: 5e-05

- `weight_decay`: 0.0

- `adam_beta1`: 0.9

- `adam_beta2`: 0.999

- `adam_epsilon`: 1e-08

- `max_grad_norm`: 1.0

- `num_train_epochs`: 1

- `max_steps`: -1

- `lr_scheduler_type`: linear

- `lr_scheduler_kwargs`: {}

- `warmup_ratio`: 0.1

- `warmup_steps`: 0

- `log_level`: passive

- `log_level_replica`: warning

- `log_on_each_node`: True

- `logging_nan_inf_filter`: True

- `save_safetensors`: True

- `save_on_each_node`: False

- `save_only_model`: False

- `restore_callback_states_from_checkpoint`: False

- `no_cuda`: False

- `use_cpu`: False

- `use_mps_device`: False

- `seed`: 42

- `data_seed`: None

- `jit_mode_eval`: False

- `use_ipex`: False

- `bf16`: False

- `fp16`: False

- `fp16_opt_level`: O1

- `half_precision_backend`: auto

- `bf16_full_eval`: False

- `fp16_full_eval`: False

- `tf32`: None

- `local_rank`: 0

- `ddp_backend`: None

- `tpu_num_cores`: None

- `tpu_metrics_debug`: False

- `debug`: []

- `dataloader_drop_last`: False

- `dataloader_num_workers`: 0

- `dataloader_prefetch_factor`: None

- `past_index`: -1

- `disable_tqdm`: False

- `remove_unused_columns`: True

- `label_names`: None

- `load_best_model_at_end`: False

- `ignore_data_skip`: False

- `fsdp`: []

- `fsdp_min_num_params`: 0

- `fsdp_config`: {'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False}

- `fsdp_transformer_layer_cls_to_wrap`: None

- `accelerator_config`: {'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None}

- `deepspeed`: None

- `label_smoothing_factor`: 0.0

- `optim`: adamw_torch

- `optim_args`: None

- `adafactor`: False

- `group_by_length`: False

- `length_column_name`: length

- `ddp_find_unused_parameters`: None

- `ddp_bucket_cap_mb`: None

- `ddp_broadcast_buffers`: False

- `dataloader_pin_memory`: True

- `dataloader_persistent_workers`: False

- `skip_memory_metrics`: True

- `use_legacy_prediction_loop`: False

- `push_to_hub`: False

- `resume_from_checkpoint`: None

- `hub_model_id`: None

- `hub_strategy`: every_save

- `hub_private_repo`: False

- `hub_always_push`: False

- `gradient_checkpointing`: False

- `gradient_checkpointing_kwargs`: None

- `include_inputs_for_metrics`: False

- `eval_do_concat_batches`: True

- `fp16_backend`: auto

- `push_to_hub_model_id`: None

- `push_to_hub_organization`: None

- `mp_parameters`:

- `auto_find_batch_size`: False

- `full_determinism`: False

- `torchdynamo`: None

- `ray_scope`: last

- `ddp_timeout`: 1800

- `torch_compile`: False

- `torch_compile_backend`: None

- `torch_compile_mode`: None

- `dispatch_batches`: None

- `split_batches`: None

- `include_tokens_per_second`: False

- `include_num_input_tokens_seen`: False

- `neftune_noise_alpha`: None

- `optim_target_modules`: None

- `batch_eval_metrics`: False

- `batch_sampler`: batch_sampler

- `multi_dataset_batch_sampler`: proportional

</details>

### Training Logs

| Epoch | Step | stsb-dev_negative_mse |
|:-----:|:----:|:---------------------:|
| 0     | 0    | -2.4282               |

### Framework Versions

- Python: 3.10.12

- Sentence Transformers: 3.0.1

- Transformers: 4.41.2

- PyTorch: 2.3.0+cu121

- Accelerate: 0.31.0

- Datasets: 2.20.0

- Tokenizers: 0.19.1

## Citation

### BibTeX

#### Sentence Transformers

```bibtex
@inproceedings{reimers-2019-sentence-bert,
    title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",
    author = "Reimers, Nils and Gurevych, Iryna",
    booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",
    month = "11",
    year = "2019",
    publisher = "Association for Computational Linguistics",
    url = "https://arxiv.org/abs/1908.10084",
}
```

#### GISTEmbedLoss

```bibtex
@misc{solatorio2024gistembed,
    title={GISTEmbed: Guided In-sample Selection of Training Negatives for Text Embedding Fine-tuning},
    author={Aivin V. Solatorio},
    year={2024},
    eprint={2402.16829},
    archivePrefix={arXiv},
    primaryClass={cs.LG}
}
```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

-->

| null |

Non_BioNLP

|

# SentenceTransformer based on microsoft/mpnet-base

This is a [sentence-transformers](https://www.SBERT.net) model finetuned from [microsoft/mpnet-base](https://huggingface.co/microsoft/mpnet-base). It maps sentences & paragraphs to a 768-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Base model:** [microsoft/mpnet-base](https://huggingface.co/microsoft/mpnet-base) <!-- at revision 6996ce1e91bd2a9c7d7f61daec37463394f73f09 -->

- **Maximum Sequence Length:** 512 tokens

- **Output Dimensionality:** 768 dimensions

- **Similarity Function:** Cosine Similarity

<!-- - **Training Dataset:** Unknown -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->
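Because the similarity function is cosine similarity, `model.similarity(embeddings, embeddings)` in the usage example returns, for every pair of inputs, the dot product of the two embeddings divided by the product of their lengths. This model's architecture ends at the `Pooling` module with no `Normalize` module, so its embeddings are not guaranteed to be unit length and the division matters. A plain-Python sketch of the pairwise matrix on hypothetical toy vectors:

```python
import math
from typing import List

Vector = List[float]


def cosine_matrix(vectors: List[Vector]) -> List[List[float]]:
    """Pairwise cosine similarities, analogous in shape to model.similarity(e, e)."""
    norms = [math.sqrt(sum(x * x for x in v)) for v in vectors]
    return [
        [
            sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
            for b, norm_b in zip(vectors, norms)
        ]
        for a, norm_a in zip(vectors, norms)
    ]


toy_embeddings = [[3.0, 4.0], [6.0, 8.0], [4.0, -3.0]]
for row in cosine_matrix(toy_embeddings):
    print([round(value, 3) for value in row])
```

The first two toy vectors point in the same direction but differ in length, and their cosine similarity is still 1.0; the third is orthogonal to both.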

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```
SentenceTransformer(
  (0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})
)
```
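The `Pooling` module is configured with `pooling_mode_mean_tokens: True`, so each sentence embedding is the average of MPNet's 768-dimensional token embeddings, with padding positions excluded through the attention mask. A minimal plain-Python sketch of masked mean pooling, using hypothetical 2-dimensional token vectors instead of real model outputs:

```python
from typing import List


def mean_pool(token_embeddings: List[List[float]], attention_mask: List[int]) -> List[float]:
    """Average the token vectors whose attention-mask entry is 1."""
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    if not kept:
        raise ValueError("attention mask selects no tokens")
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


# Three token positions; the last one is padding and is ignored
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(mean_pool(tokens, mask))
```

Without the mask, the padding vector would dominate the average, which is why the attention mask is part of the pooling step.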

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash
pip install -U sentence-transformers
```

Then you can load this model and run inference.

```python
from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub
model = SentenceTransformer("Areeb-02/mpnet-base-GISTEmbedLoss-MSEE_Evaluator-salestax-docs")
# Run inference
sentences = [

'Based on the context information provided, what are the different gross receipts tax rates for businesses in San Francisco for tax years 2022, 2023, and 2024?',

'$9.75 per $1,000) for taxable gross receipts over $25,000,000\n44SANCO\n2024 NAY LO\n(D) For tax year 2024 if the Controller certifies under Section 953.10 that the\nDEPARTMENT OF\n95% gross receipts threshold has been met for tax year 2024, and for tax years beginning on or after\nJanuary 1, 2025:\n0.814% (e.g. $8.14 per $1,000) for taxable gross receipts between $0 and $1,000,000\n0.853% (e.g. $8.53 per $1,000) for taxable gross receipts between $1,000,000.01 and\n$2,500,000\n0.93% (e.g. $9.30 per $1,000) for taxable gross receipts between $2,500,000.01 and\n$25,000,000\n1.008% (e.g. $10.08 per $1,000) for taxable gross receipts over $25,000,000\n(3) For all business activities not otherwise exempt and not elsewhere\nsubjected to a gross receipts tax rate or an administrative office tax by this Article 12-A-1:\n(B) For tax years 2022 and, if the Controller does not certify under\nSection 953.10 that the 90% gross receipts threshold has been met for tax year 2023, for tax\nyear 2023:\n0.788% (e.g. $7.88 per $1,000) for taxable gross receipts between $0 and $1,000,000\n0.825% (e.g. $8.25 per $1,000) for taxable gross receipts between $1,000,000.01 and\n$2,500,000\n0.9% (e.g. $9 per $1,000) for taxable gross receipts between $2,500,000.01 and\n$25,000,000\n0.975% (e.g. $9.75 per $1,000) for taxable gross receipts over $25,000,000\n(C) For tax year 2023 if the Controller certifies under Section 953.10 that the\n90% gross receipts threshold has been met for tax year 2023,',

'(d) In no event shall the credit under this Section 960.4 reduce a person or combined group\'s\nGross Receipts Tax liability to less than $0 for any tax year. The credit under this Section shall not be\nrefundable and may not be carried forward to a subsequent year.\nSEC. 966. CONTROLLER REPORTS.\nThe Controller shall prepare reports by September 1, 2026, and September 1, 2027,\nrespectively, that discuss current economic conditions in the City and the performance of the tax system\nrevised by the voters in the ordinance adding this Section 966.\nSection 6. Article 21 of the Business and Tax Regulations Code is hereby amended by\nrevising Section 2106 to read as follows:\nSEC. 2106. SMALL BUSINESS EXEMPTION.\n(a) For tax years ending on or before December 31, 2024, nNotwithstanding any other\nprovision of this Article 21, a person or combined group exempt from payment of the gross\nreceipts tax under Section 954.1 of Article 12-A-1, as amended from time to time, shall also\nbe exempt from payment of the Early Care and Education Commercial Rents Tax.\n79SAN\nDL W(b) For tax years beginning on or after January 1, 2025, notwithstanding any other provision\nof this Article 21, a "small business enterprise" shall be exempt from payment of the Early Care and\nEducation Commercial Rents Tax. For purposes of this subsection (b), the term "small business\nenterprise" shall mean any person or combined group whose gross receipts within the City, determined\nunder Article 12-A-1, did not exceed $2,325,000, adjusted annually in accordance with the increase in\nthe Consumer Price Index: All Urban Consumers for the San Francisco/Oakland/Hayward Area for All\nItems as reported by the United States Bureau of Labor Statistics, or any successor to that index, as of\nDecember 31 of the calendar year two years prior to the tax year, beginning with tax year 2026, and\nrounded to the nearest $10,000. This subsection (b) shall not apply to a person or combined group\nsubject to a tax on administrative office business activities in Section 953.8 of Article 12-A-1.\nSection 7.',

]
embeddings = model.encode(sentences)
print(embeddings.shape)
# [3, 768]

# Get the similarity scores for the embeddings
similarities = model.similarity(embeddings, embeddings)
print(similarities.shape)
# [3, 3]
```

<!--

### Direct Usage (Transformers)

<details><summary>Click to see the direct usage in Transformers</summary>

</details>

-->

<!--

### Downstream Usage (Sentence Transformers)

You can finetune this model on your own dataset.

<details><summary>Click to expand</summary>

</details>

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

## Evaluation

### Metrics

#### Knowledge Distillation

* Dataset: `stsb-dev`

* Evaluated with [<code>MSEEvaluator</code>](https://sbert.net/docs/package_reference/sentence_transformer/evaluation.html#sentence_transformers.evaluation.MSEEvaluator)

| Metric           | Value       |
|:-----------------|:------------|
| **negative_mse** | **-2.4282** |

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Dataset

#### Unnamed Dataset

* Size: 238 training samples

* Columns: <code>sentence1</code> and <code>sentence2</code>

* Approximate statistics based on the first 1000 samples (with only 238 samples, this covers the whole dataset):

|         | sentence1                                                                          | sentence2                                                                           |
|:--------|:-----------------------------------------------------------------------------------|:------------------------------------------------------------------------------------|
| type    | string                                                                             | string                                                                              |
| details | <ul><li>min: 5 tokens</li><li>mean: 41.95 tokens</li><li>max: 219 tokens</li></ul> | <ul><li>min: 63 tokens</li><li>mean: 426.3 tokens</li><li>max: 512 tokens</li></ul> |

* Samples:

| sentence1 | sentence2 |

|:----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>What types of businesses are subject to the gross receipts tax in San Francisco, and how is their San Francisco gross receipts calculated? What are the current rates for this tax, and are there any exemptions or scheduled increases?</code> | <code>The Way It Is Now<br>CHANGES TO BUSINESS TAXES<br>The City collects various business taxes on an annual basis including:<br>O<br>•<br>SAN FRANCISCO<br>FILED<br>2024 MAY 15 PM 3:10<br>DEPARTMENT OF ELECTIONS<br>A gross receipts tax that is a percentage of a business's San Francisco gross receipts.<br>Depending on business type, the City determines a business's San Francisco gross<br>receipts based on sales in San Francisco, payroll expenses for employees working there,<br>or both. Rates range from 0.053% to 1.008% and are scheduled to increase in coming<br>years. Rates depend on business type, and higher rates apply as a business generates<br>more gross receipts. For 2023, most businesses with gross receipts up to $2.19 million<br>are exempt.<br>A homelessness gross receipts tax that is an additional tax on businesses with San<br>Francisco gross receipts over $50 million. Rates range from 0.175% to 0.69%.<br>An overpaid executive gross receipts tax that is an additional tax on businesses that pay<br>their highest-paid managerial employee much higher than the median compensation they<br>pay their San Francisco employees. Rates are between 0.1% and 0.6%.<br>A business registration fee that is an additional tax. For most businesses the fee is<br>currently between $47 and $45,150, based on business type and amount of gross receipts.<br>• An administrative office tax on payroll expenses that certain large businesses pay instead<br>of these other business taxes. The combined rates in 2024 range from 3.04% to 5.44%,<br>and in 2025 are scheduled to range from 3.11% to 5.51%. 
Business registration fees for<br>these businesses currently range from $19,682 to $45,928.<br>State law limits the total revenue, including tax revenue, the City may spend each year. The<br>voters may approve increases to this limit for up to four years.</code> |

| <code>What is the homelessness gross receipts tax, and which businesses are required to pay it? What are the current rates for this tax, and how do they vary based on the amount of San Francisco gross receipts? Are there any exemptions or scheduled increases for this tax?</code> | <code>The Way It Is Now<br>CHANGES TO BUSINESS TAXES<br>The City collects various business taxes on an annual basis including:<br>O<br>•<br>SAN FRANCISCO<br>FILED<br>2024 MAY 15 PM 3:10<br>DEPARTMENT OF ELECTIONS<br>A gross receipts tax that is a percentage of a business's San Francisco gross receipts.<br>Depending on business type, the City determines a business's San Francisco gross<br>receipts based on sales in San Francisco, payroll expenses for employees working there,<br>or both. Rates range from 0.053% to 1.008% and are scheduled to increase in coming<br>years. Rates depend on business type, and higher rates apply as a business generates<br>more gross receipts. For 2023, most businesses with gross receipts up to $2.19 million<br>are exempt.<br>A homelessness gross receipts tax that is an additional tax on businesses with San<br>Francisco gross receipts over $50 million. Rates range from 0.175% to 0.69%.<br>An overpaid executive gross receipts tax that is an additional tax on businesses that pay<br>their highest-paid managerial employee much higher than the median compensation they<br>pay their San Francisco employees. Rates are between 0.1% and 0.6%.<br>A business registration fee that is an additional tax. For most businesses the fee is<br>currently between $47 and $45,150, based on business type and amount of gross receipts.<br>• An administrative office tax on payroll expenses that certain large businesses pay instead<br>of these other business taxes. The combined rates in 2024 range from 3.04% to 5.44%,<br>and in 2025 are scheduled to range from 3.11% to 5.51%. 
Business registration fees for<br>these businesses currently range from $19,682 to $45,928.<br>State law limits the total revenue, including tax revenue, the City may spend each year. The<br>voters may approve increases to this limit for up to four years.</code> |

| <code>What is the proposed measure that voters may approve to change the City's business taxes in San Francisco?</code> | <code>The<br>voters may approve increases to this limit for up to four years.<br>The Proposal<br>The proposed measure would change the City's business taxes to:<br>•<br>For the gross receipts tax:<br>○ recategorize business types, reducing the number from 14 to seven;<br>determine San Francisco gross receipts for some businesses based less on payroll<br>expenses and more on sales;<br>o change rates to between 0.1% and 3.716%; and<br>exempt most businesses with gross receipts up to $5 million (increased by<br>inflation).<br>Apply the homelessness gross receipts tax on business activities with San Francisco gross<br>receipts over $25 million, at rates between 0.162% and 1.64%.<br>Modify how the City calculates the overpaid executive gross receipts tax and who pays<br>that tax, and set rates between 0.02% and 0.129%.<br>Adjust business registration fees to between $55 and $60,000 (increased by inflation).Adjust the administrative office tax rates for certain large businesses to range from 2.97%<br>to 3.694%, and the business registration fees for these taxpayers to between $500 and<br>$35,000 (increased by inflation).<br>Make administrative and other changes to the City's business taxes.<br>The homelessness gross receipts tax would continue to fund services for people experiencing<br>homelessness and homelessness prevention. The City would use the other taxes for general<br>government purposes.<br>All these taxes would apply indefinitely until repealed.<br>This proposal would increase the City's spending limit for four years.SALITA CO<br>2024 MAY 10 PH 1:27<br>DEPARTMENT OF ELECTI<br>"Local Small Business Tax Cut Ordinance"<br>Be it ordained by the People of the City and County of San Francisco:<br>NOTE:<br>Unchanged Code text and uncodified text are in plain font.<br>Additions to Codes are in single-underline italics Times New Roman font.<br>Deletions to Codes are in strikethrough italics Times New Roman font.<br>Asterisks (* * * *) indicate the omission of unchanged Code<br>subsections or parts of tables.<br>Section 1. Title. This initiative is known and may be referred to as the "Local Small<br>Business Tax Cut Ordinance."<br>Section 2. Article 2 of the Business and Tax Regulations Code is hereby amended by<br>revising Section 76.3 to read as follows:<br>SEC. 76.3.</code> |

* Loss: [<code>GISTEmbedLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#gistembedloss) with these parameters:

```json
{'guide': SentenceTransformer(
  (0): Transformer({'max_seq_length': 512, 'do_lower_case': True}) with Transformer model: BertModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})
  (2): Normalize()
), 'temperature': 0.01}
```
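To make the loss configuration above more concrete, here is a simplified NumPy sketch of how a GISTEmbed-style loss scores a batch of (anchor, positive) pairs. It is our reconstruction of the idea from the GISTEmbed paper and the sentence-transformers loss, not the library's code. It assumes cosine similarity, (anchor, positive) pairs with no explicit negative column, and precomputed embeddings from both the model being trained and the frozen guide (the BERT model with CLS pooling and normalization shown above; the card does not name its checkpoint). The function names `cosine_matrix` and `gist_embed_loss` are hypothetical.

```python
import numpy as np


def cosine_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


def gist_embed_loss(anchor, positive, guide_anchor, guide_positive, temperature=0.01):
    """Simplified GISTEmbed-style loss for (anchor, positive) batches (hypothetical helper)."""
    # Similarities from the model being trained.
    ap = cosine_matrix(anchor, positive)
    aa = cosine_matrix(anchor, anchor)
    pp = cosine_matrix(positive, positive)

    # Similarities from the frozen guide model.
    g_ap = cosine_matrix(guide_anchor, guide_positive)
    g_aa = cosine_matrix(guide_anchor, guide_anchor)
    g_pp = cosine_matrix(guide_positive, guide_positive)

    # Per-row threshold: the guide's similarity between anchor i and its own positive.
    threshold = np.diag(g_ap)[:, None]

    # Any in-batch candidate the guide rates as MORE similar than the true positive is
    # treated as a likely false negative and dropped from the softmax (logit = -inf).
    ap = np.where(g_ap > threshold, -np.inf, ap)
    aa = np.where(g_aa > threshold, -np.inf, aa)
    pp = np.where(g_pp > threshold, -np.inf, pp)

    # Row i holds anchor i vs. every positive, anchor i vs. every anchor, and
    # positive i vs. every positive; the correct class is column i (the true pair).
    scores = np.concatenate([ap, aa, pp], axis=1) / temperature

    # Numerically stable log-softmax, then cross-entropy on the diagonal targets.
    shifted = scores - scores.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    idx = np.arange(anchor.shape[0])
    return float(-log_probs[idx, idx].mean())
```

With a temperature of 0.01, a cosine gap of 0.05 between two candidates becomes a logit gap of 5, so the softmax is very sharp; the guide-based masking keeps near-duplicate passages in the same batch from being pushed apart as if they were true negatives.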

### Training Hyperparameters

#### Non-Default Hyperparameters

- `eval_strategy`: steps

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `num_train_epochs`: 1

- `warmup_ratio`: 0.1

#### All Hyperparameters

<details><summary>Click to expand</summary>

- `overwrite_output_dir`: False

- `do_predict`: False

- `eval_strategy`: steps

- `prediction_loss_only`: True

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `per_gpu_train_batch_size`: None

- `per_gpu_eval_batch_size`: None

- `gradient_accumulation_steps`: 1

- `eval_accumulation_steps`: None

- `learning_rate`: 5e-05

- `weight_decay`: 0.0

- `adam_beta1`: 0.9

- `adam_beta2`: 0.999

- `adam_epsilon`: 1e-08

- `max_grad_norm`: 1.0

- `num_train_epochs`: 1

- `max_steps`: -1

- `lr_scheduler_type`: linear

- `lr_scheduler_kwargs`: {}

- `warmup_ratio`: 0.1

- `warmup_steps`: 0

- `log_level`: passive

- `log_level_replica`: warning

- `log_on_each_node`: True

- `logging_nan_inf_filter`: True

- `save_safetensors`: True

- `save_on_each_node`: False

- `save_only_model`: False

- `restore_callback_states_from_checkpoint`: False

- `no_cuda`: False

- `use_cpu`: False

- `use_mps_device`: False

- `seed`: 42

- `data_seed`: None

- `jit_mode_eval`: False

- `use_ipex`: False

- `bf16`: False

- `fp16`: False

- `fp16_opt_level`: O1

- `half_precision_backend`: auto

- `bf16_full_eval`: False

- `fp16_full_eval`: False

- `tf32`: None

- `local_rank`: 0

- `ddp_backend`: None

- `tpu_num_cores`: None

- `tpu_metrics_debug`: False

- `debug`: []

- `dataloader_drop_last`: False

- `dataloader_num_workers`: 0

- `dataloader_prefetch_factor`: None

- `past_index`: -1

- `disable_tqdm`: False

- `remove_unused_columns`: True

- `label_names`: None

- `load_best_model_at_end`: False

- `ignore_data_skip`: False

- `fsdp`: []

- `fsdp_min_num_params`: 0

- `fsdp_config`: {'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False}

- `fsdp_transformer_layer_cls_to_wrap`: None

- `accelerator_config`: {'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None}

- `deepspeed`: None

- `label_smoothing_factor`: 0.0

- `optim`: adamw_torch

- `optim_args`: None

- `adafactor`: False

- `group_by_length`: False

- `length_column_name`: length

- `ddp_find_unused_parameters`: None

- `ddp_bucket_cap_mb`: None

- `ddp_broadcast_buffers`: False

- `dataloader_pin_memory`: True

- `dataloader_persistent_workers`: False

- `skip_memory_metrics`: True

- `use_legacy_prediction_loop`: False

- `push_to_hub`: False

- `resume_from_checkpoint`: None

- `hub_model_id`: None

- `hub_strategy`: every_save

- `hub_private_repo`: False

- `hub_always_push`: False

- `gradient_checkpointing`: False

- `gradient_checkpointing_kwargs`: None

- `include_inputs_for_metrics`: False

- `eval_do_concat_batches`: True

- `fp16_backend`: auto

- `push_to_hub_model_id`: None

- `push_to_hub_organization`: None

- `mp_parameters`:

- `auto_find_batch_size`: False

- `full_determinism`: False

- `torchdynamo`: None

- `ray_scope`: last

- `ddp_timeout`: 1800

- `torch_compile`: False

- `torch_compile_backend`: None

- `torch_compile_mode`: None

- `dispatch_batches`: None

- `split_batches`: None

- `include_tokens_per_second`: False

- `include_num_input_tokens_seen`: False

- `neftune_noise_alpha`: None

- `optim_target_modules`: None

- `batch_eval_metrics`: False

- `batch_sampler`: batch_sampler

- `multi_dataset_batch_sampler`: proportional

</details>
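The scheduler settings above (`learning_rate` 5e-05, `lr_scheduler_type` linear, `warmup_ratio` 0.1, one epoch) imply a very short run. The sketch below reproduces the linear warmup-then-decay formula used by `transformers.get_linear_schedule_with_warmup`, assuming training ran on a single device with no gradient accumulation and with the 238 training pairs indicated by the `dataset_size:238` tag in the card metadata. The helper name `linear_schedule_lr` is hypothetical.

```python
import math


def linear_schedule_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate applied at 0-based optimizer step `step` (linear warmup, then linear decay)."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


# Values from the card; the single-device, 238-sample setup is an assumption.
train_samples = 238
batch_size = 16          # per_device_train_batch_size x 1 device x 1 accumulation step
num_epochs = 1
learning_rate = 5e-05
warmup_ratio = 0.1

# dataloader_drop_last is False, so the final partial batch still counts as a step.
steps_per_epoch = math.ceil(train_samples / batch_size)
total_steps = steps_per_epoch * num_epochs
# transformers rounds warmup_ratio * total_steps up to a whole number of steps.
warmup_steps = math.ceil(total_steps * warmup_ratio)

schedule = [linear_schedule_lr(s, learning_rate, warmup_steps, total_steps) for s in range(total_steps)]
```

Under these assumptions the run has 15 optimizer steps, 2 of them warmup: the first update uses a learning rate of 0, the peak of 5e-05 is reached at step 2, and the rate then falls linearly to 5e-05/13 on the last step.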

### Training Logs

| Epoch | Step | stsb-dev_negative_mse |
|:-----:|:----:|:---------------------:|
| 0     | 0    | -2.4282               |
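The logged metric comes from an MSE-style evaluator named `stsb-dev`. As we understand sentence-transformers' `MSEEvaluator`, it compares the model's embeddings of target sentences against reference (teacher) embeddings of source sentences, multiplies the mean squared error by 100, and reports its negation as `negative_mse` so that higher is better. The card does not say which sentences or reference embeddings were used. The sketch below shows only the arithmetic; `negative_mse` is a hypothetical helper, not the library function.

```python
import numpy as np


def negative_mse(source_embeddings: np.ndarray, target_embeddings: np.ndarray) -> float:
    """Negated element-wise mean squared error, scaled by 100, between aligned embedding matrices."""
    mse = float(((source_embeddings - target_embeddings) ** 2).mean()) * 100
    return -mse
```

Read this way, the logged value of -2.4282 corresponds to an average squared difference of about 0.0243 per embedding dimension.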

### Framework Versions

- Python: 3.10.12

- Sentence Transformers: 3.0.1

- Transformers: 4.41.2

- PyTorch: 2.3.0+cu121

- Accelerate: 0.31.0

- Datasets: 2.20.0

- Tokenizers: 0.19.1
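When trying to reproduce these results, it can help to compare your environment against the versions listed above. The following sketch uses the standard-library `importlib.metadata` and assumes the usual PyPI distribution names (for example `torch` for PyTorch); `PINNED` and `version_mismatches` are hypothetical names.

```python
from __future__ import annotations

from importlib.metadata import PackageNotFoundError, version

# Versions listed under "Framework Versions" (distribution names are assumed PyPI names).
PINNED = {
    "sentence-transformers": "3.0.1",
    "transformers": "4.41.2",
    "torch": "2.3.0+cu121",
    "accelerate": "0.31.0",
    "datasets": "2.20.0",
    "tokenizers": "0.19.1",
}


def version_mismatches(pinned: dict[str, str]) -> dict[str, tuple[str, str | None]]:
    """Return {package: (expected, installed_or_None)} for every package that differs."""
    mismatches = {}
    for package, expected in pinned.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != expected:
            mismatches[package] = (expected, installed)
    return mismatches
```

Calling `version_mismatches(PINNED)` returns an empty dict only for an exact match. Note that the `+cu121` suffix marks a CUDA 12.1 build of PyTorch, so a CPU-only install of the same release will still be reported as a mismatch.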

## Citation

### BibTeX

#### Sentence Transformers

```bibtex
@inproceedings{reimers-2019-sentence-bert,
    title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",
    author = "Reimers, Nils and Gurevych, Iryna",
    booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",
    month = "11",
    year = "2019",
    publisher = "Association for Computational Linguistics",
    url = "https://arxiv.org/abs/1908.10084",
}
```

#### GISTEmbedLoss

```bibtex
@misc{solatorio2024gistembed,
    title={GISTEmbed: Guided In-sample Selection of Training Negatives for Text Embedding Fine-tuning},
    author={Aivin V. Solatorio},
    year={2024},
    eprint={2402.16829},
    archivePrefix={arXiv},
    primaryClass={cs.LG}
}
```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

-->

|