id

stringlengths 6

113

| author

stringlengths 2

36

| task_category

stringclasses 42

values | tags

listlengths 1

4.05k

| created_time

timestamp[ns, tz=UTC]date 2022-03-02 23:29:04

2025-04-10 08:38:38

| last_modified

stringdate 2020-05-14 13:13:12

2025-04-19 04:15:39

| downloads

int64 0

118M

| likes

int64 0

4.86k

| README

stringlengths 30

1.01M

| matched_bigbio_names

listlengths 1

8

⌀ | is_bionlp

stringclasses 3

values | model_cards

stringlengths 0

1M

| metadata

stringlengths 2

698k

| source

stringclasses 2

values | matched_task

listlengths 1

10

⌀ | __index_level_0__

int64 0

46.9k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Tnt3o5/tnt_v5_lega_new_tokens

|

Tnt3o5

|

sentence-similarity

|

[

"sentence-transformers",

"safetensors",

"roberta",

"sentence-similarity",

"feature-extraction",

"generated_from_trainer",

"dataset_size:101442",

"loss:MatryoshkaLoss",

"loss:MultipleNegativesRankingLoss",

"arxiv:1908.10084",

"arxiv:2205.13147",

"arxiv:1705.00652",

"base_model:Tnt3o5/tnt_v4_lega_new_tokens",

"base_model:finetune:Tnt3o5/tnt_v4_lega_new_tokens",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | 2024-11-11T21:55:48Z |

2024-11-11T21:56:06+00:00

| 4 | 0 |

---

base_model: Tnt3o5/tnt_v4_lega_new_tokens

library_name: sentence-transformers

metrics:

- cosine_accuracy@1

- cosine_accuracy@3

- cosine_accuracy@5

- cosine_accuracy@10

- cosine_precision@1

- cosine_precision@3

- cosine_precision@5

- cosine_precision@10

- cosine_recall@1

- cosine_recall@3

- cosine_recall@5

- cosine_recall@10

- cosine_ndcg@10

- cosine_mrr@10

- cosine_map@100

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer

- dataset_size:101442

- loss:MatryoshkaLoss

- loss:MultipleNegativesRankingLoss

widget:

- source_sentence: Ai có quyền điều_chỉnh Mệnh_lệnh vận_chuyển vật_liệu nổ công_nghiệp

trong doanh_nghiệp Quân_đội ?

sentences:

- 'Quyền đăng_ký sáng_chế , kiểu_dáng công_nghiệp , thiết_kế bố_trí Tổ_chức , cá_nhân

sau đây có quyền đăng_ký sáng_chế , kiểu_dáng công_nghiệp , thiết_kế bố_trí :

Tác giả_tạo ra sáng_chế , kiểu_dáng công_nghiệp , thiết_kế bố_trí bằng công_sức

và chi_phí của mình ; Tổ_chức , cá_nhân đầu_tư kinh_phí , phương_tiện vật_chất

cho tác_giả dưới hình_thức giao việc , thuê việc , tổ_chức , cá_nhân được giao

quản_lý nguồn gen cung_cấp nguồn gen , tri_thức truyền_thống về nguồn gen theo

hợp_đồng tiếp_cận nguồn gen và chia_sẻ lợi_ích , trừ trường_hợp các bên có thỏa_thuận

khác hoặc trường_hợp quy_định tại Điều_86a của Luật này . Trường_hợp nhiều tổ_chức

, cá_nhân cùng nhau tạo ra hoặc đầu_tư để tạo ra sáng_chế , kiểu_dáng công_nghiệp

, thiết_kế bố_trí thì các tổ_chức , cá_nhân đó đều có quyền đăng_ký và quyền đăng_ký

đó chỉ được thực_hiện nếu được tất_cả các tổ_chức , cá_nhân đó đồng_ý . Tổ_chức

, cá_nhân có quyền đăng_ký quy_định tại Điều này có quyền chuyển_giao quyền đăng_ký

cho tổ_chức , cá_nhân khác dưới hình_thức hợp_đồng bằng văn_bản , để thừa_kế hoặc

kế_thừa theo quy_định của pháp_luật , kể_cả trường_hợp đã nộp đơn đăng_ký .'

- 'Nhiệm_vụ cụ_thể của các thành_viên Hội_đồng Ngoài việc thực_hiện các nhiệm_vụ

quy_định tại Điều_5 của Quy_chế này , Thành_viên Hội_đồng còn có nhiệm_vụ cụ_thể

sau đây : Thành_viên Hội_đồng là Lãnh_đạo Vụ Pháp_chế có nhiệm_vụ giúp Chủ_tịch

, Phó Chủ_tịch Hội_đồng , Hội_đồng , điều_hành các công_việc thường_xuyên của

Hội_đồng ; trực_tiếp lãnh_đạo Tổ Thường_trực ; giải_quyết công_việc đột_xuất của

Hội_đồng khi cả Chủ_tịch và Phó Chủ_tịch Hội đồng_đều đi vắng . Thành_viên Hội_đồng

là Lãnh_đạo Vụ An_toàn giao_thông có nhiệm_vụ trực_tiếp theo_dõi , đôn_đốc , kiểm_tra

và phối_hợp với thủ_trưởng các cơ_quan , đơn_vị thuộc Bộ , Thành_viên Hội_đồng

là Lãnh_đạo Văn_phòng Ủy_ban ATGTQG , Giám_đốc Sở GTVT , Chủ_tịch Tập_đoàn VINASHIN

, Tổng giám_đốc các Tổng Công_ty : Hàng_hải Việt_Nam , Đường_sắt Việt_Nam , Hàng_không

Việt_Nam chỉ_đạo công_tác tuyên_truyền PBGDPL về trật_tự , an_toàn giao_thông

.'

- Cấp , điều_chỉnh , thu_hồi và tạm ngừng cấp_Mệnh lệnh vận_chuyển vật_liệu nổ công_nghiệp

, tiền chất thuốc_nổ Tổng_Tham_mưu_trưởng cấp , điều_chỉnh , thu_hồi hoặc ủy_quyền

cho người chỉ_huy cơ_quan , đơn_vị thuộc quyền dưới một cấp cấp , điều_chỉnh ,

thu_hồi Mệnh_lệnh vận_chuyển vật_liệu nổ công_nghiệp , tiền chất thuốc_nổ cho

cá 5 doanh_nghiệp trực_thuộc Bộ Quốc_phòng và các doanh_nghiệp cổ_phần có vốn

nhà_nước do Bộ Quốc_phòng làm đại_diện chủ sở_hữu . Đối_với trường_hợp đột_xuất

khác không có trong kế_hoạch được Tổng_Tham_mưu_trưởng phê_duyệt như quy_định

tại Điều_5 Thông_tư này , cơ_quan , đơn_vị , doanh_nghiệp cấp dưới báo_cáo cơ_quan

, đơn_vị , doanh_nghiệp trực_thuộc Bộ Quốc_phòng đề_nghị Tổng_Tham_mưu_trưởng

cấp_Mệnh lệnh vận_chuyển vật_liệu nổ công_nghiệp , tiền chất thuốc_nổ . Người

chỉ_huy cơ_quan , đơn_vị ( không phải doanh nghiệ trực_thuộc Bộ Quốc_phòng căn_cứ

vào kế_hoạch được Tổng_Tham_mưu_trưởng phê_duyệt , thực_hiện hoặc ủy_quyền cho

người chỉ_huy cơ_quan , đơn_vị thuộc quyền dưới một cấp cấp , điều_chỉnh , thu_hồi

Mệnh_lệnh vận_chuyển vật_liệu nổ công_nghiệp , tiền chất thuốc_nổ cho đối_tượng

thuộc phạm_vi quản_lý .

- source_sentence: Ai có quyền quyết_định phong quân_hàm Đại_tá đối_với sĩ_quan Quân_đội

giữ chức_vụ Chính_ủy Lữ_đoàn ?

sentences:

- 'Thẩm_quyền quyết_định đối_với sĩ_quan Thẩm_quyền bổ_nhiệm , miễn_nhiệm , cách_chức

, phong , thăng , giáng , tước quân_hàm đối_với sĩ_quan được quy_định như sau

: Chủ_tịch_nước bổ_nhiệm , miễn_nhiệm , cách_chức Tổng_Tham_mưu_trưởng , Chủ_nhiệm

Tổng_Cục_Chính_trị ; phong , thăng , giáng , tước quân_hàm Cấp tướng , Chuẩn Đô_đốc

, Phó Đô_đốc , Đô_đốc Hải_quân ; Thủ_tướng_Chính_phủ bổ_nhiệm , miễn_nhiệm , cách_chức

Thứ_trưởng ; Phó_Tổng_Tham_mưu_trưởng , Phó Chủ_nhiệm Tổng_Cục_Chính_trị ; Giám_đốc

, Chính_ủy Học_viện Quốc_phòng ; Chủ_nhiệm Tổng_cục , Tổng cục_trưởng , Chính_ủy

Tổng_cục ; Tư_lệnh , Chính_ủy Quân_khu ; Tư_lệnh , Chính_ủy Quân_chủng ; Tư_lệnh

, Chính_ủy Bộ_đội Biên_phòng ; Tư_lệnh , Chính_ủy Cảnh_sát biển Việt_Nam ; Trưởng_Ban

Cơ_yếu Chính_phủ và các chức_vụ khác theo quy_định của Cấp có thẩm_quyền ; Bộ_trưởng_Bộ_Quốc_phòng

bổ_nhiệm , miễn_nhiệm , cách_chức các chức_vụ và phong , thăng , giáng , tước

các Cấp_bậc quân_hàm còn lại và nâng lương sĩ_quan ; Việc bổ_nhiệm , miễn_nhiệm

, cách_chức các chức_vụ thuộc ngành Kiểm_sát , Toà_án , Thi_hành án trong quân_đội

được thực_hiện theo quy_định của pháp_luật . Cấp có thẩm_quyền quyết_định bổ_nhiệm

đến chức_vụ nào thì có quyền miễn_nhiệm , cách_chức , giáng chức , quyết_định

kéo_dài thời_hạn phục_vụ tại_ngũ , điều_động , biệt_phái , giao chức_vụ thấp hơn

, cho thôi phục_vụ tại_ngũ , chuyển ngạch và giải ngạch sĩ_quan dự_bị đến chức_vụ

đó .'

- 'Nhiệm_vụ , quyền_hạn của Tổng Giám_đốc Trình Hội_đồng thành_viên VNPT để Hội_đồng

thành_viên Trình cơ_quan nhà_nước có thẩm_quyền quyết_định hoặc phê_duyệt các

nội_dung thuộc quyền của chủ sở_hữu đối_với VNPT theo quy_định của Điều_lệ này

. Trình Hội_đồng thành_viên VNPT xem_xét , quyết_định các nội_dung thuộc thẩm_quyền

của Hội_đồng thành_viên VNPT. Ban_hành quy_chế quản_lý nội_bộ sau khi Hội_đồng

thành_viên thông_qua . Theo phân_cấp hoặc ủy_quyền theo quy_định của Điều_lệ này

, Quy_chế_tài_chính , các quy_chế quản_lý nội_bộ của VNPT và các quy_định khác

của pháp_luật , Tổng Giám_đốc quyết_định : Các dự_án đầu_tư ; hợp_đồng mua , bán

tài_sản . Các hợp_đồng vay , thuê , cho thuê và hợp_đồng khác . Phương_án sử_dụng

vốn , tài_sản của VNPT để góp vốn , mua cổ_phần của các doanh_nghiệp . Ban_hành

các quy_định , quy Trình nội_bộ phục_vụ công_tác quản_lý , Điều_hành sản_xuất

kinh_doanh của VNPT. Quyết_định thành_lập , giải_thể , tổ_chức lại các đơn_vị

kinh_tế hạch_toán phụ_thuộc đơn_vị trực_thuộc của VNPT.'

- 'Thẩm_quyền quyết_định đối_với sĩ_quan Thẩm_quyền bổ_nhiệm , miễn_nhiệm , cách_chức

, phong , thăng , giáng , tước quân_hàm đối_với sĩ_quan được quy_định như sau

: Chủ_tịch_nước bổ_nhiệm , miễn_nhiệm , cách_chức Tổng_Tham_mưu_trưởng , Chủ_nhiệm

Tổng_Cục_Chính_trị ; phong , thăng , giáng , tước quân_hàm Cấp tướng , Chuẩn Đô_đốc

, Phó Đô_đốc , Đô_đốc Hải_quân ; Thủ_tướng_Chính_phủ bổ_nhiệm , miễn_nhiệm , cách_chức

Thứ_trưởng ; Phó_Tổng_Tham_mưu_trưởng , Phó Chủ_nhiệm Tổng_Cục_Chính_trị ; Giám_đốc

, Chính_ủy Học_viện Quốc_phòng ; Chủ_nhiệm Tổng_cục , Tổng cục_trưởng , Chính_ủy

Tổng_cục ; Tư_lệnh , Chính_ủy Quân_khu ; Tư_lệnh , Chính_ủy Quân_chủng ; Tư_lệnh

, Chính_ủy Bộ_đội Biên_phòng ; Tư_lệnh , Chính_ủy Cảnh_sát biển Việt_Nam ; Trưởng_Ban

Cơ_yếu Chính_phủ và các chức_vụ khác theo quy_định của Cấp có thẩm_quyền ; Bộ_trưởng_Bộ_Quốc_phòng

bổ_nhiệm , miễn_nhiệm , cách_chức các chức_vụ và phong , thăng , giáng , tước

các Cấp_bậc quân_hàm còn lại và nâng lương sĩ_quan ; Việc bổ_nhiệm , miễn_nhiệm

, cách_chức các chức_vụ thuộc ngành Kiểm_sát , Toà_án , Thi_hành án trong quân_đội

được thực_hiện theo quy_định của pháp_luật . Cấp có thẩm_quyền quyết_định bổ_nhiệm

đến chức_vụ nào thì có quyền miễn_nhiệm , cách_chức , giáng chức , quyết_định

kéo_dài thời_hạn phục_vụ tại_ngũ , điều_động , biệt_phái , giao chức_vụ thấp hơn

, cho thôi phục_vụ tại_ngũ , chuyển ngạch và giải ngạch sĩ_quan dự_bị đến chức_vụ

đó .'

- source_sentence: Ai có quyền quyết_định thành_lập Hội_đồng Giám_định y_khoa cấp

tỉnh ? Hội_đồng có tư_cách pháp_nhân không ?

sentences:

- Thẩm_quyền thành_lập Hội_đồng giám_định y_khoa các cấp Hội_đồng giám_định y_khoa

cấp tỉnh do cơ_quan chuyên_môn thuộc Ủy_ban_nhân_dân tỉnh quyết_định thành_lập

. Hội_đồng giám_định y_khoa cấp trung_ương do Bộ_Y_tế quyết_định thành_lập . Bộ

Quốc_phòng , Bộ_Công_An , Bộ_Giao_thông_Vận_tải căn_cứ quy_định của Thông_tư này

để quyết_định thành_lập Hội_đồng giám_định y_khoa các Bộ theo quy_định tại điểm_b

Khoản_2 Điều_161 Nghị_định số 131/2021/NĐCP.

- Thẩm_quyền phong , thăng , giáng , tước cấp_bậc hàm , nâng lương sĩ_quan , hạ

sĩ_quan , chiến_sĩ ; bổ_nhiệm , miễn_nhiệm , cách_chức , giáng chức các chức_vụ

; bổ_nhiệm , miễn_nhiệm chức_danh trong Công_an nhân_dân Chủ_tịch_nước phong ,

thăng cấp_bậc hàm_cấp tướng đối_với sĩ_quan Công_an nhân_dân . Thủ_tướng_Chính_phủ

bổ_nhiệm chức_vụ Thứ_trưởng Bộ_Công_An ; quyết_định nâng lương cấp_bậc hàm Đại_tướng

, Thượng_tướng . Bộ_trưởng Bộ_Công_An quyết_định nâng lương cấp_bậc hàm Trung_tướng

, Thiếu_tướng ; quy_định việc phong , thăng , nâng lương các cấp_bậc hàm , bổ_nhiệm

các chức_vụ , chức_danh còn lại trong Công_an nhân_dân . Người có thẩm_quyền phong

, thăng cấp_bậc hàm nào thì có thẩm_quyền giáng , tước cấp_bậc hàm đó ; mỗi lần

chỉ được thăng , giáng 01 cấp_bậc hàm , trừ trường_hợp đặc_biệt mới xét thăng

, giáng nhiều cấp_bậc hàm . Người có thẩm_quyền bổ_nhiệm chức_vụ nào thì có thẩm_quyền

miễn_nhiệm , cách_chức , giáng chức đối_với chức_vụ đó . Người có thẩm_quyền bổ_nhiệm

chức_danh nào thì có thẩm_quyền miễn_nhiệm đối_với chức_danh đó .

- Thẩm_quyền duyệt kế_hoạch Đại_hội Đoàn các cấp Ban Thường_vụ Đoàn cấp trên trực_tiếp

có trách_nhiệm và thẩm_quyền duyệt kế_hoạch Đại_hội Đoàn các đơn_vị trực_thuộc

. Ban Bí_thư Trung_ương Đoàn duyệt kế_hoạch Đại_hội Đoàn cấp tỉnh .

- source_sentence: Ai có quyền ký hợp_đồng cộng tác_viên với người đáp_ứng đủ tiêu_chuẩn

có nguyện_vọng làm Cộng tác_viên pháp điển ?

sentences:

- 'Thẩm_quyền lập biên_bản_vi_phạm hành_chính trong lĩnh_vực Kiểm_toán_Nhà_nước_Người

có thẩm_quyền lập biên_bản_vi_phạm hành_chính trong lĩnh_vực Kiểm_toán_Nhà_nước

quy_định tại Điều_15 của Pháp_lệnh số { 04 / 2023 / UBTVQH15 , } bao_gồm : Kiểm

toán_viên nhà_nước ; Tổ_trưởng tổ kiểm_toán ; Phó trưởng_đoàn kiểm_toán ; Trưởng_đoàn

kiểm_toán ; đ ) Kiểm toán_trưởng . Trường_hợp người đang thi_hành nhiệm_vụ kiểm_toán

, kiểm_tra thực_hiện kết_luận , kiến_nghị kiểm_toán , nhiệm_vụ tiếp_nhận báo_cáo

cáo định_kỳ hoặc nhiệm_vụ khác mà không phải là người có thẩm_quyền lập biên_bản_vi_phạm

hành_chính , nếu phát_hiện_hành_vi vi_phạm hành_chính trong lĩnh_vực Kiểm_toán_Nhà_nước

thì phải lập biên_bản làm_việc để ghi_nhận sự_việc và chuyển ngay biên_bản làm_việc

đến người có thẩm_quyền để lập biên_bản_vi_phạm hành_chính theo quy_định .'

- '" Điều Đăng_ký_kết_hôn Việc kết_hôn phải được đăng_ký và do cơ_quan nhà_nước

có thẩm_Quyền thực_hiện theo quy_định của Luật này và pháp Luật về hộ_tịch . Việc

kết_hôn không được đăng_ký theo quy_định tại khoản này thì không có giá_trị pháp_lý

. Vợ_chồng đã ly_hôn muốn xác_lập lại quan_hệ vợ_chồng thì phải đăng_ký kết_hôn

. Điều Giải_quyết hậu_quả của việc nam , nữ chung sống với nhau như vợ_chồng mà

không đăng_ký kết_hôn Nam , nữ có đủ điều_kiện kết_hôn theo quy_định của Luật

này chung sống với nhau như vợ_chồng mà không đăng_ký kết_hôn thì không làm phát_sinh

Quyền , nghĩa_vụ giữa vợ và chồng . Quyền , nghĩa_vụ đối_với con , tài_sản , nghĩa_vụ

và hợp_đồng giữa các bên được giải_quyết theo quy_định tại Điều_15 và Điều_16

của Luật này . Trong trường_hợp nam , nữ chung sống với nhau như vợ_chồng theo

quy_định tại Khoản 1_Điều này nhưng sau đó thực_hiện việc đăng_ký kết_hôn theo

quy_định của pháp Luật thì quan_hệ hôn_nhân được xác_lập từ thời điểm đăng_ký

kết_hôn . "'

- Thẩm_quyền , trách_nhiệm của các đơn_vị thuộc Bộ_Tư_pháp trong việc quản_lý ,

sử_dụng Cộng tác_viên Các đơn_vị thuộc Bộ_Tư_pháp Thủ_trưởng đơn_vị thực_hiện

pháp điển có quyền ký hợp_đồng cộng_tác với người đáp_ứng đủ tiêu_chuẩn quy_định

tại Điều_2 Quy_chế này , có nguyện_vọng làm Cộng tác_viên theo nhu_cầu thực_tế

và phạm_vi , tính_chất công_việc thực_hiện pháp điển của đơn_vị ; thông_báo cho

Cục Kiểm_tra văn_bản quy_phạm pháp_luật về việc ký hợp_đồng thuê Cộng tác_viên

và tình_hình thực_hiện công_việc của Cộng tác_viên . Đơn_vị thực_hiện pháp điển

không được sử_dụng cán_bộ , công_chức , viên_chức thuộc biên_chế của đơn_vị làm

Cộng tác_viên với đơn_vị mình . Thủ_trưởng đơn_vị thuộc Bộ_Tư_pháp thực_hiện pháp

điển có_thể tham_khảo Danh_sách nguồn Cộng tác_viên do Cục Kiểm_tra văn_bản quy_phạm

pháp_luật lập để ký hợp_đồng thuê Cộng tác_viên thực_hiện công_tác pháp điển thuộc

thẩm_quyền , trách_nhiệm của đơn_vị mình .

- source_sentence: Ai có quyền_hủy bỏ kết_quả bầu_cử và quyết_định bầu_cử lại đại_biểu

Quốc_hội ?

sentences:

- '" Điều Thẩm_quyền quyết_định tạm hoãn gọi nhập_ngũ , miễn gọi nhập_ngũ và công_nhận

hoàn_thành nghĩa_vụ quân_sự tại_ngũ Chủ_tịch Ủy_ban_nhân_dân cấp huyện quyết_định

tạm hoãn gọi nhập_ngũ và miễn gọi nhập_ngũ đối_với công_dân quy_định tại Điều_41

của Luật này . Chỉ huy_trưởng Ban chỉ_huy quân_sự cấp huyện quyết_định công_nhận

hoàn_thành nghĩa_vụ quân_sự tại_ngũ đối_với công_dân quy_định tại Khoản_4 Điều_4

của Luật này . "'

- Cơ_cấu tổ_chức Tổng cục_trưởng Tổng_cục Hải_quan quy_định nhiệm_vụ và quyền_hạn

của các Phòng , Đội , Hải_Đội thuộc và trực_thuộc Cục Điều_tra chống buôn_lậu

.

- Hủy_bỏ kết_quả bầu_cử và quyết_định bầu_cử lại Hội_đồng_Bầu_cử_Quốc_gia tự mình

hoặc theo đề_nghị của Ủy_ban_Thường_vụ_Quốc_hội , Chính_phủ , Ủy_ban trung_ương

Mặt_trận_Tổ_quốc Việt_Nam , Ủy_ban bầu_cử ở tỉnh Hủy_bỏ kết_quả bầu_cử ở khu_vực

bỏ_phiếu , đơn_vị bầu_cử có vi_phạm_pháp_luật nghiêm_trọng và quyết_định ngày

bầu_cử lại ở khu_vực bỏ_phiếu , đơn_vị bầu_cử đó . Trong trường_hợp bầu_cử lại

thì ngày bầu_cử được tiến_hành chậm nhất là 15 ngày sau ngày bầu_cử đầu_tiên .

Trong cuộc bầu_cử lại , cử_tri chỉ chọn bầu trong danh_sách những người ứng_cử

tại cuộc bầu_cử đầu_tiên .

model-index:

- name: SentenceTransformer based on Tnt3o5/tnt_v4_lega_new_tokens

results:

- task:

type: information-retrieval

name: Information Retrieval

dataset:

name: dim 256

type: dim_256

metrics:

- type: cosine_accuracy@1

value: 0.4254

name: Cosine Accuracy@1

- type: cosine_accuracy@3

value: 0.6052

name: Cosine Accuracy@3

- type: cosine_accuracy@5

value: 0.6636

name: Cosine Accuracy@5

- type: cosine_accuracy@10

value: 0.7248

name: Cosine Accuracy@10

- type: cosine_precision@1

value: 0.4254

name: Cosine Precision@1

- type: cosine_precision@3

value: 0.20706666666666665

name: Cosine Precision@3

- type: cosine_precision@5

value: 0.13752

name: Cosine Precision@5

- type: cosine_precision@10

value: 0.07594

name: Cosine Precision@10

- type: cosine_recall@1

value: 0.4051

name: Cosine Recall@1

- type: cosine_recall@3

value: 0.58215

name: Cosine Recall@3

- type: cosine_recall@5

value: 0.6421

name: Cosine Recall@5

- type: cosine_recall@10

value: 0.7052

name: Cosine Recall@10

- type: cosine_ndcg@10

value: 0.5619612781230402

name: Cosine Ndcg@10

- type: cosine_mrr@10

value: 0.526433492063493

name: Cosine Mrr@10

- type: cosine_map@100

value: 0.514814431994549

name: Cosine Map@100

- task:

type: information-retrieval

name: Information Retrieval

dataset:

name: dim 128

type: dim_128

metrics:

- type: cosine_accuracy@1

value: 0.4264

name: Cosine Accuracy@1

- type: cosine_accuracy@3

value: 0.6

name: Cosine Accuracy@3

- type: cosine_accuracy@5

value: 0.662

name: Cosine Accuracy@5

- type: cosine_accuracy@10

value: 0.7194

name: Cosine Accuracy@10

- type: cosine_precision@1

value: 0.4264

name: Cosine Precision@1

- type: cosine_precision@3

value: 0.2053333333333333

name: Cosine Precision@3

- type: cosine_precision@5

value: 0.13707999999999998

name: Cosine Precision@5

- type: cosine_precision@10

value: 0.07544

name: Cosine Precision@10

- type: cosine_recall@1

value: 0.40606666666666663

name: Cosine Recall@1

- type: cosine_recall@3

value: 0.57705

name: Cosine Recall@3

- type: cosine_recall@5

value: 0.6404666666666667

name: Cosine Recall@5

- type: cosine_recall@10

value: 0.70015

name: Cosine Recall@10

- type: cosine_ndcg@10

value: 0.5591685699820262

name: Cosine Ndcg@10

- type: cosine_mrr@10

value: 0.5244388095238101

name: Cosine Mrr@10

- type: cosine_map@100

value: 0.5128272708639572

name: Cosine Map@100

- task:

type: information-retrieval

name: Information Retrieval

dataset:

name: dim 64

type: dim_64

metrics:

- type: cosine_accuracy@1

value: 0.4076

name: Cosine Accuracy@1

- type: cosine_accuracy@3

value: 0.5866

name: Cosine Accuracy@3

- type: cosine_accuracy@5

value: 0.6478

name: Cosine Accuracy@5

- type: cosine_accuracy@10

value: 0.708

name: Cosine Accuracy@10

- type: cosine_precision@1

value: 0.4076

name: Cosine Precision@1

- type: cosine_precision@3

value: 0.20026666666666665

name: Cosine Precision@3

- type: cosine_precision@5

value: 0.13403999999999996

name: Cosine Precision@5

- type: cosine_precision@10

value: 0.0741

name: Cosine Precision@10

- type: cosine_recall@1

value: 0.38761666666666666

name: Cosine Recall@1

- type: cosine_recall@3

value: 0.5637666666666666

name: Cosine Recall@3

- type: cosine_recall@5

value: 0.6255666666666667

name: Cosine Recall@5

- type: cosine_recall@10

value: 0.6879833333333333

name: Cosine Recall@10

- type: cosine_ndcg@10

value: 0.5444437738024127

name: Cosine Ndcg@10

- type: cosine_mrr@10

value: 0.5090488888888896

name: Cosine Mrr@10

- type: cosine_map@100

value: 0.49745729547355066

name: Cosine Map@100

---

# SentenceTransformer based on Tnt3o5/tnt_v4_lega_new_tokens

This is a [sentence-transformers](https://www.SBERT.net) model finetuned from [Tnt3o5/tnt_v4_lega_new_tokens](https://huggingface.co/Tnt3o5/tnt_v4_lega_new_tokens). It maps sentences & paragraphs to a 768-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Base model:** [Tnt3o5/tnt_v4_lega_new_tokens](https://huggingface.co/Tnt3o5/tnt_v4_lega_new_tokens) <!-- at revision 289ae9c89e03b40e6aa02c8a8b307759eff5ad5b -->

- **Maximum Sequence Length:** 256 tokens

- **Output Dimensionality:** 768 dimensions

- **Similarity Function:** Cosine Similarity

<!-- - **Training Dataset:** Unknown -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: RobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

)

```

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash

pip install -U sentence-transformers

```

Then you can load this model and run inference.

```python

from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub

model = SentenceTransformer("Tnt3o5/tnt_v5_lega_new_tokens")

# Run inference

sentences = [

'Ai có quyền_hủy bỏ kết_quả bầu_cử và quyết_định bầu_cử lại đại_biểu Quốc_hội ?',

'Hủy_bỏ kết_quả bầu_cử và quyết_định bầu_cử lại Hội_đồng_Bầu_cử_Quốc_gia tự mình hoặc theo đề_nghị của Ủy_ban_Thường_vụ_Quốc_hội , Chính_phủ , Ủy_ban trung_ương Mặt_trận_Tổ_quốc Việt_Nam , Ủy_ban bầu_cử ở tỉnh Hủy_bỏ kết_quả bầu_cử ở khu_vực bỏ_phiếu , đơn_vị bầu_cử có vi_phạm_pháp_luật nghiêm_trọng và quyết_định ngày bầu_cử lại ở khu_vực bỏ_phiếu , đơn_vị bầu_cử đó . Trong trường_hợp bầu_cử lại thì ngày bầu_cử được tiến_hành chậm nhất là 15 ngày sau ngày bầu_cử đầu_tiên . Trong cuộc bầu_cử lại , cử_tri chỉ chọn bầu trong danh_sách những người ứng_cử tại cuộc bầu_cử đầu_tiên .',

'Cơ_cấu tổ_chức Tổng cục_trưởng Tổng_cục Hải_quan quy_định nhiệm_vụ và quyền_hạn của các Phòng , Đội , Hải_Đội thuộc và trực_thuộc Cục Điều_tra chống buôn_lậu .',

]

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 768]

# Get the similarity scores for the embeddings

similarities = model.similarity(embeddings, embeddings)

print(similarities.shape)

# [3, 3]

```

<!--

### Direct Usage (Transformers)

<details><summary>Click to see the direct usage in Transformers</summary>

</details>

-->

<!--

### Downstream Usage (Sentence Transformers)

You can finetune this model on your own dataset.

<details><summary>Click to expand</summary>

</details>

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

## Evaluation

### Metrics

#### Information Retrieval

* Datasets: `dim_256`, `dim_128` and `dim_64`

* Evaluated with [<code>InformationRetrievalEvaluator</code>](https://sbert.net/docs/package_reference/sentence_transformer/evaluation.html#sentence_transformers.evaluation.InformationRetrievalEvaluator)

| Metric | dim_256 | dim_128 | dim_64 |

|:--------------------|:----------|:-----------|:-----------|

| cosine_accuracy@1 | 0.4254 | 0.4264 | 0.4076 |

| cosine_accuracy@3 | 0.6052 | 0.6 | 0.5866 |

| cosine_accuracy@5 | 0.6636 | 0.662 | 0.6478 |

| cosine_accuracy@10 | 0.7248 | 0.7194 | 0.708 |

| cosine_precision@1 | 0.4254 | 0.4264 | 0.4076 |

| cosine_precision@3 | 0.2071 | 0.2053 | 0.2003 |

| cosine_precision@5 | 0.1375 | 0.1371 | 0.134 |

| cosine_precision@10 | 0.0759 | 0.0754 | 0.0741 |

| cosine_recall@1 | 0.4051 | 0.4061 | 0.3876 |

| cosine_recall@3 | 0.5821 | 0.577 | 0.5638 |

| cosine_recall@5 | 0.6421 | 0.6405 | 0.6256 |

| cosine_recall@10 | 0.7052 | 0.7002 | 0.688 |

| **cosine_ndcg@10** | **0.562** | **0.5592** | **0.5444** |

| cosine_mrr@10 | 0.5264 | 0.5244 | 0.509 |

| cosine_map@100 | 0.5148 | 0.5128 | 0.4975 |

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Dataset

#### Unnamed Dataset

* Size: 101,442 training samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:----------------------------------------------------------------------------------|:------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 7 tokens</li><li>mean: 20.75 tokens</li><li>max: 46 tokens</li></ul> | <ul><li>min: 10 tokens</li><li>mean: 155.2 tokens</li><li>max: 256 tokens</li></ul> |

* Samples:

| anchor | positive |

|:-----------------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>" Người_lớn ( trên 16 tuổi ) " được hiểu là “ Người_lớn và trẻ_em trên 16 tuổi ”</code> | <code>" Khi triển_khai “ Hướng_dẫn quản_lý tại nhà đối_với người mắc COVID - 19 ” , đề_nghị hướng_dẫn , làm rõ một_số nội_dung như sau : . Mục 3 “ Người_lớn ( trên 16 tuổ ” : đề_nghị hướng_dẫn là “ Người_lớn và trẻ_em trên 16 tuổi ” . "</code> |

| <code>03 Quy_chuẩn kỹ_thuật quốc_gia được ban_hành tại Thông_tư 04 là Quy_chuẩn nào ?</code> | <code>Ban_hành kèm theo Thông_tư này 03 Quy_chuẩn kỹ_thuật quốc_gia sau : Quy_chuẩn kỹ_thuật quốc_gia về bộ trục bánh_xe của đầu_máy , toa_xe Số_hiệu : QCVN 110 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về bộ móc_nối , đỡ đấm của đầu_máy , toa_xe Số_hiệu : QCVN 111 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về van hãm sử_dụng trên đầu_máy , toa_xe Số_hiệu : QCVN 112 : 2023/BGTVT.</code> |

| <code>03 Tổng công_ty Cảng hàng_không thực_hiện hợp_nhất có trách_nhiệm như thế_nào theo quy_định ?</code> | <code>Các Tổng công_ty thực_hiện hợp_nhất nêu tại Điều_1 Quyết_định này có trách_nhiệm chuyển_giao nguyên_trạng toàn_bộ tài_sản , tài_chính , lao_động , đất_đai , dự_án đang triển_khai , các quyền , nghĩa_vụ và lợi_ích hợp_pháp khác sang Tổng công_ty Cảng hàng_không Việt_Nam . Trong thời_gian chưa chuyển_giao , Chủ_tịch Hội_đồng thành_viên , Tổng giám_đốc và các cá_nhân có liên_quan của 03 Tổng công_ty thực_hiện hợp_nhất chịu trách_nhiệm quản_lý toàn_bộ tài_sản , tiền vốn của Tổng công_ty , không để hư_hỏng , hao_hụt , thất_thoát .</code> |

* Loss: [<code>MatryoshkaLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

256,

128,

64

],

"matryoshka_weights": [

1,

1,

1

],

"n_dims_per_step": -1

}

```

### Evaluation Dataset

#### Unnamed Dataset

* Size: 4,450 evaluation samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:----------------------------------------------------------------------------------|:------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 7 tokens</li><li>mean: 20.75 tokens</li><li>max: 46 tokens</li></ul> | <ul><li>min: 10 tokens</li><li>mean: 155.2 tokens</li><li>max: 256 tokens</li></ul> |

* Samples:

| anchor | positive |

|:-----------------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>" Người_lớn ( trên 16 tuổi ) " được hiểu là “ Người_lớn và trẻ_em trên 16 tuổi ”</code> | <code>" Khi triển_khai “ Hướng_dẫn quản_lý tại nhà đối_với người mắc COVID - 19 ” , đề_nghị hướng_dẫn , làm rõ một_số nội_dung như sau : . Mục 3 “ Người_lớn ( trên 16 tuổ ” : đề_nghị hướng_dẫn là “ Người_lớn và trẻ_em trên 16 tuổi ” . "</code> |

| <code>03 Quy_chuẩn kỹ_thuật quốc_gia được ban_hành tại Thông_tư 04 là Quy_chuẩn nào ?</code> | <code>Ban_hành kèm theo Thông_tư này 03 Quy_chuẩn kỹ_thuật quốc_gia sau : Quy_chuẩn kỹ_thuật quốc_gia về bộ trục bánh_xe của đầu_máy , toa_xe Số_hiệu : QCVN 110 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về bộ móc_nối , đỡ đấm của đầu_máy , toa_xe Số_hiệu : QCVN 111 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về van hãm sử_dụng trên đầu_máy , toa_xe Số_hiệu : QCVN 112 : 2023/BGTVT.</code> |

| <code>03 Tổng công_ty Cảng hàng_không thực_hiện hợp_nhất có trách_nhiệm như thế_nào theo quy_định ?</code> | <code>Các Tổng công_ty thực_hiện hợp_nhất nêu tại Điều_1 Quyết_định này có trách_nhiệm chuyển_giao nguyên_trạng toàn_bộ tài_sản , tài_chính , lao_động , đất_đai , dự_án đang triển_khai , các quyền , nghĩa_vụ và lợi_ích hợp_pháp khác sang Tổng công_ty Cảng hàng_không Việt_Nam . Trong thời_gian chưa chuyển_giao , Chủ_tịch Hội_đồng thành_viên , Tổng giám_đốc và các cá_nhân có liên_quan của 03 Tổng công_ty thực_hiện hợp_nhất chịu trách_nhiệm quản_lý toàn_bộ tài_sản , tiền vốn của Tổng công_ty , không để hư_hỏng , hao_hụt , thất_thoát .</code> |

* Loss: [<code>MatryoshkaLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

256,

128,

64

],

"matryoshka_weights": [

1,

1,

1

],

"n_dims_per_step": -1

}

```

### Training Hyperparameters

#### Non-Default Hyperparameters

- `eval_strategy`: steps

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `gradient_accumulation_steps`: 8

- `learning_rate`: 2e-05

- `weight_decay`: 0.01

- `max_grad_norm`: 0.1

- `max_steps`: 1200

- `lr_scheduler_type`: cosine

- `warmup_ratio`: 0.15

- `fp16`: True

- `load_best_model_at_end`: True

- `optim`: adamw_torch_fused

- `gradient_checkpointing`: True

- `batch_sampler`: no_duplicates

#### All Hyperparameters

<details><summary>Click to expand</summary>

- `overwrite_output_dir`: False

- `do_predict`: False

- `eval_strategy`: steps

- `prediction_loss_only`: True

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `per_gpu_train_batch_size`: None

- `per_gpu_eval_batch_size`: None

- `gradient_accumulation_steps`: 8

- `eval_accumulation_steps`: None

- `torch_empty_cache_steps`: None

- `learning_rate`: 2e-05

- `weight_decay`: 0.01

- `adam_beta1`: 0.9

- `adam_beta2`: 0.999

- `adam_epsilon`: 1e-08

- `max_grad_norm`: 0.1

- `num_train_epochs`: 3.0

- `max_steps`: 1200

- `lr_scheduler_type`: cosine

- `lr_scheduler_kwargs`: {}

- `warmup_ratio`: 0.15

- `warmup_steps`: 0

- `log_level`: passive

- `log_level_replica`: warning

- `log_on_each_node`: True

- `logging_nan_inf_filter`: True

- `save_safetensors`: True

- `save_on_each_node`: False

- `save_only_model`: False

- `restore_callback_states_from_checkpoint`: False

- `no_cuda`: False

- `use_cpu`: False

- `use_mps_device`: False

- `seed`: 42

- `data_seed`: None

- `jit_mode_eval`: False

- `use_ipex`: False

- `bf16`: False

- `fp16`: True

- `fp16_opt_level`: O1

- `half_precision_backend`: auto

- `bf16_full_eval`: False

- `fp16_full_eval`: False

- `tf32`: None

- `local_rank`: 0

- `ddp_backend`: None

- `tpu_num_cores`: None

- `tpu_metrics_debug`: False

- `debug`: []

- `dataloader_drop_last`: False

- `dataloader_num_workers`: 0

- `dataloader_prefetch_factor`: None

- `past_index`: -1

- `disable_tqdm`: False

- `remove_unused_columns`: True

- `label_names`: None

- `load_best_model_at_end`: True

- `ignore_data_skip`: False

- `fsdp`: []

- `fsdp_min_num_params`: 0

- `fsdp_config`: {'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False}

- `fsdp_transformer_layer_cls_to_wrap`: None

- `accelerator_config`: {'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None}

- `deepspeed`: None

- `label_smoothing_factor`: 0.0

- `optim`: adamw_torch_fused

- `optim_args`: None

- `adafactor`: False

- `group_by_length`: False

- `length_column_name`: length

- `ddp_find_unused_parameters`: None

- `ddp_bucket_cap_mb`: None

- `ddp_broadcast_buffers`: False

- `dataloader_pin_memory`: True

- `dataloader_persistent_workers`: False

- `skip_memory_metrics`: True

- `use_legacy_prediction_loop`: False

- `push_to_hub`: False

- `resume_from_checkpoint`: None

- `hub_model_id`: None

- `hub_strategy`: every_save

- `hub_private_repo`: False

- `hub_always_push`: False

- `gradient_checkpointing`: True

- `gradient_checkpointing_kwargs`: None

- `include_inputs_for_metrics`: False

- `eval_do_concat_batches`: True

- `fp16_backend`: auto

- `push_to_hub_model_id`: None

- `push_to_hub_organization`: None

- `mp_parameters`:

- `auto_find_batch_size`: False

- `full_determinism`: False

- `torchdynamo`: None

- `ray_scope`: last

- `ddp_timeout`: 1800

- `torch_compile`: False

- `torch_compile_backend`: None

- `torch_compile_mode`: None

- `dispatch_batches`: None

- `split_batches`: None

- `include_tokens_per_second`: False

- `include_num_input_tokens_seen`: False

- `neftune_noise_alpha`: None

- `optim_target_modules`: None

- `batch_eval_metrics`: False

- `eval_on_start`: False

- `use_liger_kernel`: False

- `eval_use_gather_object`: False

- `prompts`: None

- `batch_sampler`: no_duplicates

- `multi_dataset_batch_sampler`: proportional

</details>

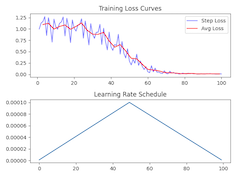

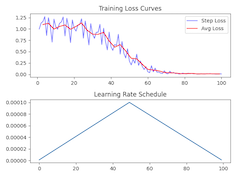

### Training Logs

| Epoch | Step | Training Loss | Validation Loss | dim_256_cosine_ndcg@10 | dim_128_cosine_ndcg@10 | dim_64_cosine_ndcg@10 |

|:----------:|:--------:|:-------------:|:---------------:|:----------------------:|:----------------------:|:---------------------:|

| 0.5047 | 400 | 0.4797 | 0.3000 | 0.5544 | 0.5504 | 0.5393 |

| 1.0090 | 800 | 0.4274 | 0.2888 | 0.5583 | 0.5534 | 0.5415 |

| **1.5136** | **1200** | **0.3211** | **0.2089** | **0.562** | **0.5592** | **0.5444** |

* The bold row denotes the saved checkpoint.

### Framework Versions

- Python: 3.10.14

- Sentence Transformers: 3.3.0

- Transformers: 4.45.1

- PyTorch: 2.4.0

- Accelerate: 0.34.2

- Datasets: 3.0.1

- Tokenizers: 0.20.0

## Citation

### BibTeX

#### Sentence Transformers

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "https://arxiv.org/abs/1908.10084",

}

```

#### MatryoshkaLoss

```bibtex

@misc{kusupati2024matryoshka,

title={Matryoshka Representation Learning},

author={Aditya Kusupati and Gantavya Bhatt and Aniket Rege and Matthew Wallingford and Aditya Sinha and Vivek Ramanujan and William Howard-Snyder and Kaifeng Chen and Sham Kakade and Prateek Jain and Ali Farhadi},

year={2024},

eprint={2205.13147},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

#### MultipleNegativesRankingLoss

```bibtex

@misc{henderson2017efficient,

title={Efficient Natural Language Response Suggestion for Smart Reply},

author={Matthew Henderson and Rami Al-Rfou and Brian Strope and Yun-hsuan Sung and Laszlo Lukacs and Ruiqi Guo and Sanjiv Kumar and Balint Miklos and Ray Kurzweil},

year={2017},

eprint={1705.00652},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

-->

|

[

"CHIA"

] |

Non_BioNLP

|

# SentenceTransformer based on Tnt3o5/tnt_v4_lega_new_tokens

This is a [sentence-transformers](https://www.SBERT.net) model finetuned from [Tnt3o5/tnt_v4_lega_new_tokens](https://huggingface.co/Tnt3o5/tnt_v4_lega_new_tokens). It maps sentences & paragraphs to a 768-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Base model:** [Tnt3o5/tnt_v4_lega_new_tokens](https://huggingface.co/Tnt3o5/tnt_v4_lega_new_tokens) <!-- at revision 289ae9c89e03b40e6aa02c8a8b307759eff5ad5b -->

- **Maximum Sequence Length:** 256 tokens

- **Output Dimensionality:** 768 dimensions

- **Similarity Function:** Cosine Similarity

<!-- - **Training Dataset:** Unknown -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: RobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

)

```

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash

pip install -U sentence-transformers

```

Then you can load this model and run inference.

```python

from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub

model = SentenceTransformer("Tnt3o5/tnt_v5_lega_new_tokens")

# Run inference

sentences = [

'Ai có quyền_hủy bỏ kết_quả bầu_cử và quyết_định bầu_cử lại đại_biểu Quốc_hội ?',

'Hủy_bỏ kết_quả bầu_cử và quyết_định bầu_cử lại Hội_đồng_Bầu_cử_Quốc_gia tự mình hoặc theo đề_nghị của Ủy_ban_Thường_vụ_Quốc_hội , Chính_phủ , Ủy_ban trung_ương Mặt_trận_Tổ_quốc Việt_Nam , Ủy_ban bầu_cử ở tỉnh Hủy_bỏ kết_quả bầu_cử ở khu_vực bỏ_phiếu , đơn_vị bầu_cử có vi_phạm_pháp_luật nghiêm_trọng và quyết_định ngày bầu_cử lại ở khu_vực bỏ_phiếu , đơn_vị bầu_cử đó . Trong trường_hợp bầu_cử lại thì ngày bầu_cử được tiến_hành chậm nhất là 15 ngày sau ngày bầu_cử đầu_tiên . Trong cuộc bầu_cử lại , cử_tri chỉ chọn bầu trong danh_sách những người ứng_cử tại cuộc bầu_cử đầu_tiên .',

'Cơ_cấu tổ_chức Tổng cục_trưởng Tổng_cục Hải_quan quy_định nhiệm_vụ và quyền_hạn của các Phòng , Đội , Hải_Đội thuộc và trực_thuộc Cục Điều_tra chống buôn_lậu .',

]

embeddings = model.encode(sentences)

print(embeddings.shape)

# [3, 768]

# Get the similarity scores for the embeddings

similarities = model.similarity(embeddings, embeddings)

print(similarities.shape)

# [3, 3]

```

<!--

### Direct Usage (Transformers)

<details><summary>Click to see the direct usage in Transformers</summary>

</details>

-->

<!--

### Downstream Usage (Sentence Transformers)

You can finetune this model on your own dataset.

<details><summary>Click to expand</summary>

</details>

-->

<!--

### Out-of-Scope Use

*List how the model may foreseeably be misused and address what users ought not to do with the model.*

-->

## Evaluation

### Metrics

#### Information Retrieval

* Datasets: `dim_256`, `dim_128` and `dim_64`

* Evaluated with [<code>InformationRetrievalEvaluator</code>](https://sbert.net/docs/package_reference/sentence_transformer/evaluation.html#sentence_transformers.evaluation.InformationRetrievalEvaluator)

| Metric | dim_256 | dim_128 | dim_64 |

|:--------------------|:----------|:-----------|:-----------|

| cosine_accuracy@1 | 0.4254 | 0.4264 | 0.4076 |

| cosine_accuracy@3 | 0.6052 | 0.6 | 0.5866 |

| cosine_accuracy@5 | 0.6636 | 0.662 | 0.6478 |

| cosine_accuracy@10 | 0.7248 | 0.7194 | 0.708 |

| cosine_precision@1 | 0.4254 | 0.4264 | 0.4076 |

| cosine_precision@3 | 0.2071 | 0.2053 | 0.2003 |

| cosine_precision@5 | 0.1375 | 0.1371 | 0.134 |

| cosine_precision@10 | 0.0759 | 0.0754 | 0.0741 |

| cosine_recall@1 | 0.4051 | 0.4061 | 0.3876 |

| cosine_recall@3 | 0.5821 | 0.577 | 0.5638 |

| cosine_recall@5 | 0.6421 | 0.6405 | 0.6256 |

| cosine_recall@10 | 0.7052 | 0.7002 | 0.688 |

| **cosine_ndcg@10** | **0.562** | **0.5592** | **0.5444** |

| cosine_mrr@10 | 0.5264 | 0.5244 | 0.509 |

| cosine_map@100 | 0.5148 | 0.5128 | 0.4975 |

<!--

## Bias, Risks and Limitations

*What are the known or foreseeable issues stemming from this model? You could also flag here known failure cases or weaknesses of the model.*

-->

<!--

### Recommendations

*What are recommendations with respect to the foreseeable issues? For example, filtering explicit content.*

-->

## Training Details

### Training Dataset

#### Unnamed Dataset

* Size: 101,442 training samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:----------------------------------------------------------------------------------|:------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 7 tokens</li><li>mean: 20.75 tokens</li><li>max: 46 tokens</li></ul> | <ul><li>min: 10 tokens</li><li>mean: 155.2 tokens</li><li>max: 256 tokens</li></ul> |

* Samples:

| anchor | positive |

|:-----------------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>" Người_lớn ( trên 16 tuổi ) " được hiểu là “ Người_lớn và trẻ_em trên 16 tuổi ”</code> | <code>" Khi triển_khai “ Hướng_dẫn quản_lý tại nhà đối_với người mắc COVID - 19 ” , đề_nghị hướng_dẫn , làm rõ một_số nội_dung như sau : . Mục 3 “ Người_lớn ( trên 16 tuổ ” : đề_nghị hướng_dẫn là “ Người_lớn và trẻ_em trên 16 tuổi ” . "</code> |

| <code>03 Quy_chuẩn kỹ_thuật quốc_gia được ban_hành tại Thông_tư 04 là Quy_chuẩn nào ?</code> | <code>Ban_hành kèm theo Thông_tư này 03 Quy_chuẩn kỹ_thuật quốc_gia sau : Quy_chuẩn kỹ_thuật quốc_gia về bộ trục bánh_xe của đầu_máy , toa_xe Số_hiệu : QCVN 110 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về bộ móc_nối , đỡ đấm của đầu_máy , toa_xe Số_hiệu : QCVN 111 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về van hãm sử_dụng trên đầu_máy , toa_xe Số_hiệu : QCVN 112 : 2023/BGTVT.</code> |

| <code>03 Tổng công_ty Cảng hàng_không thực_hiện hợp_nhất có trách_nhiệm như thế_nào theo quy_định ?</code> | <code>Các Tổng công_ty thực_hiện hợp_nhất nêu tại Điều_1 Quyết_định này có trách_nhiệm chuyển_giao nguyên_trạng toàn_bộ tài_sản , tài_chính , lao_động , đất_đai , dự_án đang triển_khai , các quyền , nghĩa_vụ và lợi_ích hợp_pháp khác sang Tổng công_ty Cảng hàng_không Việt_Nam . Trong thời_gian chưa chuyển_giao , Chủ_tịch Hội_đồng thành_viên , Tổng giám_đốc và các cá_nhân có liên_quan của 03 Tổng công_ty thực_hiện hợp_nhất chịu trách_nhiệm quản_lý toàn_bộ tài_sản , tiền vốn của Tổng công_ty , không để hư_hỏng , hao_hụt , thất_thoát .</code> |

* Loss: [<code>MatryoshkaLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

256,

128,

64

],

"matryoshka_weights": [

1,

1,

1

],

"n_dims_per_step": -1

}

```

### Evaluation Dataset

#### Unnamed Dataset

* Size: 4,450 evaluation samples

* Columns: <code>anchor</code> and <code>positive</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive |

|:--------|:----------------------------------------------------------------------------------|:------------------------------------------------------------------------------------|

| type | string | string |

| details | <ul><li>min: 7 tokens</li><li>mean: 20.75 tokens</li><li>max: 46 tokens</li></ul> | <ul><li>min: 10 tokens</li><li>mean: 155.2 tokens</li><li>max: 256 tokens</li></ul> |

* Samples:

| anchor | positive |

|:-----------------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>" Người_lớn ( trên 16 tuổi ) " được hiểu là “ Người_lớn và trẻ_em trên 16 tuổi ”</code> | <code>" Khi triển_khai “ Hướng_dẫn quản_lý tại nhà đối_với người mắc COVID - 19 ” , đề_nghị hướng_dẫn , làm rõ một_số nội_dung như sau : . Mục 3 “ Người_lớn ( trên 16 tuổ ” : đề_nghị hướng_dẫn là “ Người_lớn và trẻ_em trên 16 tuổi ” . "</code> |

| <code>03 Quy_chuẩn kỹ_thuật quốc_gia được ban_hành tại Thông_tư 04 là Quy_chuẩn nào ?</code> | <code>Ban_hành kèm theo Thông_tư này 03 Quy_chuẩn kỹ_thuật quốc_gia sau : Quy_chuẩn kỹ_thuật quốc_gia về bộ trục bánh_xe của đầu_máy , toa_xe Số_hiệu : QCVN 110 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về bộ móc_nối , đỡ đấm của đầu_máy , toa_xe Số_hiệu : QCVN 111 : 2023/BGTVT. Quy_chuẩn kỹ_thuật quốc_gia về van hãm sử_dụng trên đầu_máy , toa_xe Số_hiệu : QCVN 112 : 2023/BGTVT.</code> |

| <code>03 Tổng công_ty Cảng hàng_không thực_hiện hợp_nhất có trách_nhiệm như thế_nào theo quy_định ?</code> | <code>Các Tổng công_ty thực_hiện hợp_nhất nêu tại Điều_1 Quyết_định này có trách_nhiệm chuyển_giao nguyên_trạng toàn_bộ tài_sản , tài_chính , lao_động , đất_đai , dự_án đang triển_khai , các quyền , nghĩa_vụ và lợi_ích hợp_pháp khác sang Tổng công_ty Cảng hàng_không Việt_Nam . Trong thời_gian chưa chuyển_giao , Chủ_tịch Hội_đồng thành_viên , Tổng giám_đốc và các cá_nhân có liên_quan của 03 Tổng công_ty thực_hiện hợp_nhất chịu trách_nhiệm quản_lý toàn_bộ tài_sản , tiền vốn của Tổng công_ty , không để hư_hỏng , hao_hụt , thất_thoát .</code> |

* Loss: [<code>MatryoshkaLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#matryoshkaloss) with these parameters:

```json

{

"loss": "MultipleNegativesRankingLoss",

"matryoshka_dims": [

256,

128,

64

],

"matryoshka_weights": [

1,

1,

1

],

"n_dims_per_step": -1

}

```

### Training Hyperparameters

#### Non-Default Hyperparameters

- `eval_strategy`: steps

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `gradient_accumulation_steps`: 8

- `learning_rate`: 2e-05

- `weight_decay`: 0.01

- `max_grad_norm`: 0.1

- `max_steps`: 1200

- `lr_scheduler_type`: cosine

- `warmup_ratio`: 0.15

- `fp16`: True

- `load_best_model_at_end`: True

- `optim`: adamw_torch_fused

- `gradient_checkpointing`: True

- `batch_sampler`: no_duplicates

#### All Hyperparameters

<details><summary>Click to expand</summary>

- `overwrite_output_dir`: False

- `do_predict`: False

- `eval_strategy`: steps

- `prediction_loss_only`: True

- `per_device_train_batch_size`: 16

- `per_device_eval_batch_size`: 16

- `per_gpu_train_batch_size`: None

- `per_gpu_eval_batch_size`: None

- `gradient_accumulation_steps`: 8

- `eval_accumulation_steps`: None

- `torch_empty_cache_steps`: None

- `learning_rate`: 2e-05

- `weight_decay`: 0.01

- `adam_beta1`: 0.9

- `adam_beta2`: 0.999

- `adam_epsilon`: 1e-08

- `max_grad_norm`: 0.1

- `num_train_epochs`: 3.0

- `max_steps`: 1200

- `lr_scheduler_type`: cosine

- `lr_scheduler_kwargs`: {}

- `warmup_ratio`: 0.15

- `warmup_steps`: 0

- `log_level`: passive

- `log_level_replica`: warning

- `log_on_each_node`: True

- `logging_nan_inf_filter`: True

- `save_safetensors`: True

- `save_on_each_node`: False

- `save_only_model`: False

- `restore_callback_states_from_checkpoint`: False

- `no_cuda`: False

- `use_cpu`: False

- `use_mps_device`: False

- `seed`: 42

- `data_seed`: None

- `jit_mode_eval`: False

- `use_ipex`: False

- `bf16`: False

- `fp16`: True

- `fp16_opt_level`: O1

- `half_precision_backend`: auto

- `bf16_full_eval`: False

- `fp16_full_eval`: False

- `tf32`: None

- `local_rank`: 0

- `ddp_backend`: None

- `tpu_num_cores`: None

- `tpu_metrics_debug`: False

- `debug`: []

- `dataloader_drop_last`: False

- `dataloader_num_workers`: 0

- `dataloader_prefetch_factor`: None

- `past_index`: -1

- `disable_tqdm`: False

- `remove_unused_columns`: True

- `label_names`: None

- `load_best_model_at_end`: True

- `ignore_data_skip`: False

- `fsdp`: []

- `fsdp_min_num_params`: 0

- `fsdp_config`: {'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False}

- `fsdp_transformer_layer_cls_to_wrap`: None

- `accelerator_config`: {'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None}

- `deepspeed`: None

- `label_smoothing_factor`: 0.0

- `optim`: adamw_torch_fused

- `optim_args`: None

- `adafactor`: False

- `group_by_length`: False

- `length_column_name`: length

- `ddp_find_unused_parameters`: None

- `ddp_bucket_cap_mb`: None

- `ddp_broadcast_buffers`: False

- `dataloader_pin_memory`: True

- `dataloader_persistent_workers`: False

- `skip_memory_metrics`: True

- `use_legacy_prediction_loop`: False

- `push_to_hub`: False

- `resume_from_checkpoint`: None

- `hub_model_id`: None

- `hub_strategy`: every_save

- `hub_private_repo`: False

- `hub_always_push`: False

- `gradient_checkpointing`: True

- `gradient_checkpointing_kwargs`: None

- `include_inputs_for_metrics`: False

- `eval_do_concat_batches`: True

- `fp16_backend`: auto

- `push_to_hub_model_id`: None

- `push_to_hub_organization`: None

- `mp_parameters`:

- `auto_find_batch_size`: False

- `full_determinism`: False

- `torchdynamo`: None

- `ray_scope`: last

- `ddp_timeout`: 1800

- `torch_compile`: False

- `torch_compile_backend`: None

- `torch_compile_mode`: None

- `dispatch_batches`: None

- `split_batches`: None

- `include_tokens_per_second`: False

- `include_num_input_tokens_seen`: False

- `neftune_noise_alpha`: None

- `optim_target_modules`: None

- `batch_eval_metrics`: False

- `eval_on_start`: False

- `use_liger_kernel`: False

- `eval_use_gather_object`: False

- `prompts`: None

- `batch_sampler`: no_duplicates

- `multi_dataset_batch_sampler`: proportional

</details>

### Training Logs

| Epoch | Step | Training Loss | Validation Loss | dim_256_cosine_ndcg@10 | dim_128_cosine_ndcg@10 | dim_64_cosine_ndcg@10 |

|:----------:|:--------:|:-------------:|:---------------:|:----------------------:|:----------------------:|:---------------------:|

| 0.5047 | 400 | 0.4797 | 0.3000 | 0.5544 | 0.5504 | 0.5393 |

| 1.0090 | 800 | 0.4274 | 0.2888 | 0.5583 | 0.5534 | 0.5415 |

| **1.5136** | **1200** | **0.3211** | **0.2089** | **0.562** | **0.5592** | **0.5444** |

* The bold row denotes the saved checkpoint.

### Framework Versions

- Python: 3.10.14

- Sentence Transformers: 3.3.0

- Transformers: 4.45.1

- PyTorch: 2.4.0

- Accelerate: 0.34.2

- Datasets: 3.0.1

- Tokenizers: 0.20.0

## Citation

### BibTeX

#### Sentence Transformers

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "https://arxiv.org/abs/1908.10084",

}

```

#### MatryoshkaLoss

```bibtex

@misc{kusupati2024matryoshka,

title={Matryoshka Representation Learning},

author={Aditya Kusupati and Gantavya Bhatt and Aniket Rege and Matthew Wallingford and Aditya Sinha and Vivek Ramanujan and William Howard-Snyder and Kaifeng Chen and Sham Kakade and Prateek Jain and Ali Farhadi},

year={2024},

eprint={2205.13147},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

#### MultipleNegativesRankingLoss

```bibtex

@misc{henderson2017efficient,

title={Efficient Natural Language Response Suggestion for Smart Reply},

author={Matthew Henderson and Rami Al-Rfou and Brian Strope and Yun-hsuan Sung and Laszlo Lukacs and Ruiqi Guo and Sanjiv Kumar and Balint Miklos and Ray Kurzweil},

year={2017},

eprint={1705.00652},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

<!--

## Glossary

*Clearly define terms in order to be accessible across audiences.*

-->

<!--

## Model Card Authors

*Lists the people who create the model card, providing recognition and accountability for the detailed work that goes into its construction.*

-->

<!--

## Model Card Contact

*Provides a way for people who have updates to the Model Card, suggestions, or questions, to contact the Model Card authors.*

-->

|

{"base_model": "Tnt3o5/tnt_v4_lega_new_tokens", "library_name": "sentence-transformers", "metrics": ["cosine_accuracy@1", "cosine_accuracy@3", "cosine_accuracy@5", "cosine_accuracy@10", "cosine_precision@1", "cosine_precision@3", "cosine_precision@5", "cosine_precision@10", "cosine_recall@1", "cosine_recall@3", "cosine_recall@5", "cosine_recall@10", "cosine_ndcg@10", "cosine_mrr@10", "cosine_map@100"], "pipeline_tag": "sentence-similarity", "tags": ["sentence-transformers", "sentence-similarity", "feature-extraction", "generated_from_trainer", "dataset_size:101442", "loss:MatryoshkaLoss", "loss:MultipleNegativesRankingLoss"], "widget": [{"source_sentence": "Ai có quyền điều_chỉnh Mệnh_lệnh vận_chuyển vật_liệu nổ công_nghiệp trong doanh_nghiệp Quân_đội ?", "sentences": ["Quyền đăng_ký sáng_chế , kiểu_dáng công_nghiệp , thiết_kế bố_trí Tổ_chức , cá_nhân sau đây có quyền đăng_ký sáng_chế , kiểu_dáng công_nghiệp , thiết_kế bố_trí : Tác giả_tạo ra sáng_chế , kiểu_dáng công_nghiệp , thiết_kế bố_trí bằng công_sức và chi_phí của mình ; Tổ_chức , cá_nhân đầu_tư kinh_phí , phương_tiện vật_chất cho tác_giả dưới hình_thức giao việc , thuê việc , tổ_chức , cá_nhân được giao quản_lý nguồn gen cung_cấp nguồn gen , tri_thức truyền_thống về nguồn gen theo hợp_đồng tiếp_cận nguồn gen và chia_sẻ lợi_ích , trừ trường_hợp các bên có thỏa_thuận khác hoặc trường_hợp quy_định tại Điều_86a của Luật này . Trường_hợp nhiều tổ_chức , cá_nhân cùng nhau tạo ra hoặc đầu_tư để tạo ra sáng_chế , kiểu_dáng công_nghiệp , thiết_kế bố_trí thì các tổ_chức , cá_nhân đó đều có quyền đăng_ký và quyền đăng_ký đó chỉ được thực_hiện nếu được tất_cả các tổ_chức , cá_nhân đó đồng_ý . Tổ_chức , cá_nhân có quyền đăng_ký quy_định tại Điều này có quyền chuyển_giao quyền đăng_ký cho tổ_chức , cá_nhân khác dưới hình_thức hợp_đồng bằng văn_bản , để thừa_kế hoặc kế_thừa theo quy_định của pháp_luật , kể_cả trường_hợp đã nộp đơn đăng_ký .", "Nhiệm_vụ cụ_thể của các thành_viên Hội_đồng Ngoài việc thực_hiện các nhiệm_vụ quy_định tại Điều_5 của Quy_chế này , Thành_viên Hội_đồng còn có nhiệm_vụ cụ_thể sau đây : Thành_viên Hội_đồng là Lãnh_đạo Vụ Pháp_chế có nhiệm_vụ giúp Chủ_tịch , Phó Chủ_tịch Hội_đồng , Hội_đồng , điều_hành các công_việc thường_xuyên của Hội_đồng ; trực_tiếp lãnh_đạo Tổ Thường_trực ; giải_quyết công_việc đột_xuất của Hội_đồng khi cả Chủ_tịch và Phó Chủ_tịch Hội đồng_đều đi vắng . Thành_viên Hội_đồng là Lãnh_đạo Vụ An_toàn giao_thông có nhiệm_vụ trực_tiếp theo_dõi , đôn_đốc , kiểm_tra và phối_hợp với thủ_trưởng các cơ_quan , đơn_vị thuộc Bộ , Thành_viên Hội_đồng là Lãnh_đạo Văn_phòng Ủy_ban ATGTQG , Giám_đốc Sở GTVT , Chủ_tịch Tập_đoàn VINASHIN , Tổng giám_đốc các Tổng Công_ty : Hàng_hải Việt_Nam , Đường_sắt Việt_Nam , Hàng_không Việt_Nam chỉ_đạo công_tác tuyên_truyền PBGDPL về trật_tự , an_toàn giao_thông .", "Cấp , điều_chỉnh , thu_hồi và tạm ngừng cấp_Mệnh lệnh vận_chuyển vật_liệu nổ công_nghiệp , tiền chất thuốc_nổ Tổng_Tham_mưu_trưởng cấp , điều_chỉnh , thu_hồi hoặc ủy_quyền cho người chỉ_huy cơ_quan , đơn_vị thuộc quyền dưới một cấp cấp , điều_chỉnh , thu_hồi Mệnh_lệnh vận_chuyển vật_liệu nổ công_nghiệp , tiền chất thuốc_nổ cho cá 5 doanh_nghiệp trực_thuộc Bộ Quốc_phòng và các doanh_nghiệp cổ_phần có vốn nhà_nước do Bộ Quốc_phòng làm đại_diện chủ sở_hữu . Đối_với trường_hợp đột_xuất khác không có trong kế_hoạch được Tổng_Tham_mưu_trưởng phê_duyệt như quy_định tại Điều_5 Thông_tư này , cơ_quan , đơn_vị , doanh_nghiệp cấp dưới báo_cáo cơ_quan , đơn_vị , doanh_nghiệp trực_thuộc Bộ Quốc_phòng đề_nghị Tổng_Tham_mưu_trưởng cấp_Mệnh lệnh vận_chuyển vật_liệu nổ công_nghiệp , tiền chất thuốc_nổ . Người chỉ_huy cơ_quan , đơn_vị ( không phải doanh nghiệ trực_thuộc Bộ Quốc_phòng căn_cứ vào kế_hoạch được Tổng_Tham_mưu_trưởng phê_duyệt , thực_hiện hoặc ủy_quyền cho người chỉ_huy cơ_quan , đơn_vị thuộc quyền dưới một cấp cấp , điều_chỉnh , thu_hồi Mệnh_lệnh vận_chuyển vật_liệu nổ công_nghiệp , tiền chất thuốc_nổ cho đối_tượng thuộc phạm_vi quản_lý ."]}, {"source_sentence": "Ai có quyền quyết_định phong quân_hàm Đại_tá đối_với sĩ_quan Quân_đội giữ chức_vụ Chính_ủy Lữ_đoàn ?", "sentences": ["Thẩm_quyền quyết_định đối_với sĩ_quan Thẩm_quyền bổ_nhiệm , miễn_nhiệm , cách_chức , phong , thăng , giáng , tước quân_hàm đối_với sĩ_quan được quy_định như sau : Chủ_tịch_nước bổ_nhiệm , miễn_nhiệm , cách_chức Tổng_Tham_mưu_trưởng , Chủ_nhiệm Tổng_Cục_Chính_trị ; phong , thăng , giáng , tước quân_hàm Cấp tướng , Chuẩn Đô_đốc , Phó Đô_đốc , Đô_đốc Hải_quân ; Thủ_tướng_Chính_phủ bổ_nhiệm , miễn_nhiệm , cách_chức Thứ_trưởng ; Phó_Tổng_Tham_mưu_trưởng , Phó Chủ_nhiệm Tổng_Cục_Chính_trị ; Giám_đốc , Chính_ủy Học_viện Quốc_phòng ; Chủ_nhiệm Tổng_cục , Tổng cục_trưởng , Chính_ủy Tổng_cục ; Tư_lệnh , Chính_ủy Quân_khu ; Tư_lệnh , Chính_ủy Quân_chủng ; Tư_lệnh , Chính_ủy Bộ_đội Biên_phòng ; Tư_lệnh , Chính_ủy Cảnh_sát biển Việt_Nam ; Trưởng_Ban Cơ_yếu Chính_phủ và các chức_vụ khác theo quy_định của Cấp có thẩm_quyền ; Bộ_trưởng_Bộ_Quốc_phòng bổ_nhiệm , miễn_nhiệm , cách_chức các chức_vụ và phong , thăng , giáng , tước các Cấp_bậc quân_hàm còn lại và nâng lương sĩ_quan ; Việc bổ_nhiệm , miễn_nhiệm , cách_chức các chức_vụ thuộc ngành Kiểm_sát , Toà_án , Thi_hành án trong quân_đội được thực_hiện theo quy_định của pháp_luật . Cấp có thẩm_quyền quyết_định bổ_nhiệm đến chức_vụ nào thì có quyền miễn_nhiệm , cách_chức , giáng chức , quyết_định kéo_dài thời_hạn phục_vụ tại_ngũ , điều_động , biệt_phái , giao chức_vụ thấp hơn , cho thôi phục_vụ tại_ngũ , chuyển ngạch và giải ngạch sĩ_quan dự_bị đến chức_vụ đó .", "Nhiệm_vụ , quyền_hạn của Tổng Giám_đốc Trình Hội_đồng thành_viên VNPT để Hội_đồng thành_viên Trình cơ_quan nhà_nước có thẩm_quyền quyết_định hoặc phê_duyệt các nội_dung thuộc quyền của chủ sở_hữu đối_với VNPT theo quy_định của Điều_lệ này . Trình Hội_đồng thành_viên VNPT xem_xét , quyết_định các nội_dung thuộc thẩm_quyền của Hội_đồng thành_viên VNPT. Ban_hành quy_chế quản_lý nội_bộ sau khi Hội_đồng thành_viên thông_qua . Theo phân_cấp hoặc ủy_quyền theo quy_định của Điều_lệ này , Quy_chế_tài_chính , các quy_chế quản_lý nội_bộ của VNPT và các quy_định khác của pháp_luật , Tổng Giám_đốc quyết_định : Các dự_án đầu_tư ; hợp_đồng mua , bán tài_sản . Các hợp_đồng vay , thuê , cho thuê và hợp_đồng khác . Phương_án sử_dụng vốn , tài_sản của VNPT để góp vốn , mua cổ_phần của các doanh_nghiệp . Ban_hành các quy_định , quy Trình nội_bộ phục_vụ công_tác quản_lý , Điều_hành sản_xuất kinh_doanh của VNPT. Quyết_định thành_lập , giải_thể , tổ_chức lại các đơn_vị kinh_tế hạch_toán phụ_thuộc đơn_vị trực_thuộc của VNPT.", "Thẩm_quyền quyết_định đối_với sĩ_quan Thẩm_quyền bổ_nhiệm , miễn_nhiệm , cách_chức , phong , thăng , giáng , tước quân_hàm đối_với sĩ_quan được quy_định như sau : Chủ_tịch_nước bổ_nhiệm , miễn_nhiệm , cách_chức Tổng_Tham_mưu_trưởng , Chủ_nhiệm Tổng_Cục_Chính_trị ; phong , thăng , giáng , tước quân_hàm Cấp tướng , Chuẩn Đô_đốc , Phó Đô_đốc , Đô_đốc Hải_quân ; Thủ_tướng_Chính_phủ bổ_nhiệm , miễn_nhiệm , cách_chức Thứ_trưởng ; Phó_Tổng_Tham_mưu_trưởng , Phó Chủ_nhiệm Tổng_Cục_Chính_trị ; Giám_đốc , Chính_ủy Học_viện Quốc_phòng ; Chủ_nhiệm Tổng_cục , Tổng cục_trưởng , Chính_ủy Tổng_cục ; Tư_lệnh , Chính_ủy Quân_khu ; Tư_lệnh , Chính_ủy Quân_chủng ; Tư_lệnh , Chính_ủy Bộ_đội Biên_phòng ; Tư_lệnh , Chính_ủy Cảnh_sát biển Việt_Nam ; Trưởng_Ban Cơ_yếu Chính_phủ và các chức_vụ khác theo quy_định của Cấp có thẩm_quyền ; Bộ_trưởng_Bộ_Quốc_phòng bổ_nhiệm , miễn_nhiệm , cách_chức các chức_vụ và phong , thăng , giáng , tước các Cấp_bậc quân_hàm còn lại và nâng lương sĩ_quan ; Việc bổ_nhiệm , miễn_nhiệm , cách_chức các chức_vụ thuộc ngành Kiểm_sát , Toà_án , Thi_hành án trong quân_đội được thực_hiện theo quy_định của pháp_luật . Cấp có thẩm_quyền quyết_định bổ_nhiệm đến chức_vụ nào thì có quyền miễn_nhiệm , cách_chức , giáng chức , quyết_định kéo_dài thời_hạn phục_vụ tại_ngũ , điều_động , biệt_phái , giao chức_vụ thấp hơn , cho thôi phục_vụ tại_ngũ , chuyển ngạch và giải ngạch sĩ_quan dự_bị đến chức_vụ đó ."]}, {"source_sentence": "Ai có quyền quyết_định thành_lập Hội_đồng Giám_định y_khoa cấp tỉnh ? Hội_đồng có tư_cách pháp_nhân không ?", "sentences": ["Thẩm_quyền thành_lập Hội_đồng giám_định y_khoa các cấp Hội_đồng giám_định y_khoa cấp tỉnh do cơ_quan chuyên_môn thuộc Ủy_ban_nhân_dân tỉnh quyết_định thành_lập . Hội_đồng giám_định y_khoa cấp trung_ương do Bộ_Y_tế quyết_định thành_lập . Bộ Quốc_phòng , Bộ_Công_An , Bộ_Giao_thông_Vận_tải căn_cứ quy_định của Thông_tư này để quyết_định thành_lập Hội_đồng giám_định y_khoa các Bộ theo quy_định tại điểm_b Khoản_2 Điều_161 Nghị_định số 131/2021/NĐCP.", "Thẩm_quyền phong , thăng , giáng , tước cấp_bậc hàm , nâng lương sĩ_quan , hạ sĩ_quan , chiến_sĩ ; bổ_nhiệm , miễn_nhiệm , cách_chức , giáng chức các chức_vụ ; bổ_nhiệm , miễn_nhiệm chức_danh trong Công_an nhân_dân Chủ_tịch_nước phong , thăng cấp_bậc hàm_cấp tướng đối_với sĩ_quan Công_an nhân_dân . Thủ_tướng_Chính_phủ bổ_nhiệm chức_vụ Thứ_trưởng Bộ_Công_An ; quyết_định nâng lương cấp_bậc hàm Đại_tướng , Thượng_tướng . Bộ_trưởng Bộ_Công_An quyết_định nâng lương cấp_bậc hàm Trung_tướng , Thiếu_tướng ; quy_định việc phong , thăng , nâng lương các cấp_bậc hàm , bổ_nhiệm các chức_vụ , chức_danh còn lại trong Công_an nhân_dân . Người có thẩm_quyền phong , thăng cấp_bậc hàm nào thì có thẩm_quyền giáng , tước cấp_bậc hàm đó ; mỗi lần chỉ được thăng , giáng 01 cấp_bậc hàm , trừ trường_hợp đặc_biệt mới xét thăng , giáng nhiều cấp_bậc hàm . Người có thẩm_quyền bổ_nhiệm chức_vụ nào thì có thẩm_quyền miễn_nhiệm , cách_chức , giáng chức đối_với chức_vụ đó . Người có thẩm_quyền bổ_nhiệm chức_danh nào thì có thẩm_quyền miễn_nhiệm đối_với chức_danh đó .", "Thẩm_quyền duyệt kế_hoạch Đại_hội Đoàn các cấp Ban Thường_vụ Đoàn cấp trên trực_tiếp có trách_nhiệm và thẩm_quyền duyệt kế_hoạch Đại_hội Đoàn các đơn_vị trực_thuộc . Ban Bí_thư Trung_ương Đoàn duyệt kế_hoạch Đại_hội Đoàn cấp tỉnh ."]}, {"source_sentence": "Ai có quyền ký hợp_đồng cộng tác_viên với người đáp_ứng đủ tiêu_chuẩn có nguyện_vọng làm Cộng tác_viên pháp điển ?", "sentences": ["Thẩm_quyền lập biên_bản_vi_phạm hành_chính trong lĩnh_vực Kiểm_toán_Nhà_nước_Người có thẩm_quyền lập biên_bản_vi_phạm hành_chính trong lĩnh_vực Kiểm_toán_Nhà_nước quy_định tại Điều_15 của Pháp_lệnh số { 04 / 2023 / UBTVQH15 , } bao_gồm : Kiểm toán_viên nhà_nước ; Tổ_trưởng tổ kiểm_toán ; Phó trưởng_đoàn kiểm_toán ; Trưởng_đoàn kiểm_toán ; đ ) Kiểm toán_trưởng . Trường_hợp người đang thi_hành nhiệm_vụ kiểm_toán , kiểm_tra thực_hiện kết_luận , kiến_nghị kiểm_toán , nhiệm_vụ tiếp_nhận báo_cáo cáo định_kỳ hoặc nhiệm_vụ khác mà không phải là người có thẩm_quyền lập biên_bản_vi_phạm hành_chính , nếu phát_hiện_hành_vi vi_phạm hành_chính trong lĩnh_vực Kiểm_toán_Nhà_nước thì phải lập biên_bản làm_việc để ghi_nhận sự_việc và chuyển ngay biên_bản làm_việc đến người có thẩm_quyền để lập biên_bản_vi_phạm hành_chính theo quy_định .", "\" Điều Đăng_ký_kết_hôn Việc kết_hôn phải được đăng_ký và do cơ_quan nhà_nước có thẩm_Quyền thực_hiện theo quy_định của Luật này và pháp Luật về hộ_tịch . Việc kết_hôn không được đăng_ký theo quy_định tại khoản này thì không có giá_trị pháp_lý . Vợ_chồng đã ly_hôn muốn xác_lập lại quan_hệ vợ_chồng thì phải đăng_ký kết_hôn . Điều Giải_quyết hậu_quả của việc nam , nữ chung sống với nhau như vợ_chồng mà không đăng_ký kết_hôn Nam , nữ có đủ điều_kiện kết_hôn theo quy_định của Luật này chung sống với nhau như vợ_chồng mà không đăng_ký kết_hôn thì không làm phát_sinh Quyền , nghĩa_vụ giữa vợ và chồng . Quyền , nghĩa_vụ đối_với con , tài_sản , nghĩa_vụ và hợp_đồng giữa các bên được giải_quyết theo quy_định tại Điều_15 và Điều_16 của Luật này . Trong trường_hợp nam , nữ chung sống với nhau như vợ_chồng theo quy_định tại Khoản 1_Điều này nhưng sau đó thực_hiện việc đăng_ký kết_hôn theo quy_định của pháp Luật thì quan_hệ hôn_nhân được xác_lập từ thời điểm đăng_ký kết_hôn . \"", "Thẩm_quyền , trách_nhiệm của các đơn_vị thuộc Bộ_Tư_pháp trong việc quản_lý , sử_dụng Cộng tác_viên Các đơn_vị thuộc Bộ_Tư_pháp Thủ_trưởng đơn_vị thực_hiện pháp điển có quyền ký hợp_đồng cộng_tác với người đáp_ứng đủ tiêu_chuẩn quy_định tại Điều_2 Quy_chế này , có nguyện_vọng làm Cộng tác_viên theo nhu_cầu thực_tế và phạm_vi , tính_chất công_việc thực_hiện pháp điển của đơn_vị ; thông_báo cho Cục Kiểm_tra văn_bản quy_phạm pháp_luật về việc ký hợp_đồng thuê Cộng tác_viên và tình_hình thực_hiện công_việc của Cộng tác_viên . Đơn_vị thực_hiện pháp điển không được sử_dụng cán_bộ , công_chức , viên_chức thuộc biên_chế của đơn_vị làm Cộng tác_viên với đơn_vị mình . Thủ_trưởng đơn_vị thuộc Bộ_Tư_pháp thực_hiện pháp điển có_thể tham_khảo Danh_sách nguồn Cộng tác_viên do Cục Kiểm_tra văn_bản quy_phạm pháp_luật lập để ký hợp_đồng thuê Cộng tác_viên thực_hiện công_tác pháp điển thuộc thẩm_quyền , trách_nhiệm của đơn_vị mình ."]}, {"source_sentence": "Ai có quyền_hủy bỏ kết_quả bầu_cử và quyết_định bầu_cử lại đại_biểu Quốc_hội ?", "sentences": ["\" Điều Thẩm_quyền quyết_định tạm hoãn gọi nhập_ngũ , miễn gọi nhập_ngũ và công_nhận hoàn_thành nghĩa_vụ quân_sự tại_ngũ Chủ_tịch Ủy_ban_nhân_dân cấp huyện quyết_định tạm hoãn gọi nhập_ngũ và miễn gọi nhập_ngũ đối_với công_dân quy_định tại Điều_41 của Luật này . Chỉ huy_trưởng Ban chỉ_huy quân_sự cấp huyện quyết_định công_nhận hoàn_thành nghĩa_vụ quân_sự tại_ngũ đối_với công_dân quy_định tại Khoản_4 Điều_4 của Luật này . \"", "Cơ_cấu tổ_chức Tổng cục_trưởng Tổng_cục Hải_quan quy_định nhiệm_vụ và quyền_hạn của các Phòng , Đội , Hải_Đội thuộc và trực_thuộc Cục Điều_tra chống buôn_lậu .", "Hủy_bỏ kết_quả bầu_cử và quyết_định bầu_cử lại Hội_đồng_Bầu_cử_Quốc_gia tự mình hoặc theo đề_nghị của Ủy_ban_Thường_vụ_Quốc_hội , Chính_phủ , Ủy_ban trung_ương Mặt_trận_Tổ_quốc Việt_Nam , Ủy_ban bầu_cử ở tỉnh Hủy_bỏ kết_quả bầu_cử ở khu_vực bỏ_phiếu , đơn_vị bầu_cử có vi_phạm_pháp_luật nghiêm_trọng và quyết_định ngày bầu_cử lại ở khu_vực bỏ_phiếu , đơn_vị bầu_cử đó . Trong trường_hợp bầu_cử lại thì ngày bầu_cử được tiến_hành chậm nhất là 15 ngày sau ngày bầu_cử đầu_tiên . Trong cuộc bầu_cử lại , cử_tri chỉ chọn bầu trong danh_sách những người ứng_cử tại cuộc bầu_cử đầu_tiên ."]}], "model-index": [{"name": "SentenceTransformer based on Tnt3o5/tnt_v4_lega_new_tokens", "results": [{"task": {"type": "information-retrieval", "name": "Information Retrieval"}, "dataset": {"name": "dim 256", "type": "dim_256"}, "metrics": [{"type": "cosine_accuracy@1", "value": 0.4254, "name": "Cosine Accuracy@1"}, {"type": "cosine_accuracy@3", "value": 0.6052, "name": "Cosine Accuracy@3"}, {"type": "cosine_accuracy@5", "value": 0.6636, "name": "Cosine Accuracy@5"}, {"type": "cosine_accuracy@10", "value": 0.7248, "name": "Cosine Accuracy@10"}, {"type": "cosine_precision@1", "value": 0.4254, "name": "Cosine Precision@1"}, {"type": "cosine_precision@3", "value": 0.20706666666666665, "name": "Cosine Precision@3"}, {"type": "cosine_precision@5", "value": 0.13752, "name": "Cosine Precision@5"}, {"type": "cosine_precision@10", "value": 0.07594, "name": "Cosine Precision@10"}, {"type": "cosine_recall@1", "value": 0.4051, "name": "Cosine Recall@1"}, {"type": "cosine_recall@3", "value": 0.58215, "name": "Cosine Recall@3"}, {"type": "cosine_recall@5", "value": 0.6421, "name": "Cosine Recall@5"}, {"type": "cosine_recall@10", "value": 0.7052, "name": "Cosine Recall@10"}, {"type": "cosine_ndcg@10", "value": 0.5619612781230402, "name": "Cosine Ndcg@10"}, {"type": "cosine_mrr@10", "value": 0.526433492063493, "name": "Cosine Mrr@10"}, {"type": "cosine_map@100", "value": 0.514814431994549, "name": "Cosine Map@100"}]}, {"task": {"type": "information-retrieval", "name": "Information Retrieval"}, "dataset": {"name": "dim 128", "type": "dim_128"}, "metrics": [{"type": "cosine_accuracy@1", "value": 0.4264, "name": "Cosine Accuracy@1"}, {"type": "cosine_accuracy@3", "value": 0.6, "name": "Cosine Accuracy@3"}, {"type": "cosine_accuracy@5", "value": 0.662, "name": "Cosine Accuracy@5"}, {"type": "cosine_accuracy@10", "value": 0.7194, "name": "Cosine Accuracy@10"}, {"type": "cosine_precision@1", "value": 0.4264, "name": "Cosine Precision@1"}, {"type": "cosine_precision@3", "value": 0.2053333333333333, "name": "Cosine Precision@3"}, {"type": "cosine_precision@5", "value": 0.13707999999999998, "name": "Cosine Precision@5"}, {"type": "cosine_precision@10", "value": 0.07544, "name": "Cosine Precision@10"}, {"type": "cosine_recall@1", "value": 0.40606666666666663, "name": "Cosine Recall@1"}, {"type": "cosine_recall@3", "value": 0.57705, "name": "Cosine Recall@3"}, {"type": "cosine_recall@5", "value": 0.6404666666666667, "name": "Cosine Recall@5"}, {"type": "cosine_recall@10", "value": 0.70015, "name": "Cosine Recall@10"}, {"type": "cosine_ndcg@10", "value": 0.5591685699820262, "name": "Cosine Ndcg@10"}, {"type": "cosine_mrr@10", "value": 0.5244388095238101, "name": "Cosine Mrr@10"}, {"type": "cosine_map@100", "value": 0.5128272708639572, "name": "Cosine Map@100"}]}, {"task": {"type": "information-retrieval", "name": "Information Retrieval"}, "dataset": {"name": "dim 64", "type": "dim_64"}, "metrics": [{"type": "cosine_accuracy@1", "value": 0.4076, "name": "Cosine Accuracy@1"}, {"type": "cosine_accuracy@3", "value": 0.5866, "name": "Cosine Accuracy@3"}, {"type": "cosine_accuracy@5", "value": 0.6478, "name": "Cosine Accuracy@5"}, {"type": "cosine_accuracy@10", "value": 0.708, "name": "Cosine Accuracy@10"}, {"type": "cosine_precision@1", "value": 0.4076, "name": "Cosine Precision@1"}, {"type": "cosine_precision@3", "value": 0.20026666666666665, "name": "Cosine Precision@3"}, {"type": "cosine_precision@5", "value": 0.13403999999999996, "name": "Cosine Precision@5"}, {"type": "cosine_precision@10", "value": 0.0741, "name": "Cosine Precision@10"}, {"type": "cosine_recall@1", "value": 0.38761666666666666, "name": "Cosine Recall@1"}, {"type": "cosine_recall@3", "value": 0.5637666666666666, "name": "Cosine Recall@3"}, {"type": "cosine_recall@5", "value": 0.6255666666666667, "name": "Cosine Recall@5"}, {"type": "cosine_recall@10", "value": 0.6879833333333333, "name": "Cosine Recall@10"}, {"type": "cosine_ndcg@10", "value": 0.5444437738024127, "name": "Cosine Ndcg@10"}, {"type": "cosine_mrr@10", "value": 0.5090488888888896, "name": "Cosine Mrr@10"}, {"type": "cosine_map@100", "value": 0.49745729547355066, "name": "Cosine Map@100"}]}]}]}

|

dataset

| null | 400 |

Y-J-Ju/ModernBERT-base-ColBERT

|

Y-J-Ju

|

sentence-similarity

|

[

"PyLate",

"safetensors",

"modernbert",

"ColBERT",

"sentence-transformers",

"sentence-similarity",

"feature-extraction",

"generated_from_trainer",

"dataset_size:808728",

"loss:Distillation",

"en",

"dataset:lightonai/ms-marco-en-bge",

"arxiv:1908.10084",

"base_model:answerdotai/ModernBERT-base",

"base_model:finetune:answerdotai/ModernBERT-base",

"region:us"

] | 2025-01-03T05:46:23Z |

2025-01-20T13:40:43+00:00

| 297 | 6 |

---

base_model: answerdotai/ModernBERT-base

datasets:

- lightonai/ms-marco-en-bge

language:

- en

library_name: PyLate

pipeline_tag: sentence-similarity

tags:

- ColBERT

- PyLate

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer