| id (string, 6 to 113 chars) | author (string, 2 to 36 chars) | task_category (string, 42 classes) | tags (list, 1 to 4.05k items) | created_time (timestamp[ns, tz=UTC], 2022-03-02 23:29:04 to 2025-04-10 08:38:38) | last_modified (date string, 2020-05-14 13:13:12 to 2025-04-19 04:15:39) | downloads (int64, 0 to 118M) | likes (int64, 0 to 4.86k) | README (string, 30 to 1.01M chars) | matched_bigbio_names (list, 1 to 8 items, nullable) | is_bionlp (string, 3 classes) | model_cards (string, 0 to 1M chars) | metadata (string, 2 to 698k chars) | source (string, 2 classes) | matched_task (list, 1 to 10 items, nullable) | __index_level_0__ (int64, 0 to 46.9k) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

interneuronai/az-pandalm

|

interneuronai

| null |

[

"peft",

"safetensors",

"base_model:WeOpenML/PandaLM-7B-v1",

"base_model:adapter:WeOpenML/PandaLM-7B-v1",

"region:us"

] | 2024-03-09T13:03:56Z |

2024-03-09T18:08:52+00:00

| 1 | 0 |

---

base_model: WeOpenML/PandaLM-7B-v1

library_name: peft

---

Model Details

Original Model: WeOpenML/PandaLM-7B-v1

Fine-Tuned For: Azerbaijani language understanding and generation

Dataset Used: Azerbaijani translation of the Stanford Alpaca dataset

Fine-Tuning Method: Self-instruct method

This model is part of the ["project/Barbarossa"](https://github.com/Alas-Development-Center/project-barbarossa) initiative, which aims to enhance natural language processing capabilities for the Azerbaijani language. By fine-tuning this model on the Azerbaijani translation of the Stanford Alpaca dataset using the self-instruct method, we've made significant strides in improving AI's understanding and generation of Azerbaijani text.

__Our primary objective with this model is to offer insights into the feasibility and outcomes of fine-tuning large language models (LLMs) for the Azerbaijani language. The fine-tuning was done with limited resources, so the result offers practical lessons rather than a production-ready model. We therefore recommend treating this model as a reference for understanding the potential and challenges of fine-tuning LLMs for specific languages: a foundational step towards further research and development, not a direct solution for production environments.__

This project is a proud product of the [Alas Development Center (ADC)](https://az.linkedin.com/company/alas-development-center?trk=ppro_cprof). We are thrilled to offer these fine-tuned large language models to the public, free of charge.

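How to use

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# This repository is a PEFT adapter on top of WeOpenML/PandaLM-7B-v1.
# Loading it through AutoModelForCausalLM requires the `peft` package to be installed.
model_path = "alasdevcenter/az-pandalm"

model = AutoModelForCausalLM.from_pretrained(model_path)
tokenizer = AutoTokenizer.from_pretrained(model_path)

# max_length counts the prompt tokens as well as the generated ones.
pipe = pipeline(task="text-generation", model=model, tokenizer=tokenizer, max_length=200)

# Instruction: "Nature conservation"
instruction = "Təbiətin qorunması"

# Alpaca-style prompt in Azerbaijani. The preamble reads: "Below is an instruction that
# provides more context. Write a response that adequately completes the request."
# "### Təlimat:" = "### Instruction:", "### Cavab:" = "### Response:"
formatted_prompt = f"""Aşağıda daha çox kontekst təmin edən təlimat var. Sorğunu adekvat şəkildə tamamlayan cavab yazın.

### Təlimat:
{instruction}

### Cavab:
"""

result = pipe(formatted_prompt)

# By default, generated_text contains the prompt followed by the model's continuation.
print(result[0]["generated_text"])
```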
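If you build prompts for many instructions, the template from the usage example can be wrapped in a small helper, together with a function that strips the echoed prompt from the pipeline output. This is a sketch, not part of the released code: `build_prompt` and `extract_response` are hypothetical names, and the exact whitespace of the template (one blank line between sections) is an assumption, since the usage example is the only place the card documents the prompt format.

```python
PREAMBLE = (
    "Aşağıda daha çox kontekst təmin edən təlimat var. "
    "Sorğunu adekvat şəkildə tamamlayan cavab yazın."
)
INSTRUCTION_HEADER = "### Təlimat:"  # "### Instruction:"
RESPONSE_HEADER = "### Cavab:"       # "### Response:"


def build_prompt(instruction: str) -> str:
    """Wrap an instruction in the Alpaca-style Azerbaijani template (assumed layout)."""
    return f"{PREAMBLE}\n\n{INSTRUCTION_HEADER}\n{instruction.strip()}\n\n{RESPONSE_HEADER}\n"


def extract_response(generated_text: str) -> str:
    """Drop the echoed prompt and keep only the model's answer.

    By default the text-generation pipeline returns the prompt followed by the
    continuation, so everything up to the first response header is discarded.
    """
    _, header, answer = generated_text.partition(RESPONSE_HEADER)
    return answer.strip() if header else generated_text.strip()


prompt = build_prompt("Təbiətin qorunması ")
print(prompt)
```

`partition` splits at the first response header, which is the one inside the prompt, so any further headers the model happens to generate stay in the returned answer instead of silently truncating it.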

| null |

Non_BioNLP

|


|

{"base_model": "WeOpenML/PandaLM-7B-v1", "library_name": "peft"}

|

task

|

[

"TRANSLATION"

] | 45,791 |

joelsolomon/marian-finetuned-kde4-en-to-fr

|

joelsolomon

|

translation

|

[

"transformers",

"tensorboard",

"safetensors",

"marian",

"text2text-generation",

"translation",

"generated_from_trainer",

"base_model:Helsinki-NLP/opus-mt-en-fr",

"base_model:finetune:Helsinki-NLP/opus-mt-en-fr",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | 2024-12-01T19:01:12Z |

2024-12-01T23:02:55+00:00

| 4 | 0 |

---

base_model: Helsinki-NLP/opus-mt-en-fr

library_name: transformers

license: apache-2.0

metrics:

- bleu

tags:

- translation

- generated_from_trainer

model-index:

- name: marian-finetuned-kde4-en-to-fr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# marian-finetuned-kde4-en-to-fr

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-en-fr](https://huggingface.co/Helsinki-NLP/opus-mt-en-fr) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8554

- Model Preparation Time: 0.0039

- BLEU: 52.9020

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 64

- seed: 42

- optimizer: adamw_torch with betas=(0.9,0.999) and epsilon=1e-08 (no additional optimizer arguments)

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP
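With `lr_scheduler_type: linear` and no warmup listed (presumably the Trainer default of zero warmup steps), the learning rate decays linearly from 2e-05 to zero over the run. The sketch below reimplements the multiplier used by `transformers.get_linear_schedule_with_warmup` in plain Python so it runs without dependencies; the run length in the usage lines is hypothetical, because the card does not state the dataset size.

```python
def linear_schedule_lr(step: int, total_steps: int, base_lr: float = 2e-5, warmup_steps: int = 0) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Linear warmup from 0 to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr (end of warmup) to 0 (at total_steps).
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Hypothetical run length: 3 epochs of 1,000 optimizer steps each.
total = 3 * 1000
for step in (0, total // 2, total):
    print(step, linear_schedule_lr(step, total))
```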

### Training results

### Framework versions

- Transformers 4.46.2

- Pytorch 2.5.1+cu121

- Tokenizers 0.20.3

| null |

Non_BioNLP

|


|

{"base_model": "Helsinki-NLP/opus-mt-en-fr", "library_name": "transformers", "license": "apache-2.0", "metrics": ["bleu"], "tags": ["translation", "generated_from_trainer"], "model-index": [{"name": "marian-finetuned-kde4-en-to-fr", "results": []}]}

|

task

|

[

"TRANSLATION"

] | 45,792 |

google/t5-efficient-tiny-nh16

|

google

|

text2text-generation

|

[

"transformers",

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"deep-narrow",

"en",

"dataset:c4",

"arxiv:2109.10686",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | 2022-03-02T23:29:05Z |

2023-01-24T16:51:06+00:00

| 116 | 0 |

---

datasets:

- c4

language:

- en

license: apache-2.0

tags:

- deep-narrow

inference: false

---

# T5-Efficient-TINY-NH16 (Deep-Narrow version)

T5-Efficient-TINY-NH16 is a variation of [Google's original T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) following the [T5 model architecture](https://huggingface.co/docs/transformers/model_doc/t5).

It is a *pretrained-only* checkpoint and was released with the paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

In a nutshell, the paper indicates that a **Deep-Narrow** model architecture is favorable for **downstream** performance compared to other model architectures of similar parameter count.

To quote the paper:

> We generally recommend a DeepNarrow strategy where the model’s depth is preferentially increased
> before considering any other forms of uniform scaling across other dimensions. This is largely due to
> how much depth influences the Pareto-frontier as shown in earlier sections of the paper. Specifically, a
> tall small (deep and narrow) model is generally more efficient compared to the base model. Likewise,
> a tall base model might also [be] generally more efficient compared to a large model. We generally find
> that, regardless of size, even if absolute performance might increase as we continue to stack layers,
> the relative gain of Pareto-efficiency diminishes as we increase the layers, converging at 32 to 36
> layers. Finally, we note that our notion of efficiency here relates to any one compute dimension, i.e.,
> params, FLOPs or throughput (speed). We report all three key efficiency metrics (number of params,
> FLOPS and speed) and leave this decision to the practitioner to decide which compute dimension to
> consider.

To be more precise, *model depth* is defined as the number of transformer blocks that are stacked sequentially.

A sequence of word embeddings is therefore processed sequentially by each transformer block.

## Model architecture details

This model checkpoint - **t5-efficient-tiny-nh16** - is of model type **Tiny** with the following variations:

- **nh** is **16**

It has **25.02** million parameters and thus requires *ca.* **100.07 MB** of memory in full precision (*fp32*) or **50.04 MB** of memory in half precision (*fp16* or *bf16*).
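The memory figures follow from multiplying the parameter count by the bytes per parameter (4 for fp32, 2 for fp16/bf16) and counting 1 MB as 10^6 bytes. The card's 25.02 million is itself rounded, so this sketch reproduces the fp32 figure only to within about 0.01 MB. It covers the weights alone, not activations, optimizer state or framework overhead.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}


def weight_memory_mb(num_params: float, dtype: str) -> float:
    """Approximate size of the weights alone, in MB (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


params = 25.02e6  # rounded parameter count from the card
for dtype in ("fp32", "fp16"):
    print(dtype, round(weight_memory_mb(params, dtype), 2))
```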

A summary of the *original* T5 model architectures can be seen here:

| Model | nl (el/dl) | ff | dm | kv | nh | #Params|
| ----| ---- | ---- | ---- | ---- | ---- | ----|
| Tiny | 4/4 | 1024 | 256 | 32 | 4 | 16M|
| Mini | 4/4 | 1536 | 384 | 32 | 8 | 31M|
| Small | 6/6 | 2048 | 512 | 32 | 8 | 60M|
| Base | 12/12 | 3072 | 768 | 64 | 12 | 220M|
| Large | 24/24 | 4096 | 1024 | 64 | 16 | 738M|
| XL | 24/24 | 16384 | 1024 | 128 | 32 | 3B|
| XXL | 24/24 | 65536 | 1024 | 128 | 128 | 11B|

where the following abbreviations are used:

| Abbreviation | Definition |
| ----| ---- |
| nl | Number of transformer blocks (depth) |
| dm | Dimension of embedding vector (output vector of transformer block) |
| kv | Dimension of key/value projection matrix |
| nh | Number of attention heads |
| ff | Dimension of intermediate vector within transformer block (size of feed-forward projection matrix) |
| el | Number of transformer blocks in the encoder (encoder depth) |
| dl | Number of transformer blocks in the decoder (decoder depth) |
| sh | Signifies that attention heads are shared |
| skv | Signifies that key/value projection matrices are tied |

If a model checkpoint does not specify *el* or *dl*, then both the number of encoder layers and the number of decoder layers correspond to *nl*.
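The defaulting rule and the table's abbreviations can be captured in a few lines. Below is a minimal sketch using a hypothetical `T5Dims` dataclass; its field names borrow those of Hugging Face's `T5Config` (`num_layers`, `num_decoder_layers`, `d_model`, `d_ff`, `d_kv`, `num_heads`), where `num_decoder_layers` likewise falls back to `num_layers` when unset. The values are the Tiny row of the table with **nh** changed to 16, as described for this checkpoint.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class T5Dims:
    """Hypothetical container for the abbreviations in the table above."""
    num_layers: int                           # nl (encoder depth el when el is not given)
    d_model: int                              # dm
    d_ff: int                                 # ff
    d_kv: int                                 # kv
    num_heads: int                            # nh
    num_decoder_layers: Optional[int] = None  # dl; defaults to nl

    @property
    def encoder_layers(self) -> int:
        return self.num_layers

    @property
    def decoder_layers(self) -> int:
        return self.num_layers if self.num_decoder_layers is None else self.num_decoder_layers

    @property
    def attention_inner_dim(self) -> int:
        # Width of all attention heads concatenated: nh * kv.
        return self.num_heads * self.d_kv


# Tiny (nl=4, ff=1024, dm=256, kv=32) with the nh16 variation applied.
tiny_nh16 = T5Dims(num_layers=4, d_model=256, d_ff=1024, d_kv=32, num_heads=16)
print(tiny_nh16.encoder_layers, tiny_nh16.decoder_layers, tiny_nh16.attention_inner_dim)
```

With 16 heads of size 32, the concatenated head width (512) is twice *dm*; T5's attention output projection maps it back to *dm*, so the two need not match.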

## Pre-Training

The checkpoint was pretrained on the [Colossal, Cleaned version of Common Crawl (C4)](https://huggingface.co/datasets/c4) for 524,288 steps using the span-based masked language modeling (MLM) objective.
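To illustrate the span-based objective: contiguous spans of the input are replaced by sentinel tokens, and the target lists each sentinel followed by the tokens it replaced, closed by one final sentinel. This sketch uses the sentinel naming of the Hugging Face T5 tokenizer (`<extra_id_0>`, `<extra_id_1>`, ...) and takes the spans as explicit, hand-picked arguments. Actual pre-training samples spans randomly (the T5 paper uses a 15% corruption rate with a mean span length of 3), which is not reproduced here.

```python
from typing import List, Sequence, Tuple


def span_corrupt(tokens: Sequence[str], spans: Sequence[Tuple[int, int]]) -> Tuple[List[str], List[str]]:
    """Apply T5-style span corruption with given half-open (start, end) spans.

    Spans must be sorted and non-overlapping.
    """
    inputs: List[str] = []
    targets: List[str] = []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])  # keep the uncorrupted prefix
        inputs.append(sentinel)              # one sentinel replaces the whole span
        targets.append(sentinel)
        targets.extend(tokens[start:end])    # the model must reconstruct these
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # final sentinel closes the target
    return inputs, targets


words = "Thank you for inviting me to your party last week .".split()
inp, tgt = span_corrupt(words, [(2, 4), (8, 9)])
print(" ".join(inp))  # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(" ".join(tgt))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```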

## Fine-Tuning

**Note**: This model is a **pretrained** checkpoint and has to be fine-tuned for practical usage.

The checkpoint was pretrained in English and is therefore only useful for English NLP tasks.

You can follow one of the following examples on how to fine-tune the model:

*PyTorch*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization)

- [Question Answering](https://github.com/huggingface/transformers/blob/master/examples/pytorch/question-answering/run_seq2seq_qa.py)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/pytorch/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*TensorFlow*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*JAX/Flax*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/flax/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/flax/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.
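For text classification, the adaptation mostly comes down to expressing labels as target text, because an encoder-decoder model generates its prediction instead of producing class logits. A minimal sketch of that conversion, assuming a hypothetical binary sentiment task with an `sst2 sentence:` task prefix (similar to the prefixes used in the T5 paper; any consistent prefix would do for a fresh fine-tune) and hypothetical label words:

```python
from typing import Dict, List, Optional

LABEL_WORDS: List[str] = ["negative", "positive"]  # hypothetical label set
PREFIX = "sst2 sentence: "                          # hypothetical task prefix


def to_text_pair(text: str, label_id: int) -> Dict[str, str]:
    """Turn one (text, class id) example into a seq2seq (source, target) pair."""
    return {"source": PREFIX + text, "target": LABEL_WORDS[label_id]}


def from_generated(output: str) -> Optional[int]:
    """Map generated text back to a class id; None if it is not a known label."""
    word = output.strip().lower()
    return LABEL_WORDS.index(word) if word in LABEL_WORDS else None


pair = to_text_pair("a gripping, well-acted film", 1)
print(pair)  # {'source': 'sst2 sentence: a gripping, well-acted film', 'target': 'positive'}
print(from_generated(" Positive "), from_generated("neutral"))  # 1 None
```

Returning `None` for unknown strings makes it easy to count outputs that fall outside the label set, which a generative classifier can produce.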

## Downstream Performance

TODO: Add table if available

## Computational Complexity

TODO: Add table if available

## More information

We strongly recommend reading the original paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** carefully to get a more nuanced understanding of this model checkpoint.

As explained in the following [issue](https://github.com/google-research/google-research/issues/986#issuecomment-1035051145), checkpoints that include the *sh* or *skv* model architecture variations have *not* been ported to Transformers, as they are probably of limited practical use and lack a more detailed description. Those checkpoints are kept [here](https://huggingface.co/NewT5SharedHeadsSharedKeyValues) and may be ported in the future.

| null |

Non_BioNLP

|


|

{"datasets": ["c4"], "language": ["en"], "license": "apache-2.0", "tags": ["deep-narrow"], "inference": false}

|

task

|

[

"TEXT_CLASSIFICATION",

"QUESTION_ANSWERING",

"SUMMARIZATION"

] | 45,793 |

mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF

|

mradermacher

|

translation

|

[

"transformers",

"gguf",

"mergekit",

"merge",

"translation",

"japanese_media",

"otaku_media",

"visual_novels",

"VNs",

"en",

"ja",

"base_model:Casual-Autopsy/Llama-3-VNTL-Yollisa-8B",

"base_model:quantized:Casual-Autopsy/Llama-3-VNTL-Yollisa-8B",

"endpoints_compatible",

"region:us"

] | 2025-03-05T19:27:44Z |

2025-03-05T23:14:12+00:00

| 446 | 1 |

---

base_model: Casual-Autopsy/Llama-3-VNTL-Yollisa-8B

language:

- en

- ja

library_name: transformers

tags:

- mergekit

- merge

- translation

- japanese_media

- otaku_media

- visual_novels

- VNs

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/Casual-Autopsy/Llama-3-VNTL-Yollisa-8B

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.
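None of the files in the table below are split, but when a repository ships a large quant as plain byte-split parts, concatenating them in order reproduces the original file (what `cat part1 part2 > file` does on Unix). A minimal, cross-platform sketch; the part names in the commented usage are hypothetical. This does not apply to shards produced by llama.cpp's `gguf-split` tool: each of those carries its own GGUF header, so they must not be joined by plain byte concatenation.

```python
import shutil
from pathlib import Path
from typing import Sequence


def concatenate_parts(parts: Sequence[Path], output: Path, chunk_size: int = 16 * 1024 * 1024) -> None:
    """Byte-concatenate `parts`, in the given order, into `output`."""
    with output.open("wb") as dst:
        for part in parts:
            with part.open("rb") as src:
                # Stream in chunks so multi-GB parts never sit fully in memory.
                shutil.copyfileobj(src, dst, chunk_size)


# Hypothetical part names; list the parts in their numbered order.
# concatenate_parts(
#     [Path("model.Q8_0.gguf.part1of2"), Path("model.Q8_0.gguf.part2of2")],
#     Path("model.Q8_0.gguf"),
# )
```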

## Provided Quants

(sorted by size, not necessarily quality; IQ-quants are often preferable to similar-sized non-IQ quants)

| Link | Type | Size/GB | Notes |
|:-----|:-----|--------:|:------|
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q2_K.gguf) | Q2_K | 3.3 | |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q3_K_S.gguf) | Q3_K_S | 3.8 | |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q3_K_L.gguf) | Q3_K_L | 4.4 | |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.IQ4_XS.gguf) | IQ4_XS | 4.6 | |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q5_K_S.gguf) | Q5_K_S | 5.7 | |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q5_K_M.gguf) | Q5_K_M | 5.8 | |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q6_K.gguf) | Q6_K | 6.7 | very good quality |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |
| [GGUF](https://huggingface.co/mradermacher/Llama-3-VNTL-Yollisa-8B-GGUF/resolve/main/Llama-3-VNTL-Yollisa-8B.f16.gguf) | f16 | 16.2 | 16 bpw, overkill |
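To compare the quants on a common scale, the file sizes can be converted to approximate bits per weight (bpw). This rough sketch assumes the sizes are in units of 10^9 bytes and that the model has about 8.03 billion parameters, the published size of Llama 3 8B, on which this 8B merge is presumably based. It ignores file metadata and any tensors kept at higher precision, so the results are only indicative.

```python
PARAMS = 8.03e9  # assumed parameter count (Llama 3 8B)

SIZES_GB = {  # copied from the table above
    "Q2_K": 3.3, "Q3_K_S": 3.8, "Q3_K_M": 4.1, "Q3_K_L": 4.4,
    "IQ4_XS": 4.6, "Q4_K_S": 4.8, "Q4_K_M": 5.0, "Q5_K_S": 5.7,
    "Q5_K_M": 5.8, "Q6_K": 6.7, "Q8_0": 8.6, "f16": 16.2,
}


def bits_per_weight(size_gb: float, num_params: float = PARAMS) -> float:
    """Average bits per parameter implied by a file size (1 GB = 10**9 bytes)."""
    return size_gb * 1e9 * 8 / num_params


for name, size in SIZES_GB.items():
    print(f"{name:7s} {bits_per_weight(size):5.2f} bpw")
```

The f16 entry comes out at about 16.1 bpw, consistent with the table's "16 bpw" note, which supports reading the sizes as decimal gigabytes.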

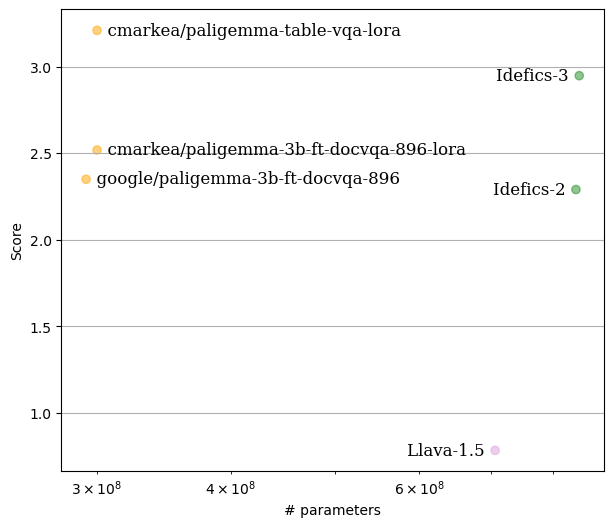

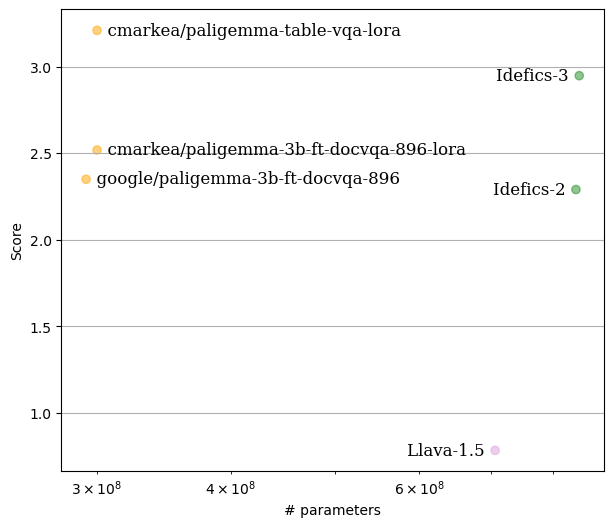

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time.

<!-- end -->

| null |

Non_BioNLP

|


|

{"base_model": "Casual-Autopsy/Llama-3-VNTL-Yollisa-8B", "language": ["en", "ja"], "library_name": "transformers", "tags": ["mergekit", "merge", "translation", "japanese_media", "otaku_media", "visual_novels", "VNs"], "quantized_by": "mradermacher"}

|

task

|

[

"TRANSLATION"

] | 45,794 |

Cyber-ThreaD/CyBERT-DNRTI

|

Cyber-ThreaD

|

token-classification

|

[

"transformers",

"tensorboard",

"safetensors",

"roberta",

"token-classification",

"generated_from_trainer",

"base_model:SynamicTechnologies/CYBERT",

"base_model:finetune:SynamicTechnologies/CYBERT",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | 2023-12-06T16:04:44Z |

2024-12-02T13:51:22+00:00

| 36 | 0 |

---

base_model: SynamicTechnologies/CYBERT

metrics:

- precision

- recall

- f1

- accuracy

tags:

- generated_from_trainer

model-index:

- name: anonymouspd/CyBERT-DNRTI

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Cyber-ThreaD/CyBERT-DNRTI

This model is a fine-tuned version of [SynamicTechnologies/CYBERT](https://huggingface.co/SynamicTechnologies/CYBERT) on the [DNRTI](https://github.com/SCreaMxp/DNRTI-A-Large-scale-Dataset-for-Named-Entity-Recognition-in-Threat-Intelligence) dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3378

- Precision: 0.5628

- Recall: 0.6439

- F1: 0.6006

- Accuracy: 0.9077

It achieves the following results on the prediction set:

- Loss: 0.2841

- Precision: 0.6301

- Recall: 0.6926

- F1: 0.6599

- Accuracy: 0.9201
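The gap between accuracy (about 0.91) and F1 (about 0.60) is consistent with accuracy being counted per token while precision, recall and F1 are counted per complete entity, as in the Hugging Face token-classification examples (the seqeval convention). The card does not say which script produced these numbers, so treat that as an assumption. The sketch extracts entities from BIO tags with a simplified rule, scores them at entity level, and checks that the reported F1 values are the harmonic mean of the reported precision and recall. The tag names in the test are hypothetical.

```python
from typing import List, Set, Tuple

Entity = Tuple[str, int, int]  # (type, start, end) with end exclusive


def bio_entities(tags: List[str]) -> Set[Entity]:
    """Collect entities from BIO tags (simplified: an I- tag must continue a same-type entity)."""
    entities: Set[Entity] = set()
    etype, start = None, 0
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" flushes the last entity
        continues = tag.startswith("I-") and etype == tag[2:]
        if not continues:
            if etype is not None:
                entities.add((etype, start, i))
            etype, start = (tag[2:], i) if tag != "O" else (None, i)
    return entities


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def prf(gold: List[List[str]], pred: List[List[str]]) -> Tuple[float, float, float]:
    """Entity-level precision, recall and F1 over a corpus of tag sequences."""
    tp = n_pred = n_gold = 0
    for g, p in zip(gold, pred):
        ge, pe = bio_entities(g), bio_entities(p)
        tp += len(ge & pe)  # exact match on type and boundaries
        n_pred += len(pe)
        n_gold += len(ge)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    return precision, recall, f1(precision, recall)


# The reported F1 values agree with the reported precision and recall.
print(round(f1(0.5628, 0.6439), 4))  # evaluation set: 0.6006
print(round(f1(0.6301, 0.6926), 4))  # prediction set: 0.6599
```

In a toy sentence tagged `B-Org I-Org O B-Tool` and predicted as `B-Org I-Org O B-Area`, three of four tokens are correct (token accuracy 0.75), yet only one of two entities matches exactly, so entity-level F1 is 0.5.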

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| 0.8529 | 0.76 | 500 | 0.5937 | 0.4470 | 0.3593 | 0.3984 | 0.8508 |
| 0.5566 | 1.52 | 1000 | 0.5027 | 0.4669 | 0.4196 | 0.4420 | 0.8636 |
| 0.4678 | 2.28 | 1500 | 0.4671 | 0.4706 | 0.4832 | 0.4768 | 0.8694 |
| 0.4038 | 3.04 | 2000 | 0.4320 | 0.4629 | 0.5371 | 0.4972 | 0.8739 |
| 0.3572 | 3.81 | 2500 | 0.4002 | 0.5134 | 0.5394 | 0.5261 | 0.8858 |
| 0.3167 | 4.57 | 3000 | 0.4047 | 0.4691 | 0.6094 | 0.5302 | 0.8826 |
| 0.2987 | 5.33 | 3500 | 0.3761 | 0.5158 | 0.5854 | 0.5484 | 0.8948 |
| 0.2706 | 6.09 | 4000 | 0.3558 | 0.5362 | 0.6066 | 0.5693 | 0.9001 |
| 0.2461 | 6.85 | 4500 | 0.3493 | 0.5511 | 0.5735 | 0.5621 | 0.9028 |
| 0.2311 | 7.61 | 5000 | 0.3526 | 0.5334 | 0.6518 | 0.5867 | 0.9024 |
| 0.2171 | 8.37 | 5500 | 0.3418 | 0.5586 | 0.6407 | 0.5969 | 0.9071 |
| 0.2062 | 9.13 | 6000 | 0.3378 | 0.5628 | 0.6439 | 0.6006 | 0.9077 |
| 0.1972 | 9.89 | 6500 | 0.3384 | 0.5648 | 0.6527 | 0.6056 | 0.9087 |

### Framework versions

- Transformers 4.36.0.dev0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

### Citing & Authors

If you use this model, please cite the following work:

```

@inproceedings{deka2024attacker,

title={AttackER: Towards Enhancing Cyber-Attack Attribution with a Named Entity Recognition Dataset},

author={Deka, Pritam and Rajapaksha, Sampath and Rani, Ruby and Almutairi, Amirah and Karafili, Erisa},

booktitle={International Conference on Web Information Systems Engineering},

pages={255--270},

year={2024},

organization={Springer}

}

```

| null |

Non_BioNLP

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Cyber-ThreaD/CyBERT-DNRTI

This model is a fine-tuned version of [SynamicTechnologies/CYBERT](https://huggingface.co/SynamicTechnologies/CYBERT) on the [DNRTI](https://github.com/SCreaMxp/DNRTI-A-Large-scale-Dataset-for-Named-Entity-Recognition-in-Threat-Intelligence) dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3378

- Precision: 0.5628

- Recall: 0.6439

- F1: 0.6006

- Accuracy: 0.9077

It achieves the following results on the prediction set:

- Loss: 0.2841

- Precision: 0.6301

- Recall: 0.6926

- F1: 0.6599

- Accuracy: 0.9201

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.8529 | 0.76 | 500 | 0.5937 | 0.4470 | 0.3593 | 0.3984 | 0.8508 |

| 0.5566 | 1.52 | 1000 | 0.5027 | 0.4669 | 0.4196 | 0.4420 | 0.8636 |

| 0.4678 | 2.28 | 1500 | 0.4671 | 0.4706 | 0.4832 | 0.4768 | 0.8694 |

| 0.4038 | 3.04 | 2000 | 0.4320 | 0.4629 | 0.5371 | 0.4972 | 0.8739 |

| 0.3572 | 3.81 | 2500 | 0.4002 | 0.5134 | 0.5394 | 0.5261 | 0.8858 |

| 0.3167 | 4.57 | 3000 | 0.4047 | 0.4691 | 0.6094 | 0.5302 | 0.8826 |

| 0.2987 | 5.33 | 3500 | 0.3761 | 0.5158 | 0.5854 | 0.5484 | 0.8948 |

| 0.2706 | 6.09 | 4000 | 0.3558 | 0.5362 | 0.6066 | 0.5693 | 0.9001 |

| 0.2461 | 6.85 | 4500 | 0.3493 | 0.5511 | 0.5735 | 0.5621 | 0.9028 |

| 0.2311 | 7.61 | 5000 | 0.3526 | 0.5334 | 0.6518 | 0.5867 | 0.9024 |

| 0.2171 | 8.37 | 5500 | 0.3418 | 0.5586 | 0.6407 | 0.5969 | 0.9071 |

| 0.2062 | 9.13 | 6000 | 0.3378 | 0.5628 | 0.6439 | 0.6006 | 0.9077 |

| 0.1972 | 9.89 | 6500 | 0.3384 | 0.5648 | 0.6527 | 0.6056 | 0.9087 |

### Framework versions

- Transformers 4.36.0.dev0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

### Citing & Authors

If you use this model, please cite the following work:

```

@inproceedings{deka2024attacker,

title={AttackER: Towards Enhancing Cyber-Attack Attribution with a Named Entity Recognition Dataset},

author={Deka, Pritam and Rajapaksha, Sampath and Rani, Ruby and Almutairi, Amirah and Karafili, Erisa},

booktitle={International Conference on Web Information Systems Engineering},

pages={255--270},

year={2024},

organization={Springer}

}

```

|

{"base_model": "SynamicTechnologies/CYBERT", "metrics": ["precision", "recall", "f1", "accuracy"], "tags": ["generated_from_trainer"], "model-index": [{"name": "anonymouspd/CyBERT-DNRTI", "results": []}]}

|

task

|

[

"NAMED_ENTITY_RECOGNITION"

] | 45,795 |

diegoref/testtest

|

diegoref

|

text-classification

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | 2023-03-28T14:02:37Z |

2023-03-28T14:19:01+00:00

| 13 | 0 |

---

datasets:

- glue

license: apache-2.0

metrics:

- accuracy

- f1

tags:

- generated_from_trainer

model-index:

- name: testtest

results:

- task:

type: text-classification

name: Text Classification

dataset:

name: glue

type: glue

config: mrpc

split: validation

args: mrpc

metrics:

- type: accuracy

value: 0.8700980392156863

name: Accuracy

- type: f1

value: 0.9090909090909091

name: F1

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# testtest

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the GLUE MRPC dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6050

- Accuracy: 0.8701

- F1: 0.9091

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 459 | 0.3529 | 0.8627 | 0.9007 |

| 0.4988 | 2.0 | 918 | 0.4728 | 0.8652 | 0.9079 |

| 0.2792 | 3.0 | 1377 | 0.6050 | 0.8701 | 0.9091 |
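The step counts and the first-row "No log" entry in the table can be reproduced with simple arithmetic. The sketch below relies on three assumptions that the card does not state: the standard GLUE MRPC split sizes (3,668 training pairs and 408 validation pairs), no gradient accumulation, and the Trainer's default `logging_steps` of 500. Under these assumptions, the first training-loss log lands after the first evaluation at step 459, and the reported accuracy corresponds to 355 correct validation pairs.

```python
import math

# Assumed standard GLUE MRPC split sizes (not stated in the card).
MRPC_TRAIN = 3668
MRPC_VALIDATION = 408

BATCH_SIZE = 8        # train_batch_size from the card
EPOCHS = 3            # num_epochs from the card
LOGGING_STEPS = 500   # assumed Trainer default for logging_steps

steps_per_epoch = math.ceil(MRPC_TRAIN / BATCH_SIZE)
total_steps = steps_per_epoch * EPOCHS

# Epoch during which the first training-loss log happens; evaluation rows
# before it have no training loss to show ("No log").
first_logged_epoch = math.ceil(LOGGING_STEPS / steps_per_epoch)

# 355 correct predictions out of 408 reproduces the reported accuracy.
accuracy = 355 / MRPC_VALIDATION

print(steps_per_epoch, total_steps, first_logged_epoch, f"{accuracy:.4f}")  # 459 1377 2 0.8701
```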

### Framework versions

- Transformers 4.27.3

- Pytorch 1.13.1+cu116

- Datasets 2.10.1

- Tokenizers 0.13.2

| null |

Non_BioNLP

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# testtest

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the GLUE MRPC dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6050

- Accuracy: 0.8701

- F1: 0.9091

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 459 | 0.3529 | 0.8627 | 0.9007 |

| 0.4988 | 2.0 | 918 | 0.4728 | 0.8652 | 0.9079 |

| 0.2792 | 3.0 | 1377 | 0.6050 | 0.8701 | 0.9091 |

### Framework versions

- Transformers 4.27.3

- Pytorch 1.13.1+cu116

- Datasets 2.10.1

- Tokenizers 0.13.2

|

{"datasets": ["glue"], "license": "apache-2.0", "metrics": ["accuracy", "f1"], "tags": ["generated_from_trainer"], "model-index": [{"name": "testtest", "results": [{"task": {"type": "text-classification", "name": "Text Classification"}, "dataset": {"name": "glue", "type": "glue", "config": "mrpc", "split": "validation", "args": "mrpc"}, "metrics": [{"type": "accuracy", "value": 0.8700980392156863, "name": "Accuracy"}, {"type": "f1", "value": 0.9090909090909091, "name": "F1"}]}]}]}

|

task

|

[

"TEXT_CLASSIFICATION"

] | 45,796 |

allegro/BiDi-pol-slk

|

allegro

|

translation

|

[

"transformers",

"safetensors",

"marian",

"text2text-generation",

"translation",

"mt",

"pytorch",

"sentence-piece",

"multilingual",

"allegro",

"laniqo",

"cs",

"pl",

"sk",

"sl",

"en",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | 2025-01-28T13:15:09Z |

2025-02-10T23:46:08+00:00

| 39 | 0 |

---

language:

- cs

- pl

- sk

- sl

- en

library_name: transformers

license: cc-by-4.0

tags:

- translation

- mt

- marian

- pytorch

- sentence-piece

- multilingual

- allegro

- laniqo

---

# MultiSlav BiDi Models

<p align="center">

<a href="https://ml.allegro.tech/"><img src="allegro-title.svg" alt="MLR @ Allegro.com"></a>

</p>

## Multilingual BiDi MT Models

___BiDi___ is a collection of vanilla encoder-decoder Transformer models trained on sentence-level machine translation.

Each model supports bi-directional translation.

___BiDi___ models are part of the [___MultiSlav___ collection](https://huggingface.co/collections/allegro/multislav-6793d6b6419e5963e759a683). More information will be available soon in our upcoming MultiSlav paper.

Experiments were conducted as part of a research project by the [Machine Learning Research](https://ml.allegro.tech/) lab at [Allegro.com](https://ml.allegro.tech/).

Big thanks to [laniqo.com](https://laniqo.com) for their cooperation in the research.

<p align="center">

<img src="bi-di.svg">

</p>

The graphic above shows an example BiDi model, [BiDi-ces-pol](https://huggingface.co/allegro/bidi-ces-pol), translating from Polish to Czech.

___BiDi-ces-pol___ is a bi-directional model that supports translation both __from Czech to Polish__ and __from Polish to Czech__.

### Supported languages

To use a ___BiDi___ model, you must specify the target language of the translation.

Target-language tokens are 3-letter ISO 639-3 language codes wrapped in the format `>>xxx<<`.

All accepted target languages and their respective tokens are listed below.

Note that each model supports only two directions.

Each token was added as a special token to the SentencePiece tokenizer.

| **Target Language** | **First token** |

|---------------------|-----------------|

| Czech | `>>ces<<` |

| English | `>>eng<<` |

| Polish | `>>pol<<` |

| Slovak | `>>slk<<` |

| Slovene | `>>slv<<` |
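The sketch below shows how the target token and the repository choice fit together. It assumes repository IDs of the form `allegro/BiDi-{first}-{second}`, with the two codes in alphabetical order, as in the quickstart. The helper `build_request` is hypothetical and is not part of `transformers` or of the released models. It only prepares the input string and does not download anything.

```python
from typing import Tuple

# Target-language tokens from the table above.
TARGET_TOKENS = {
    "ces": ">>ces<<",
    "eng": ">>eng<<",
    "pol": ">>pol<<",
    "slk": ">>slk<<",
    "slv": ">>slv<<",
}


def build_request(text: str, source_lang: str, target_lang: str) -> Tuple[str, str]:
    """Hypothetical helper: return (repository id, model input) for one sentence.

    The same model serves both directions of a pair; the prefix token is what
    tells it which language to produce.
    """
    for lang in (source_lang, target_lang):
        if lang not in TARGET_TOKENS:
            raise ValueError(f"unsupported language code: {lang!r}")
    if source_lang == target_lang:
        raise ValueError("source and target languages must differ")
    first, second = sorted([source_lang, target_lang])
    repo_id = f"allegro/BiDi-{first}-{second}"  # assumed naming scheme
    return repo_id, f"{TARGET_TOKENS[target_lang]} {text}"


print(build_request("Dobrý den.", "ces", "pol"))
# ('allegro/BiDi-ces-pol', '>>pol<< Dobrý den.')
```

Both directions of a pair resolve to the same repository. Only the prefix token changes.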

### Bi-Di models available

We provide 10 ___BiDi___ models, covering all 20 translation directions between the 5 supported languages.

| **Bi-Di model** | **Languages supported** | **HF repository** |

|-----------------|-------------------------|---------------------------------------------------------------------|

| BiDi-ces-eng | Czech ↔ English | [allegro/BiDi-ces-eng](https://huggingface.co/allegro/bidi-ces-eng) |

| BiDi-ces-pol | Czech ↔ Polish | [allegro/BiDi-ces-pol](https://huggingface.co/allegro/bidi-ces-pol) |

| BiDi-ces-slk | Czech ↔ Slovak | [allegro/BiDi-ces-slk](https://huggingface.co/allegro/bidi-ces-slk) |

| BiDi-ces-slv | Czech ↔ Slovene | [allegro/BiDi-ces-slv](https://huggingface.co/allegro/bidi-ces-slv) |

| BiDi-eng-pol | English ↔ Polish | [allegro/BiDi-eng-pol](https://huggingface.co/allegro/bidi-eng-pol) |

| BiDi-eng-slk | English ↔ Slovak | [allegro/BiDi-eng-slk](https://huggingface.co/allegro/bidi-eng-slk) |

| BiDi-eng-slv | English ↔ Slovene | [allegro/BiDi-eng-slv](https://huggingface.co/allegro/bidi-eng-slv) |

| BiDi-pol-slk | Polish ↔ Slovak | [allegro/BiDi-pol-slk](https://huggingface.co/allegro/bidi-pol-slk) |

| BiDi-pol-slv | Polish ↔ Slovene | [allegro/BiDi-pol-slv](https://huggingface.co/allegro/bidi-pol-slv) |

| BiDi-slk-slv | Slovak ↔ Slovene | [allegro/BiDi-slk-slv](https://huggingface.co/allegro/bidi-slk-slv) |
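The counts follow from pairing the five languages. Five languages give C(5, 2) = 10 unordered pairs, so there are 10 models. Each model covers both directions of its pair, which gives 20 directions in total. The short enumeration below reproduces the model list in the same order as the table.

```python
from itertools import combinations, permutations

LANGUAGES = ["ces", "eng", "pol", "slk", "slv"]

# One BiDi model per unordered language pair, named in alphabetical order.
MODELS = [f"BiDi-{a}-{b}" for a, b in combinations(sorted(LANGUAGES), 2)]

# Each model covers both directions of its pair.
DIRECTIONS = list(permutations(LANGUAGES, 2))

print(len(MODELS), len(DIRECTIONS))  # 10 20
```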

## Use case quickstart

Example code snippet for using the model. Due to a bug, `MarianMTModel` must be used explicitly.

Remember to adjust the source and target languages to your use case.

```python
from transformers import AutoTokenizer, MarianMTModel

# ISO 639-3 codes; adjust to your use case.
source_lang = "pol"
target_lang = "ces"

# Repository names list the two languages in alphabetical order.
first_lang, second_lang = sorted([source_lang, target_lang])
model_name = f"allegro/BiDi-{first_lang}-{second_lang}"

tokenizer = AutoTokenizer.from_pretrained(model_name)
# Load MarianMTModel explicitly (see the note above).
model = MarianMTModel.from_pretrained(model_name)

# Every input must start with the target-language token, e.g. ">>ces<<".
text = f">>{target_lang}<< " + "Allegro to internetowa platforma e-commerce, na której swoje produkty sprzedają średnie i małe firmy, jak również duże marki."
batch_to_translate = [text]

inputs = tokenizer(batch_to_translate, return_tensors="pt", padding=True)
translations = model.generate(**inputs)
decoded_translation = tokenizer.batch_decode(translations, skip_special_tokens=True, clean_up_tokenization_spaces=True)[0]
print(decoded_translation)
```

Generated Czech output:

> Allegro je online e-commerce platforma, na které své výrobky prodávají střední a malé firmy, stejně jako velké značky.

## Training

The [SentencePiece](https://github.com/google/sentencepiece) tokenizer has a total vocabulary size of 32k (16k per language). The tokenizer was trained on a randomly sampled portion of the training corpus.

We trained the models with the [MarianNMT](https://marian-nmt.github.io/) framework.

Base Marian configuration: [transformer-big](https://github.com/marian-nmt/marian-dev/blob/master/src/common/aliases.cpp#L113).
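As a rough sanity check on the "209M" total, the sketch below counts the parameters of a post-norm encoder-decoder Transformer with the dimensions listed in the hyperparameter table below. These are 6 + 6 layers, model dimension 1024, feed-forward dimension 4096 and a 32k vocabulary. The sketch makes three assumptions that the card does not state. First, a single embedding matrix is shared by the encoder input, the decoder input and the output projection. Second, "32k" means 32,000 entries. Third, positional encodings are sinusoidal, so they add no parameters. Small extras such as an output bias vector are ignored.

```python
# Approximate parameter count for a Transformer encoder-decoder.
# ASSUMPTIONS: tied embeddings counted once, VOCAB_SIZE = 32,000,
# sinusoidal positions (no parameters), no final layer norm.

D_MODEL = 1024
D_FF = 4096
ENCODER_LAYERS = 6
DECODER_LAYERS = 6
VOCAB_SIZE = 32_000


def attention_params(d: int) -> int:
    # Q, K, V and output projections: four d x d matrices with biases.
    # The 16 heads split d between them but do not change the count.
    return 4 * (d * d + d)


def feed_forward_params(d: int, ff: int) -> int:
    # Two linear layers, d -> ff and ff -> d, each with a bias.
    return (d * ff + ff) + (ff * d + d)


def layer_norm_params(d: int) -> int:
    # Scale and shift vectors.
    return 2 * d


def encoder_layer_params(d: int, ff: int) -> int:
    # Self-attention and feed-forward sublayers, one layer norm each.
    return attention_params(d) + feed_forward_params(d, ff) + 2 * layer_norm_params(d)


def decoder_layer_params(d: int, ff: int) -> int:
    # Self-attention, cross-attention and feed-forward, one layer norm each.
    return 2 * attention_params(d) + feed_forward_params(d, ff) + 3 * layer_norm_params(d)


def total_params() -> int:
    embeddings = VOCAB_SIZE * D_MODEL  # shared matrix, counted once
    encoder = ENCODER_LAYERS * encoder_layer_params(D_MODEL, D_FF)
    decoder = DECODER_LAYERS * decoder_layer_params(D_MODEL, D_FF)
    return embeddings + encoder + decoder


print(f"{total_params():,}")  # 209,125,376
```

The result, about 209.1M, is consistent with the reported size. The transformer layers account for roughly 176M parameters and the shared embedding matrix for about 33M.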

All training parameters are listed in the table below.

### Training hyperparameters

| **Hyperparameter** | **Value** |

|----------------------------|------------------------------------------------------------------------------------------------------------|

| Total Parameter Size | 209M |

| Vocab Size | 32k |

| Base Parameters | [Marian transformer-big](https://github.com/marian-nmt/marian-dev/blob/master/src/common/aliases.cpp#L113) |

| Number of Encoding Layers | 6 |

| Number of Decoding Layers | 6 |

| Model Dimension | 1024 |

| FF Dimension | 4096 |

| Heads | 16 |

| Dropout | 0.1 |

| Batch Size | mini batch fit to VRAM |

| Training Accelerators | 4x A100 40GB |

| Max Length | 100 tokens |

| Optimizer | Adam |

| Warmup steps | 8000 |

| Context | Sentence-level MT |

| Languages Supported | See [Bi-Di models available](#bi-di-models-available) |

| Precision | float16 |

| Validation Freq | 3000 steps |

| Stop Metric | ChrF |

| Stop Criterion | 20 Validation steps |
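The last three rows of the table describe early stopping. This sketch assumes the usual MarianNMT meaning: validation runs every 3000 updates, and training stops once ChrF, where higher is better, has failed to improve for 20 consecutive validations. The function name and the ChrF curve are hypothetical. The sketch illustrates the rule and does not reproduce Marian's code.

```python
from typing import Optional, Sequence

VALID_FREQ = 3000  # updates between validations (from the table)
PATIENCE = 20      # validations without improvement (assumed meaning of the stop criterion)


def stopping_validation(scores: Sequence[float], patience: int = PATIENCE) -> Optional[int]:
    """Return the 1-based validation index at which training would stop,
    or None if patience is never exhausted. Higher scores are better."""
    best = float("-inf")
    stalls = 0
    for index, score in enumerate(scores, start=1):
        if score > best:
            best = score
            stalls = 0
        else:
            stalls += 1
            if stalls >= patience:
                return index
    return None


# Hypothetical ChrF curve: improves for 5 validations, then plateaus.
chrf = [50.0, 52.0, 53.5, 54.1, 54.3] + [54.2] * 25
stop_at = stopping_validation(chrf)
print(stop_at, stop_at * VALID_FREQ)  # 25 75000
```

With these settings, a model keeps training for at least 60,000 updates (20 × 3000) after its best checkpoint before it stops.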

## Training corpora

The main research question was: "How does adding additional, related languages impact the quality of the model?" We explored this question within the Slavic language family.

___BiDi___ models serve as our baseline before we expand the data regime with higher-level multilinguality.

Datasets were downloaded with the [MT-Data](https://pypi.org/project/mtdata/0.2.10/) library.

The total number of training examples after filtering and deduplication varies by language pair; see the table below.

| **Language pair** | **Number of training examples** |

|-------------------|--------------------------------:|

| Czech ↔ Polish | 63M |

| Czech ↔ Slovak | 30M |

| Czech ↔ Slovene | 25M |

| Polish ↔ Slovak | 26M |

| Polish ↔ Slovene | 23M |

| Slovak ↔ Slovene | 18M |

| ---------------- | ------------------------------- |

| Czech ↔ English | 151M |

| English ↔ Polish | 150M |

| English ↔ Slovak | 52M |

| English ↔ Slovene | 40M |

The datasets used are listed below (each applies only to the directions it covers):

| **Corpus** |

|----------------------|

| paracrawl |

| opensubtitles |

| multiparacrawl |

| dgt |

| elrc |

| xlent |

| wikititles |

| wmt |

| wikimatrix |

| dcep |

| ELRC |

| tildemodel |

| europarl |

| eesc |

| eubookshop |

| emea |

| jrc_acquis |

| ema |

| qed |

| elitr_eca |

| EU-dcep |

| rapid |

| ecb |

| kde4 |

| news_commentary |

| kde |

| bible_uedin |

| europat |

| elra |

| wikipedia |

| wikimedia |

| tatoeba |

| globalvoices |

| euconst |

| ubuntu |

| php |

| ecdc |

| eac |

| eac_reference |

| gnome |

| EU-eac |

| books |

| EU-ecdc |

| newsdev |

| khresmoi_summary |

| czechtourism |

| khresmoi_summary_dev |

| worldbank |

## Evaluation

The models were evaluated on the [Flores200](https://huggingface.co/datasets/facebook/flores) dataset.

The table below compares the performance of open-source models and all applicable models from our collection.

Metric: Unbabel/wmt22-comet-da.

| **Direction** | **CES → ENG** | **CES → POL** | **CES → SLK** | **CES → SLV** | **ENG → CES** | **ENG → POL** | **ENG → SLK** | **ENG → SLV** | **POL → CES** | **POL → ENG** | **POL → SLK** | **POL → SLV** | **SLK → CES** | **SLK → ENG** | **SLK → POL** | **SLK → SLV** | **SLV → CES** | **SLV → ENG** | **SLV → POL** | **SLV → SLK** |

|----------------------------------------------------|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|

| **M2M-100** | 87.0 | 89.0 | 92.1 | 89.7 | 88.6 | 86.4 | 88.4 | 87.3 | 89.6 | 84.6 | 89.4 | 88.4 | 92.7 | 86.8 | 89.1 | 89.6 | 90.3 | 86.4 | 88.7 | 90.1 |

| **NLLB-200** | 88.1 | 88.9 | 91.2 | 88.6 | 90.4 | __88.5__ | 90.1 | 88.8 | 89.4 | __85.8__ | 88.9 | 87.7 | 91.8 | 88.2 | 88.9 | 88.8 | 90.0 | __87.5__ | 88.6 | 89.4 |

| **Seamless-M4T** | 87.5 | 80.9 | 90.8 | 82.0 | __90.7__ | __88.5__ | __90.6__ | __89.6__ | 79.6 | 85.4 | 80.0 | 76.4 | 91.5 | 87.2 | 81.2 | 82.9 | 80.9 | 87.3 | 76.7 | 81.0 |

| **OPUS-MT Sla-Sla** | __88.2__ | 82.8 | - | 83.4 | 89.1 | 85.6 | - | 84.5 | 82.9 | 82.2 | - | 81.2 | - | - | - | - | 83.5 | 84.1 | 80.8 | - |

| **OPUS-MT SK-EN** | - | - | - | - | - | - | 89.5 | - | - | - | - | - | - | __88.4__ | - | - | - | - | - | - |

| _Our contributions:_ | | | | | | | | | | | | | | | | | | | | |

| **BiDi Models**<span style="color:green;">*</span> | 87.5 | 89.4 | 92.4 | 89.8 | 87.8 | 86.2 | 87.2 | 86.6 | 90.0 | 85.0 | 89.1 | 88.4 | 92.9 | 87.3 | 88.8 | 89.4 | 90.0 | 86.9 | 88.1 | 89.1 |

| **P4-pol**<span style="color:red;">◊</span> | - | 89.6 | 90.8 | 88.7 | - | - | - | - | 90.2 | - | 89.8 | 88.7 | 91.0 | - | 89.3 | 88.4 | 89.3 | - | 88.7 | 88.5 |

| **P5-eng**<span style="color:red;">◊</span> | 88.0 | 89.0 | 90.7 | 89.0 | 88.8 | 87.3 | 88.4 | 87.5 | 89.0 | 85.7 | 88.5 | 87.8 | 91.0 | 88.2 | 88.6 | 88.5 | 89.6 | 87.2 | 88.4 | 88.9 |

| **P5-ces**<span style="color:red;">◊</span> | 87.9 | 89.6 | __92.5__ | 89.9 | 88.4 | 85.0 | 87.9 | 85.9 | 90.3 | 84.5 | 89.5 | 88.0 | __93.0__ | 87.8 | 89.4 | 89.8 | 90.3 | 85.7 | 87.9 | 89.8 |

| **MultiSlav-4slav** | - | 89.7 | __92.5__ | 90.0 | - | - | - | - | 90.2 | - | 89.6 | 88.7 | 92.9 | - | 89.4 | 90.1 | __90.6__ | - | 88.9 | __90.2__ |

| **MultiSlav-5lang** | 87.8 | __89.8__ | __92.5__ | __90.1__ | 88.9 | 86.9 | 88.0 | 87.3 | __90.4__ | 85.4 | 89.8 | __88.9__ | 92.9 | 87.8 | __89.6__ | __90.2__ | __90.6__ | 87.0 | __89.2__ | __90.2__ |

<span style="color:red;">◊</span> system of 2 models *Many2XXX* and *XXX2Many*, see [P5-ces2many](https://huggingface.co/allegro/p5-ces2many)

<span style="color:green;">*</span> results combined across all bi-directional models; each value comes from the applicable model
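The comparison can be summarized in a few lines. The sketch below copies the **BiDi Models** and **MultiSlav-5lang** rows from the table and generates direction labels in the same column order. It then computes an unweighted mean COMET score over the 20 directions and lists where MultiSlav-5lang scores higher or ties. The mean is our own summary of the table and is not a metric reported by the authors.

```python
from itertools import permutations
from statistics import mean

# Column order of the table: every ordered pair of the sorted language codes.
DIRECTIONS = [f"{s} → {t}" for s, t in permutations(["CES", "ENG", "POL", "SLK", "SLV"], 2)]

# COMET scores copied from the table rows.
BIDI = [87.5, 89.4, 92.4, 89.8, 87.8, 86.2, 87.2, 86.6, 90.0, 85.0,
        89.1, 88.4, 92.9, 87.3, 88.8, 89.4, 90.0, 86.9, 88.1, 89.1]
MULTISLAV_5LANG = [87.8, 89.8, 92.5, 90.1, 88.9, 86.9, 88.0, 87.3, 90.4, 85.4,
                   89.8, 88.9, 92.9, 87.8, 89.6, 90.2, 90.6, 87.0, 89.2, 90.2]

pairs = list(zip(DIRECTIONS, BIDI, MULTISLAV_5LANG))
higher = [d for d, b, m in pairs if m > b]
ties = [d for d, b, m in pairs if m == b]

print(f"mean BiDi: {mean(BIDI):.3f}, mean MultiSlav-5lang: {mean(MULTISLAV_5LANG):.3f}")
print(f"MultiSlav-5lang higher in {len(higher)} directions; ties: {ties}")
```

In this table, MultiSlav-5lang scores higher than the BiDi baselines in every direction except SLK → CES, where the two tie.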

## Limitations and Biases

We did not evaluate the inherent bias contained in the training datasets. We advise validating our models for bias in your target domain. This may be especially problematic when translating from English into Slavic languages, which often require gender to be marked explicitly; the models might hallucinate gender based on biases present in the training data.

## License

The model is licensed under CC BY 4.0, which allows for commercial use.

## Citation

TO BE UPDATED SOON 🤗

## Contact Options

Authors:

- MLR @ Allegro: [Artur Kot](https://linkedin.com/in/arturkot), [Mikołaj Koszowski](https://linkedin.com/in/mkoszowski), [Wojciech Chojnowski](https://linkedin.com/in/wojciech-chojnowski-744702348), [Mieszko Rutkowski](https://linkedin.com/in/mieszko-rutkowski)

- Laniqo.com: [Artur Nowakowski](https://linkedin.com/in/artur-nowakowski-mt), [Kamil Guttmann](https://linkedin.com/in/kamil-guttmann), [Mikołaj Pokrywka](https://linkedin.com/in/mikolaj-pokrywka)

Please don't hesitate to contact the authors if you have any questions or suggestions:

- e-mail: [email protected] or [email protected]

- LinkedIn: [Artur Kot](https://linkedin.com/in/arturkot) or [Mikołaj Koszowski](https://linkedin.com/in/mkoszowski)

| null |

Non_BioNLP

|

# MultiSlav BiDi Models

<p align="center">

<a href="https://ml.allegro.tech/"><img src="allegro-title.svg" alt="MLR @ Allegro.com"></a>

</p>

## Multilingual BiDi MT Models

___BiDi___ is a collection of vanilla encoder-decoder Transformer models trained on sentence-level machine translation.

Each model supports bi-directional translation.

___BiDi___ models are part of the [___MultiSlav___ collection](https://huggingface.co/collections/allegro/multislav-6793d6b6419e5963e759a683). More information will be available soon in our upcoming MultiSlav paper.

Experiments were conducted as part of a research project by the [Machine Learning Research](https://ml.allegro.tech/) lab at [Allegro.com](https://ml.allegro.tech/).

Big thanks to [laniqo.com](https://laniqo.com) for their cooperation in the research.

<p align="center">

<img src="bi-di.svg">

</p>

The graphic above shows an example BiDi model, [BiDi-ces-pol](https://huggingface.co/allegro/bidi-ces-pol), translating from Polish to Czech.

___BiDi-ces-pol___ is a bi-directional model that supports translation both __from Czech to Polish__ and __from Polish to Czech__.

### Supported languages

To use a ___BiDi___ model, you must specify the target language of the translation.

Target-language tokens are 3-letter ISO 639-3 language codes wrapped in the format `>>xxx<<`.

All accepted target languages and their respective tokens are listed below.

Note that each model supports only two directions.

Each token was added as a special token to the SentencePiece tokenizer.

| **Target Language** | **First token** |

|---------------------|-----------------|

| Czech | `>>ces<<` |

| English | `>>eng<<` |

| Polish | `>>pol<<` |

| Slovak | `>>slk<<` |

| Slovene | `>>slv<<` |

### Bi-Di models available

We provide 10 ___BiDi___ models, covering all 20 translation directions between the 5 supported languages.

| **Bi-Di model** | **Languages supported** | **HF repository** |

|-----------------|-------------------------|---------------------------------------------------------------------|

| BiDi-ces-eng | Czech ↔ English | [allegro/BiDi-ces-eng](https://huggingface.co/allegro/bidi-ces-eng) |

| BiDi-ces-pol | Czech ↔ Polish | [allegro/BiDi-ces-pol](https://huggingface.co/allegro/bidi-ces-pol) |

| BiDi-ces-slk | Czech ↔ Slovak | [allegro/BiDi-ces-slk](https://huggingface.co/allegro/bidi-ces-slk) |

| BiDi-ces-slv | Czech ↔ Slovene | [allegro/BiDi-ces-slv](https://huggingface.co/allegro/bidi-ces-slv) |

| BiDi-eng-pol | English ↔ Polish | [allegro/BiDi-eng-pol](https://huggingface.co/allegro/bidi-eng-pol) |

| BiDi-eng-slk | English ↔ Slovak | [allegro/BiDi-eng-slk](https://huggingface.co/allegro/bidi-eng-slk) |

| BiDi-eng-slv | English ↔ Slovene | [allegro/BiDi-eng-slv](https://huggingface.co/allegro/bidi-eng-slv) |

| BiDi-pol-slk | Polish ↔ Slovak | [allegro/BiDi-pol-slk](https://huggingface.co/allegro/bidi-pol-slk) |

| BiDi-pol-slv | Polish ↔ Slovene | [allegro/BiDi-pol-slv](https://huggingface.co/allegro/bidi-pol-slv) |

| BiDi-slk-slv | Slovak ↔ Slovene | [allegro/BiDi-slk-slv](https://huggingface.co/allegro/bidi-slk-slv) |

## Use case quickstart

Example code snippet for using the model. Due to a bug, `MarianMTModel` must be used explicitly.

Remember to adjust the source and target languages to your use case.

```python
from transformers import AutoTokenizer, MarianMTModel

# ISO 639-3 codes; adjust to your use case.
source_lang = "pol"
target_lang = "ces"

# Repository names list the two languages in alphabetical order.
first_lang, second_lang = sorted([source_lang, target_lang])
model_name = f"allegro/BiDi-{first_lang}-{second_lang}"

tokenizer = AutoTokenizer.from_pretrained(model_name)
# Load MarianMTModel explicitly (see the note above).
model = MarianMTModel.from_pretrained(model_name)

# Every input must start with the target-language token, e.g. ">>ces<<".
text = f">>{target_lang}<< " + "Allegro to internetowa platforma e-commerce, na której swoje produkty sprzedają średnie i małe firmy, jak również duże marki."
batch_to_translate = [text]

inputs = tokenizer(batch_to_translate, return_tensors="pt", padding=True)
translations = model.generate(**inputs)
decoded_translation = tokenizer.batch_decode(translations, skip_special_tokens=True, clean_up_tokenization_spaces=True)[0]
print(decoded_translation)
```

Generated Czech output:

> Allegro je online e-commerce platforma, na které své výrobky prodávají střední a malé firmy, stejně jako velké značky.

## Training

The [SentencePiece](https://github.com/google/sentencepiece) tokenizer has a total vocabulary size of 32k (16k per language). The tokenizer was trained on a randomly sampled portion of the training corpus.

We trained the models with the [MarianNMT](https://marian-nmt.github.io/) framework.

Base Marian configuration: [transformer-big](https://github.com/marian-nmt/marian-dev/blob/master/src/common/aliases.cpp#L113).

All training parameters are listed in the table below.

### Training hyperparameters

| **Hyperparameter** | **Value** |

|----------------------------|------------------------------------------------------------------------------------------------------------|

| Total Parameter Size | 209M |

| Vocab Size | 32k |

| Base Parameters | [Marian transformer-big](https://github.com/marian-nmt/marian-dev/blob/master/src/common/aliases.cpp#L113) |

| Number of Encoding Layers | 6 |

| Number of Decoding Layers | 6 |

| Model Dimension | 1024 |

| FF Dimension | 4096 |

| Heads | 16 |

| Dropout | 0.1 |

| Batch Size | mini batch fit to VRAM |

| Training Accelerators | 4x A100 40GB |

| Max Length | 100 tokens |

| Optimizer | Adam |

| Warmup steps | 8000 |

| Context | Sentence-level MT |

| Languages Supported | See [Bi-Di models available](#bi-di-models-available) |

| Precision | float16 |

| Validation Freq | 3000 steps |

| Stop Metric | ChrF |

| Stop Criterion | 20 Validation steps |

## Training corpora

The main research question was: "How does adding additional, related languages impact the quality of the model?" We explored this question within the Slavic language family.

___BiDi___ models serve as our baseline before we expand the data regime with higher-level multilinguality.

Datasets were downloaded with the [MT-Data](https://pypi.org/project/mtdata/0.2.10/) library.

The total number of training examples after filtering and deduplication varies by language pair; see the table below.

| **Language pair** | **Number of training examples** |

|-------------------|--------------------------------:|

| Czech ↔ Polish | 63M |

| Czech ↔ Slovak | 30M |

| Czech ↔ Slovene | 25M |

| Polish ↔ Slovak | 26M |

| Polish ↔ Slovene | 23M |

| Slovak ↔ Slovene | 18M |

| ---------------- | ------------------------------- |

| Czech ↔ English | 151M |

| English ↔ Polish | 150M |

| English ↔ Slovak | 52M |

| English ↔ Slovene | 40M |

The datasets used are listed below (each applies only to the directions it covers):

| **Corpus** |

|----------------------|

| paracrawl |

| opensubtitles |

| multiparacrawl |

| dgt |

| elrc |

| xlent |

| wikititles |

| wmt |

| wikimatrix |

| dcep |

| ELRC |

| tildemodel |

| europarl |

| eesc |

| eubookshop |

| emea |

| jrc_acquis |

| ema |

| qed |

| elitr_eca |

| EU-dcep |

| rapid |

| ecb |

| kde4 |

| news_commentary |

| kde |

| bible_uedin |

| europat |

| elra |

| wikipedia |

| wikimedia |

| tatoeba |

| globalvoices |

| euconst |

| ubuntu |

| php |

| ecdc |

| eac |

| eac_reference |

| gnome |

| EU-eac |

| books |

| EU-ecdc |

| newsdev |

| khresmoi_summary |

| czechtourism |

| khresmoi_summary_dev |

| worldbank |

## Evaluation

The models were evaluated on the [Flores200](https://huggingface.co/datasets/facebook/flores) dataset.

The table below compares the performance of open-source models and all applicable models from our collection.

Metric: Unbabel/wmt22-comet-da.

| **Direction** | **CES → ENG** | **CES → POL** | **CES → SLK** | **CES → SLV** | **ENG → CES** | **ENG → POL** | **ENG → SLK** | **ENG → SLV** | **POL → CES** | **POL → ENG** | **POL → SLK** | **POL → SLV** | **SLK → CES** | **SLK → ENG** | **SLK → POL** | **SLK → SLV** | **SLV → CES** | **SLV → ENG** | **SLV → POL** | **SLV → SLK** |

|----------------------------------------------------|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|--------------:|

| **M2M-100** | 87.0 | 89.0 | 92.1 | 89.7 | 88.6 | 86.4 | 88.4 | 87.3 | 89.6 | 84.6 | 89.4 | 88.4 | 92.7 | 86.8 | 89.1 | 89.6 | 90.3 | 86.4 | 88.7 | 90.1 |

| **NLLB-200** | 88.1 | 88.9 | 91.2 | 88.6 | 90.4 | __88.5__ | 90.1 | 88.8 | 89.4 | __85.8__ | 88.9 | 87.7 | 91.8 | 88.2 | 88.9 | 88.8 | 90.0 | __87.5__ | 88.6 | 89.4 |

| **Seamless-M4T** | 87.5 | 80.9 | 90.8 | 82.0 | __90.7__ | __88.5__ | __90.6__ | __89.6__ | 79.6 | 85.4 | 80.0 | 76.4 | 91.5 | 87.2 | 81.2 | 82.9 | 80.9 | 87.3 | 76.7 | 81.0 |

| **OPUS-MT Sla-Sla** | __88.2__ | 82.8 | - | 83.4 | 89.1 | 85.6 | - | 84.5 | 82.9 | 82.2 | - | 81.2 | - | - | - | - | 83.5 | 84.1 | 80.8 | - |

| **OPUS-MT SK-EN** | - | - | - | - | - | - | 89.5 | - | - | - | - | - | - | __88.4__ | - | - | - | - | - | - |

| _Our contributions:_ | | | | | | | | | | | | | | | | | | | | |

| **BiDi Models**<span style="color:green;">*</span> | 87.5 | 89.4 | 92.4 | 89.8 | 87.8 | 86.2 | 87.2 | 86.6 | 90.0 | 85.0 | 89.1 | 88.4 | 92.9 | 87.3 | 88.8 | 89.4 | 90.0 | 86.9 | 88.1 | 89.1 |

| **P4-pol**<span style="color:red;">◊</span> | - | 89.6 | 90.8 | 88.7 | - | - | - | - | 90.2 | - | 89.8 | 88.7 | 91.0 | - | 89.3 | 88.4 | 89.3 | - | 88.7 | 88.5 |

| **P5-eng**<span style="color:red;">◊</span> | 88.0 | 89.0 | 90.7 | 89.0 | 88.8 | 87.3 | 88.4 | 87.5 | 89.0 | 85.7 | 88.5 | 87.8 | 91.0 | 88.2 | 88.6 | 88.5 | 89.6 | 87.2 | 88.4 | 88.9 |

| **P5-ces**<span style="color:red;">◊</span> | 87.9 | 89.6 | __92.5__ | 89.9 | 88.4 | 85.0 | 87.9 | 85.9 | 90.3 | 84.5 | 89.5 | 88.0 | __93.0__ | 87.8 | 89.4 | 89.8 | 90.3 | 85.7 | 87.9 | 89.8 |

| **MultiSlav-4slav** | - | 89.7 | __92.5__ | 90.0 | - | - | - | - | 90.2 | - | 89.6 | 88.7 | 92.9 | - | 89.4 | 90.1 | __90.6__ | - | 88.9 | __90.2__ |

| **MultiSlav-5lang** | 87.8 | __89.8__ | __92.5__ | __90.1__ | 88.9 | 86.9 | 88.0 | 87.3 | __90.4__ | 85.4 | 89.8 | __88.9__ | 92.9 | 87.8 | __89.6__ | __90.2__ | __90.6__ | 87.0 | __89.2__ | __90.2__ |

<span style="color:red;">◊</span> system of 2 models *Many2XXX* and *XXX2Many*, see [P5-ces2many](https://huggingface.co/allegro/p5-ces2many)

<span style="color:green;">*</span> results combined across all bi-directional models; each value comes from the applicable model

## Limitations and Biases

We did not evaluate the inherent bias contained in the training datasets. We advise validating our models for bias in your target domain. This may be especially problematic when translating from English into Slavic languages, which often require gender to be marked explicitly; the models might hallucinate gender based on biases present in the training data.

## License

The model is licensed under CC BY 4.0, which allows for commercial use.

## Citation

TO BE UPDATED SOON 🤗

## Contact Options

Authors:

- MLR @ Allegro: [Artur Kot](https://linkedin.com/in/arturkot), [Mikołaj Koszowski](https://linkedin.com/in/mkoszowski), [Wojciech Chojnowski](https://linkedin.com/in/wojciech-chojnowski-744702348), [Mieszko Rutkowski](https://linkedin.com/in/mieszko-rutkowski)

- Laniqo.com: [Artur Nowakowski](https://linkedin.com/in/artur-nowakowski-mt), [Kamil Guttmann](https://linkedin.com/in/kamil-guttmann), [Mikołaj Pokrywka](https://linkedin.com/in/mikolaj-pokrywka)

Please don't hesitate to contact the authors if you have any questions or suggestions:

- e-mail: [email protected] or [email protected]

- LinkedIn: [Artur Kot](https://linkedin.com/in/arturkot) or [Mikołaj Koszowski](https://linkedin.com/in/mkoszowski)

|

{"language": ["cs", "pl", "sk", "sl", "en"], "library_name": "transformers", "license": "cc-by-4.0", "tags": ["translation", "mt", "marian", "pytorch", "sentence-piece", "multilingual", "allegro", "laniqo"]}

|

task

|

[

"TRANSLATION"

] | 45,797 |

Omerhan/checkpoint-14376-v5

|

Omerhan

|

sentence-similarity

|

[

"sentence-transformers",

"safetensors",

"xlm-roberta",

"sentence-similarity",

"feature-extraction",

"generated_from_trainer",

"dataset_size:920106",

"loss:MatryoshkaLoss",

"loss:MultipleNegativesRankingLoss",

"tr",

"arxiv:1908.10084",

"arxiv:2205.13147",

"arxiv:1705.00652",

"base_model:intfloat/multilingual-e5-large-instruct",

"base_model:finetune:intfloat/multilingual-e5-large-instruct",

"license:apache-2.0",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | 2025-01-02T04:20:16Z |

2025-01-02T04:21:28+00:00

| 8 | 0 |

---

base_model: intfloat/multilingual-e5-large-instruct

language:

- tr

library_name: sentence-transformers

license: apache-2.0

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer

- dataset_size:920106

- loss:MatryoshkaLoss

- loss:MultipleNegativesRankingLoss

widget:

- source_sentence: Fısıh için patates cipsi kosher

sentences:

- 'Geçen yıl 125.000 $ kazandıysanız ve 10.000 $ düşebiliyorsanız, vergilendirilebilir

geliriniz 115.000 $ ''a düşürülür. Ontario''da yaşadıysanız, indiriminiz sizi

sadece 4.000 $ ''ın üzerinde vergiden kurtarır. Öte yandan, 10.000 $''lık bir

vergi kredisi sadece 2,105 $ değerinde olurdu. Yukarıdaki, kesintilerin her zaman

vergi kredilerinden daha iyi olduğunu gösteriyor gibi görünüyor, ancak her zaman

değil: Bir vergi kredisi ve indirim, 35.000 $ vergi elde ederseniz aynı tasarrufla

sonuçlanacaktır.'

- Diğer şeker ikame edicilere göre daha az bir aftertate sahip olduğu iddia edilir

ve fırınlanmış ürünlerde ve yumuşak içeceklerde kullanıma uygundur. Ürün Kosher

- ve potansiyel olarak Hamursuz Bayramı için Kosher - ve yakın gelecekte birçok

üründe görünmesini bekleyebiliriz.Ayrıca hepimiz güçlü müshiller olduklarının

farkında olmalıyız!) Rosh Hashana yaklaşırken, belki de Shimshon'un benzetmesini

genişletebiliriz ve bir kullanım için bir tatlı olabilir.

- Hamursuz Bayramı için Kaşer işaretli patates cipsi bu sorunların hiçbirisi olmadığı

için sertifikalandırılmıştır...Köşe yağında kızartılmış basit patates, Hamursuz

Bayramı için Kaşer olmayan hiçbir şey yapmayan makinelerde işlenir.Fısıh Bayramı

için Kaşer işaretli patates cipsleri bu sorunlardan hiçbirine sahip olmadığı için

sertifikalandırılmıştır...Köşe yağında kızartılmış basit patates, Hamursuz Bayramı

için Kaşer olmayan makinelerde işlenmiştir.

- source_sentence: Kim söyledi mona lisa gülümsemesini kaybetti

sentences:

- Mona Lisa Lost Her Smile sözleri ve akorları sadece kişisel kullanımınız için

tasarlanmıştır, gerçekten David Allan Coe tarafından kaydedilen güzel bir country

şarkısıdır.

- 'Arama Ara: Rose Müzik merkezi, Huber Heights, OH''da bulunan ve Interstate 70''in

hemen dışında yer alan tamamen kapalı bir açık hava amfitiyatrosudur. Amfitiyatro,

balkon koltuklarının ön sıra koltukları kadar iyi olduğu 4200 kişilik bir oturma

kapasiteli mekandır. Bu tesiste nerede oturursanız oturun, bir fan olarak deneyiminizin

avantajları vardır.'

- Ortaya çıkan görüntüler, yüzlerce yıllık vernik ve diğer değişiklikleri ortadan

kaldırıyor, sanatçının boyalı figürü nasıl hayata geçirdiğine ve da Vinci ve çağdaşlarına

nasıl göründüğüne ışık tutuyor. Mona Lisa'nın yüzü biraz daha geniş görünüyor

ve gülümseme farklı ve gözler farklı, dedi Cotte.

- source_sentence: kovanlar bir tür gıda zehirlenmesidir

sentences:

- Bazen gıda zehirlenmesinden hasta hissetmek, kötü yiyecekleri yedikten sonraki

saatler içinde ortaya çıkar. Diğer zamanlarda, biri birkaç gün sonraya kadar hasta

hissetmeyebilir. Hafif gıda zehirlenmesi vakalarında, çok uzun süre hasta hissetmeyeceksiniz

ve yakında tekrar iyi hissedeceksiniz.

- Bebeklerde botulizm. genellikle kabızlığa neden olur; yetişkinlerde, ya da neden

olabilir. Kabızlık veya ishal. Gıda alerjileri gıda zehirlenmesi ile karıştırılabilir.

En ciddi alerjik reaksiyon türleri anidir. kaşıntı, kovanlar, nefes alma zorluğu

ve düşük kan pre-. tabi. Buna anafilaksi veya alerjik şok denir.

- CloseHandle. CloseHandle işlevi açık bir nesne kulpunu kapatır. BOOL CloseHandle(

Handle hObject // close to close to close ; Parametreler hObject Handle to a open

object. Return Values. Fonksiyon başarılı olursa, dönüş değeri sıfırdır. İşlev

başarısız olursa, dönüş değeri sıfırdır. Genişletilmiş hata bilgisi almak için

GetLastError. Remarks'u arayın.

- source_sentence: Hint Müslüman erkek çocuk isimleri ile anlam

sentences:

- Hayır, hamileyseniz pişmemiş pepperoni yemek güvenli değildir. Ham gıda, listeria

olarak adlandırılan zararlı bakteriler içerir. Listeria bakterileri, hamile kadınlarda

beyin enfeksiyonuna ve hatta ölüme yol açabilecek listeriosis'e neden olabilir.

- Bir erkek ya da kız için güzel bir isme ihtiyacınız olsun, size dünya çapında

popüler isimlerin büyük bir koleksiyonunu veriyoruz. İsteğinize bağlı olarak bebeğiniz

için bir Hıristiyan adı, bir Hindu adı veya bir Müslüman adı seçebilirsiniz. Bir

erkek ya da kız için güzel bir isme ihtiyacınız varsa, size dünya çapında popüler

isimlerin büyük bir koleksiyonunu veriyoruz. İsteğinize bağlı olarak bebeğiniz

için bir Hıristiyan adı, bir Hindu adı veya bir Müslüman adı seçebilirsiniz.

- '- Modern bebek erkek isimleri. - Modern bebek kız isimleri. Hint Boy ve Hint

Kız İsimleri Komple Listesi. Anlamları ile bebek isimleri tam listemize göz atın,

sevimli bebek fotoğrafları, anketler, zodyak etkisi ve çok daha fazlası prensesiniz

veya rockstar.ee için en iyi ismi seçmek için bizim kapsamlı veritabanı popüler

Hindu isimleri, benzersiz Müslüman isimleri, en iyi on Sih isimleri, A''dan Z''ye

Hıristiyan isimleri, sevimli bebek Pencap isimleri, kısa ve tatlı Jain Gurati,

güzel'

- source_sentence: ret kuyruğu nedir

sentences:

- 'Bir kuyruktan gelen mesajlar ''ölü harfli'' olabilir; yani, aşağıdaki olaylardan

herhangi biri meydana geldiğinde başka bir değiş tokuşa yeniden yayınlanabilir:

1 İleti, requeue=false ile (basic.reject veya basic.nack) reddedilir, 2 İletinin

TTL''si sona erer; veya. 3 Kuyruk uzunluğu sınırı aşılır.'

- 2.'reddetmek'. Bir fikir veya inançla aynı fikirde değilseniz,'reddetmek' demiyorsunuz.

Bunu reddettiğinizi söylüyorsunuz. Bazı insanlar karma ekonomi fikrini reddediyor.

Ailemin dini inançlarını reddetmek benim için zordu. 3. İsim olarak kullanılır.

Reddetmek, attığınız şeylere atıfta bulunmak için kullanılan bir isimdir.

- Clark County, Amerika Birleşik Devletleri'nin Wisconsin eyaletinde yer alan bir

ilçedir. 2010 nüfus sayımına göre nüfusu 34.690'dır. İlçe merkezi Neillsville'dir.

---

# intfloat-fine-tuned

This is a [sentence-transformers](https://www.SBERT.net) model finetuned from [intfloat/multilingual-e5-large-instruct](https://huggingface.co/intfloat/multilingual-e5-large-instruct) on the json dataset. It maps sentences & paragraphs to a 1024-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.
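As a rough illustration of how the 1024-dimensional embeddings are used for semantic search, the sketch below ranks candidate passages by cosine similarity to a query. The short vectors are made-up stand-ins for real model outputs (which would come from the model's `encode` method in sentence-transformers), so only the ranking logic is meaningful here; `cosine_similarity` and `rank_passages` are hypothetical helper names, not part of any library.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def rank_passages(
    query_vec: Sequence[float], passage_vecs: Sequence[Sequence[float]]
) -> List[Tuple[int, float]]:
    """Return (passage index, score) pairs sorted from most to least similar."""
    scored = [(i, cosine_similarity(query_vec, p)) for i, p in enumerate(passage_vecs)]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy 4-dimensional vectors standing in for real 1024-dimensional embeddings.
query = [0.9, 0.1, 0.0, 0.2]
passages = [
    [0.1, 0.9, 0.3, 0.0],  # mostly unrelated to the query
    [0.8, 0.2, 0.1, 0.3],  # close paraphrase of the query
    [0.0, 0.0, 1.0, 0.0],  # orthogonal to the query
]

for index, score in rank_passages(query, passages):
    print(f"passage {index}: {score:.3f}")
```

In practice the query and passages would be encoded with the same model, and the highest-scoring passages returned as search results.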

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Base model:** [intfloat/multilingual-e5-large-instruct](https://huggingface.co/intfloat/multilingual-e5-large-instruct) <!-- at revision c9e87c786ffac96aeaeb42863276930883923ecb -->

- **Maximum Sequence Length:** 512 tokens

- **Output Dimensionality:** 1024 dimensions

- **Similarity Function:** Cosine Similarity

- **Training Dataset:** json

- **Language:** tr

- **License:** apache-2.0
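The `loss:MatryoshkaLoss` tag in the front matter suggests the model was trained so that leading prefixes of its 1024-dimensional embedding remain useful on their own. A minimal sketch of how such truncation is typically applied, assuming that holds for this checkpoint: keep the first *k* values, then re-normalize so that cosine similarity reduces to a plain dot product. The truncation sizes used during training are not listed here, so `SMALL_DIM = 256` is a hypothetical choice, and the sine-based vectors are fake placeholders for real model outputs.

```python
import math
from typing import List, Sequence


def truncate_and_normalize(embedding: Sequence[float], dim: int) -> List[float]:
    """Keep the first `dim` values and rescale the result to unit L2 norm."""
    if not 0 < dim <= len(embedding):
        raise ValueError("dim must be between 1 and the full embedding size")
    prefix = list(embedding[:dim])
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0:
        raise ValueError("truncated prefix is all zeros")
    return [x / norm for x in prefix]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """For unit-length vectors, the dot product equals cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


FULL_DIM = 1024  # output dimensionality stated in this card
SMALL_DIM = 256  # hypothetical target size, chosen only for illustration

# Deterministic fake embeddings standing in for real model outputs.
emb_a = [math.sin(i + 1) for i in range(FULL_DIM)]
emb_b = [math.sin(i + 1.1) for i in range(FULL_DIM)]

small_a = truncate_and_normalize(emb_a, SMALL_DIM)
small_b = truncate_and_normalize(emb_b, SMALL_DIM)
print(len(small_a), round(dot(small_a, small_b), 4))
```

Shorter vectors reduce storage and search cost; check retrieval quality at the chosen size on your own data before relying on it.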

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)