---
language:
- en
license: cc-by-nc-4.0
size_categories:
- 1K<n<10K
task_categories:
- image-text-to-text
tags:
- multimodality
- reasoning
configs:
- config_name: cube
  data_files:
  - split: train
    path: cube/train-*
- config_name: cube_easy
  data_files:
  - split: train
    path: cube_easy/train-*
- config_name: cube_perception
  data_files:
  - split: train
    path: cube_perception/train-*
- config_name: portal_binary
  data_files:
  - split: train
    path: portal_binary/train-*
- config_name: portal_blanks
  data_files:
  - split: train
    path: portal_blanks/train-*
dataset_info:
- config_name: cube
  features:
  - name: image
    dtype: image
  - name: face_arrays
    sequence:
      sequence:
        sequence: int64
  - name: question
    dtype: string
  - name: reference_solution
    dtype: string
  splits:
  - name: train
    num_bytes: 274367533
    num_examples: 1000
  download_size: 270371502
  dataset_size: 274367533
- config_name: cube_easy
  features:
  - name: question
    dtype: string
  - name: face_arrays
    sequence:
      sequence:
        sequence: int64
  - name: reference_solution
    dtype: string
  splits:
  - name: train
    num_bytes: 3940912
    num_examples: 1000
  download_size: 394861
  dataset_size: 3940912
- config_name: cube_perception
  features:
  - name: image
    dtype: image
  - name: question
    dtype: string
  - name: answer
    sequence:
      sequence: int64
  splits:
  - name: train
    num_bytes: 7924582
    num_examples: 200
  download_size: 7775024
  dataset_size: 7924582
- config_name: portal_binary
  features:
  - name: map_name
    dtype: string
  - name: images
    sequence: string
  - name: system_prompt
    dtype: string
  - name: user_prompt
    dtype: string
  - name: answer
    dtype: string
  splits:
  - name: train
    num_bytes: 2051248
    num_examples: 512
  download_size: 160438
  dataset_size: 2051248
- config_name: portal_blanks
  features:
  - name: map_name
    dtype: string
  - name: images
    sequence: string
  - name: system_prompt
    dtype: string
  - name: user_prompt
    dtype: string
  - name: answer
    dtype: string
  splits:
  - name: train
    num_bytes: 2468355
    num_examples: 512
  download_size: 305275
  dataset_size: 2468355
---

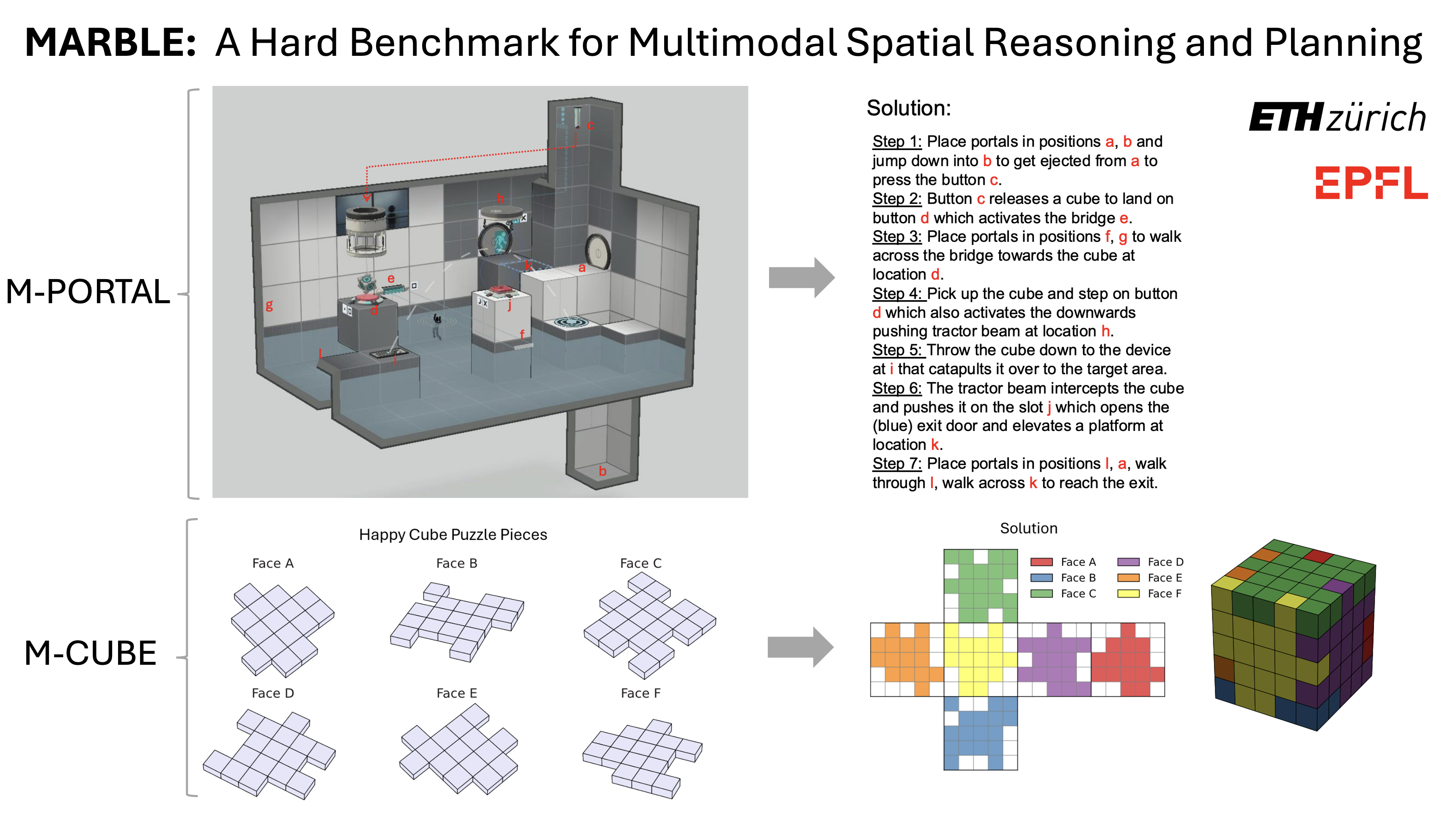

MARBLE: A Hard Benchmark for Multimodal Spatial Reasoning and Planning

🌐 Homepage | 📖 Paper | 🤗 Dataset | 🔗 Code

Introduction

MARBLE is a challenging multimodal reasoning benchmark designed to scrutinize the ability of multimodal language models (MLLMs) to reason carefully, step by step, through complex multimodal problems and environments. MARBLE is composed of two highly challenging tasks, M-Portal and M-Cube, that require crafting and understanding multistep plans subject to spatial, visual, and physical constraints. We find that current MLLMs perform poorly on MARBLE: all 12 advanced models we evaluate obtain near-random performance on M-Portal and 0% accuracy on M-Cube. Only on simplified subtasks do some models outperform the random baseline, indicating that complex reasoning is still a challenge for existing MLLMs. Moreover, we show that perception remains a bottleneck: MLLMs occasionally fail to extract information from the visual inputs. By shedding light on the limitations of MLLMs, we hope that MARBLE will spur the development of the next generation of models with the ability to reason and plan across many multimodal reasoning steps.

Dataset Details

The benchmark consists of two datasets, M-PORTAL and M-CUBE, each with two subtasks: portal_binary and portal_blanks for M-PORTAL, and cube and cube_easy for M-CUBE. In addition, M-CUBE includes a simple perception task, cube_perception.
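A minimal loading sketch, assuming the 🤗 datasets library. The Hub repository ID is not stated in this card, so repo_id below is a placeholder the caller must supply; the helper name load_marble_config and the MARBLE_CONFIGS grouping are hypothetical, derived only from the config names listed above.

```python
# Hypothetical grouping of the five configs declared in the YAML header.
MARBLE_CONFIGS = {
    "M-PORTAL": ["portal_binary", "portal_blanks"],
    "M-CUBE": ["cube", "cube_easy", "cube_perception"],
}

ALL_CONFIGS = {name for names in MARBLE_CONFIGS.values() for name in names}


def load_marble_config(repo_id: str, config_name: str):
    """Load the `train` split of one MARBLE config (hypothetical helper).

    repo_id is a placeholder: the Hub repository ID is not given in this card.
    Every config in the YAML header declares only a `train` split.
    """
    if config_name not in ALL_CONFIGS:
        raise ValueError(
            f"unknown config {config_name!r}; expected one of {sorted(ALL_CONFIGS)}"
        )
    # Imported lazily so the config table above is usable without `datasets`.
    from datasets import load_dataset

    return load_dataset(repo_id, config_name, split="train")
```

For example, load_marble_config("<org>/<dataset-name>", "cube_easy") should return the 1,000-row train split declared in the YAML header, once the placeholder is replaced by the real repository ID.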

M-PORTAL: multi-step spatial-planning puzzles modelled on levels from Portal 2.

- map_name: Portal 2 map name.
- images: images for each map (images.zip).
- system_prompt and user_prompt: instructions describing the problem.
- answer: the solution.
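The M-PORTAL fields can be assembled into a chat-style prompt. The sketch below is hypothetical: the role/content message format is a common convention rather than anything prescribed by the benchmark, and read_map_images assumes that each string in images is a member path inside images.zip.

```python
import zipfile
from typing import Dict, List


def build_messages(record: Dict) -> List[Dict[str, str]]:
    """Turn one portal_binary / portal_blanks row into chat-style messages."""
    return [
        {"role": "system", "content": record["system_prompt"]},
        {"role": "user", "content": record["user_prompt"]},
    ]


def read_map_images(record: Dict, zip_path: str) -> Dict[str, bytes]:
    """Return the raw bytes of every image listed in record["images"].

    Assumption: each entry of `images` is a member path inside images.zip.
    zipfile raises KeyError if a listed name is not in the archive.
    """
    with zipfile.ZipFile(zip_path) as zf:
        return {name: zf.read(name) for name in record["images"]}
```

Keeping prompt construction and image reading separate makes it easy to attach the images in whatever multimodal message format a given model API expects.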

M-CUBE: 3D cube assemblies built from six jigsaw pieces, inspired by Happy Cube puzzles.

- image: image of the 6 jigsaw pieces.
- face_arrays: the 6 jigsaw pieces converted to binary arrays (0 = gap, 1 = bump).
- question: instruction for the Happy Cube puzzle.
- reference_solution: one of the valid solutions.
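Solving M-CUBE means trying each piece in every orientation on every face and checking that neighbouring edges interlock. As an illustration only (not the benchmark's solver or grader), the sketch below assumes each entry of face_arrays is a square 0/1 grid whose border cells encode the piece's bumps and gaps, as in classic Happy Cube pieces; all function names are hypothetical.

```python
from typing import List, Tuple

Grid = List[List[int]]  # one piece: 0 = gap, 1 = bump (assumed square)


def rotate90(piece: Grid) -> Grid:
    """Rotate a square piece 90 degrees clockwise."""
    return [list(row) for row in zip(*piece[::-1])]


def mirror(piece: Grid) -> Grid:
    """Flip a piece left-to-right (i.e. turn it over)."""
    return [row[::-1] for row in piece]


def orientations(piece: Grid) -> List[Grid]:
    """Distinct placements of a piece: 4 rotations of each side (up to 8)."""
    seen: List[Grid] = []
    for base in (piece, mirror(piece)):
        current = base
        for _ in range(4):
            if current not in seen:
                seen.append(current)
            current = rotate90(current)
    return seen


def edges(piece: Grid) -> Tuple[List[int], List[int], List[int], List[int]]:
    """Top, right, bottom, left edges, each read clockwise around the piece."""
    top = list(piece[0])
    right = [row[-1] for row in piece]
    bottom = piece[-1][::-1]
    left = [row[0] for row in piece][::-1]
    return top, right, bottom, left


def edges_interlock(edge_a: List[int], edge_b: List[int]) -> bool:
    """Necessary condition for two faces to meet along one cube edge.

    Both edges are read clockwise around their own piece, so the shared
    edge runs in opposite directions and edge_b is reversed. Interior cells
    must be complementary (exactly one bump); corner cells are shared by
    three faces and are deliberately not checked here.
    """
    b = edge_b[::-1]
    return all(x + y == 1 for x, y in zip(edge_a[1:-1], b[1:-1]))
```

A full solver would additionally check that each cube corner is filled by exactly one of its three faces; that constraint is omitted to keep the sketch short.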

Evaluation

For evaluation scripts, please refer to the 🔗 Code repository.

Overall Results

Performance on M-PORTAL:

| Model | Plan-correctness (F1 %) | Fill-the-blanks (Acc %) |
|---|---|---|
| GPT-o3 | 6.6 | 17.6 |
| Gemini-2.5-pro | 4.7 | 16.1 |
| DeepSeek-R1-0528* | 0.0 | 8.4 |
| Claude-3.7-Sonnet | 6.3 | 6.8 |
| DeepSeek-R1* | 6.1 | 5.5 |
| Seed1.5-VL | 7.6 | 3.5 |
| GPT-o4-mini | 0.0 | 3.1 |
| GPT-4o | 6.5 | 0.4 |
| Llama-4-Scout | 6.5 | 0.2 |
| Qwen2.5-VL-72B | 6.6 | 0.2 |
| InternVL3-78B | 6.4 | 0.0 |
| Qwen3-235B-A22B* | 0.0 | 0.0 |
| Random | 6.1 | 3e-3 |
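M-PORTAL is scored with F1 on plan-correctness and accuracy on fill-the-blanks. The sketch below shows a standard positive-class F1 and an exact-match accuracy, both in percent; which label counts as positive and how answers are normalized (here, only whitespace stripping) are assumptions, and the official evaluation code may compute these metrics differently.

```python
from typing import Sequence


def binary_f1(preds: Sequence[bool], labels: Sequence[bool]) -> float:
    """F1 score of the positive class, in percent."""
    if len(preds) != len(labels):
        raise ValueError("preds and labels must have the same length")
    pairs = list(zip(preds, labels))
    tp = sum(1 for p, l in pairs if p and l)
    fp = sum(1 for p, l in pairs if p and not l)
    fn = sum(1 for p, l in pairs if not p and l)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 100.0 * 2 * precision * recall / (precision + recall)


def exact_match_accuracy(preds: Sequence[str], answers: Sequence[str]) -> float:
    """Percentage of predictions equal to the reference after stripping whitespace."""
    if len(preds) != len(answers):
        raise ValueError("preds and answers must have the same length")
    if not answers:
        return 0.0
    hits = sum(1 for p, a in zip(preds, answers) if p.strip() == a.strip())
    return 100.0 * hits / len(answers)
```

F1 rather than plain accuracy is informative when one class is rare, since always predicting the majority class then yields an F1 of 0 for the positive class.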

Performance on M-CUBE:

| Model | CUBE (Acc %) | CUBE-easy (Acc %) |
|---|---|---|
| GPT-o3 | 0.0 | 72.0 |
| GPT-o4-mini | 0.0 | 16.0 |
| DeepSeek-R1* | 0.0 | 14.0 |
| Gemini-2.5-pro | 0.0 | 11.0 |
| DeepSeek-R1-0528* | 0.0 | 8.0 |
| Claude-3.7-Sonnet | 0.0 | 7.4 |
| InternVL3-78B | 0.0 | 2.8 |
| Seed1.5-VL | 0.0 | 2.0 |
| GPT-4o | 0.0 | 2.0 |
| Qwen2.5-VL-72B | 0.0 | 2.0 |
| Llama-4-Scout | 0.0 | 1.6 |
| Qwen3-235B-A22B* | 0.0 | 0.3 |
| Random | 1e-5 | 3.1 |

Contact

- Yulun Jiang

BibTeX

@article{jiang2025marble,
  title={MARBLE: A Hard Benchmark for Multimodal Spatial Reasoning and Planning},
  author={Jiang, Yulun and Chai, Yekun and Brbi{\'c}, Maria and Moor, Michael},
  journal={arXiv preprint arXiv:2506.22992},
  year={2025},
  url={https://arxiv.org/abs/2506.22992}
}